In computational linguistics and artificial intelligence, building models that can reason through mathematics the way people do has proven to be a persistent and difficult challenge.

WizardMath-7B-V1.1 is a notable step in that effort: a 7-billion-parameter model that reads mathematical word problems and works through them step by step, with accuracy that rivals far larger systems on some benchmarks. It is not just another incremental Large Language Model (LLM) release; it is a significant advance for open models in mathematical reasoning.

WizardMath-7B-V1.1 is more than a general-purpose chatbot with some math ability. It is the result of a training pipeline built specifically for mathematical reasoning, combining supervised fine-tuning on math instruction data with reinforcement learning.

Why WizardMath-7B-V1.1 Is a Mathematical Genius

Looking at WizardMath-7B-V1.1 more closely, what stands out is not a single new algorithm but how well a relatively small model handles complex, multi-step math problems. Three points explain why it has drawn attention:

- Performance Metrics: WizardMath-7B-V1.1 reports a Pass@1 of 83.2% on GSM8k and 33.0% on MATH, two standard benchmarks for mathematical problem-solving (GSM8k covers grade-school word problems; MATH covers harder, competition-level problems).

- Competitive Edge: On GSM8k, it scores above several larger closed-source models, including ChatGPT, Claude Instant 1, and PaLM 2 540B, as well as the other open-source 7B models it was compared against.

- Innovative Training: Its results are attributed to a training recipe built around Reinforced Evol-Instruct (RLEIF), which combines evolved math instruction data with reinforcement learning to improve the accuracy of the model's step-by-step solutions.

Taken together, these points suggest that careful data construction and training can matter as much as raw model size, and they change what we should expect from small models that work with the language of logic and numbers.

WizardMath-7B-V1.1 Benchmarks

WizardMath-7B-V1.1's mathematical reasoning is tested primarily on GSM8k, a dataset of grade-school math word problems that each take several steps of arithmetic reasoning to solve.

GSM8K

The GSM8k benchmarks put WizardMath-7B-V1.1 in a competitive arena with other leading models. Here's how it stands:

- WizardMath-7B-V1.1 achieves a Pass@1 of 83.2%, ahead of every closed-source model in the comparison below by 2.3 to 2.5 percentage points.

- Claude Instant 1 follows at 80.9%, ChatGPT at 80.8%, and PaLM 2 540B at 80.7%. WizardMath reaches its score with only 7 billion parameters, far fewer than PaLM 2's 540 billion (the parameter counts of ChatGPT and Claude Instant have not been published), which suggests its training makes efficient use of a small model.

- The improvement over WizardMath's previous version is substantial: WizardMath-7B-V1.0 scored 54.9% on the same benchmark, so V1.1 gains 28.3 percentage points. Part of that gain likely comes from the base model (V1.0 was built on Llama 2 7B, while V1.1 is trained from Mistral-7B) and part from the refined training regime.
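For readers unfamiliar with the metric, Pass@1 is the fraction of problems a model solves in a single attempt. The sketch below uses the widely used unbiased pass@k estimator from the code-generation evaluation literature; with one sample per problem (as with greedy decoding), it reduces to plain accuracy. The function names are illustrative and are not taken from the WizardMath evaluation scripts.

```python
from math import comb
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: number of sampled solutions, c: how many were correct, k: attempt budget.
    Returns the probability that at least one of k samples drawn without
    replacement from the n is correct.
    """
    if n - c < k:
        # Every size-k subset must contain at least one correct sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: List[Tuple[int, int]], k: int) -> float:
    """Average pass@k over a benchmark, given (n, c) pairs per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# With greedy decoding there is one sample per problem (n = 1),
# so Pass@1 is simply the share of problems answered correctly.
greedy_results = [(1, 1), (1, 0), (1, 1), (1, 1)]
print(mean_pass_at_k(greedy_results, k=1))  # 0.75
```

With several samples per problem, pass@1 becomes c/n averaged over problems, which is why the same estimator covers both sampled and greedy evaluation.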

Broader Spectrum of Competence:

A broader look at the field shows a spectrum of competencies among various models:

- Models like MPT-7B and Falcon-7B sit at the low end, both scoring 6.8%.

- LLaMA variants span a range, with LLaMA-1-7B at 11.0% and LLaMA-2-7B slightly higher at 14.6%.

- Notably, WizardMath-13B-V1.0 shows a strong performance with 63.9% and WizardMath-70B-V1.0 even higher at 81.6%, indicating that larger model sizes in the WizardMath family also exhibit robust mathematical capabilities.
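The gaps between these models are easy to lose track of. The short sketch below tabulates the GSM8k Pass@1 figures quoted in this article and computes each model's distance from WizardMath-7B-V1.1 in percentage points. It only restates the article's numbers; it does not produce any new results.

```python
# GSM8k Pass@1 scores (%) as quoted in this article.
gsm8k_scores = {
    "WizardMath-7B-V1.1": 83.2,
    "WizardMath-70B-V1.0": 81.6,
    "Claude Instant 1": 80.9,
    "ChatGPT": 80.8,
    "PaLM 2 540B": 80.7,
    "WizardMath-13B-V1.0": 63.9,
    "WizardMath-7B-V1.0": 54.9,
    "LLaMA-2-7B": 14.6,
    "LLaMA-1-7B": 11.0,
    "MPT-7B": 6.8,
    "Falcon-7B": 6.8,
}


def gaps_from(reference: str, scores: dict) -> dict:
    """Percentage-point gap between the reference model and every other model."""
    base = scores[reference]
    return {name: round(base - s, 1) for name, s in scores.items() if name != reference}


gaps = gaps_from("WizardMath-7B-V1.1", gsm8k_scores)
# Print the closest competitors first.
for name, gap in sorted(gaps.items(), key=lambda item: item[1]):
    print(f"{name:<22} {gap:+.1f} points behind")
```

Rounding to one decimal keeps floating-point noise (for example 83.2 - 54.9 evaluating to 28.300000000000004) out of the printed table.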

Reproducibility and Systematic Evaluation:

The developers of WizardMath emphasize that the performance of their models is 100% reproducible, provided that the evaluation follows the prescribed steps. This underscores a commitment to transparency and reliability in the model's capabilities. Furthermore, the evaluation process uses a consistent system prompt format, ensuring that the comparisons across different models are equitable and coherent.

Significance of the Benchmarks:

The GSM8k results are more than a leaderboard position. They reflect the training work behind WizardMath-7B-V1.1, and they point to its potential in industries that rely on mathematical modeling and problem-solving.

Moreover, these benchmarks are a window into the future of AI in education, where such models could potentially assist in personalized learning and tutoring, helping students grasp complex mathematical concepts with ease and confidence.

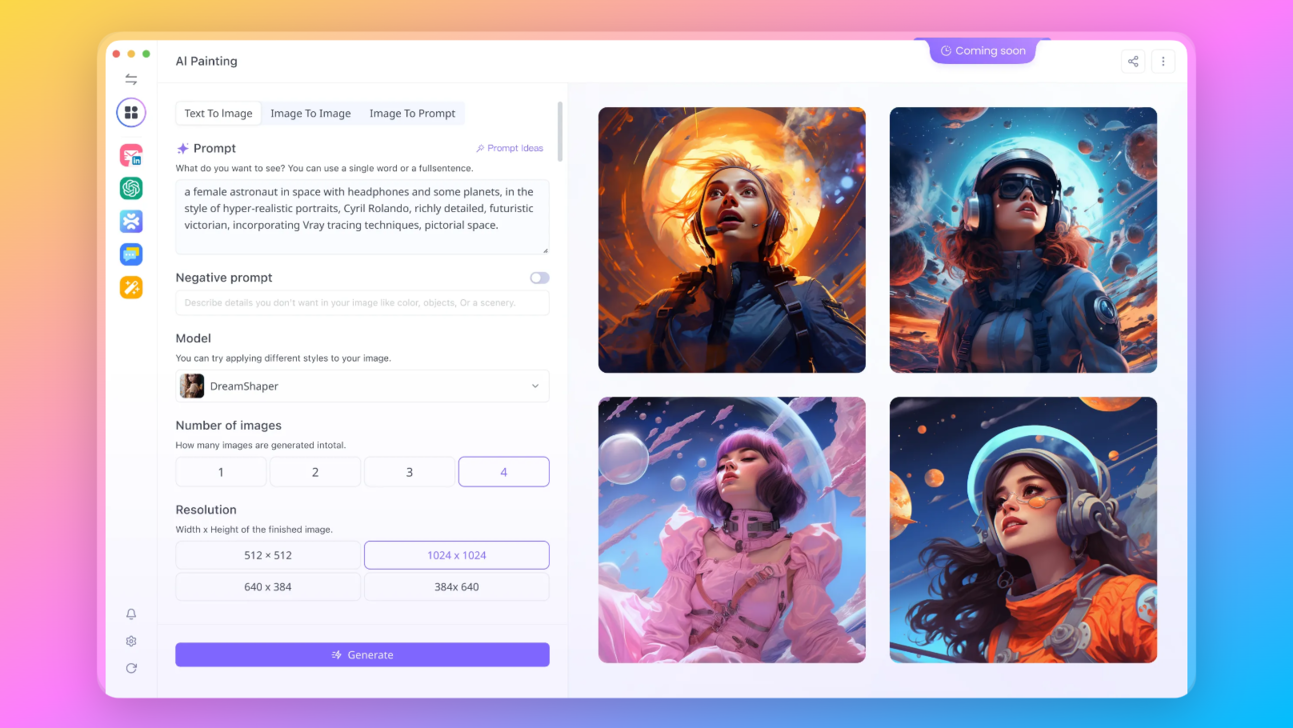

Can You Try Open Source LLMs Online?

Yes! Anakin AI is one of the most convenient ways to test out some of the most popular AI models without downloading them.

Here are the other Open Source and Free Models that Anakin AI supports:

- Mistral 7B and 8x7B: the hottest names for Open Source LLMs!

- Dolphin-2.5-Mixtral-8x7b: get a taste of the wild west of uncensored Mixtral 8x7B!

- OpenHermes-2.5-Mistral-7B: One of the best performing Mistral-7B fine tune models, give it a shot!

- OpenChat: now you can build Open Source Language Models, even if your data is imperfect!

Other models include:

- GPT-4: Boasting an impressive context window of up to 128k, this model takes deep learning to new heights.

- Google Gemini Pro: Google's AI model designed for precision and depth in information retrieval.

- DALLE 3: Create stunning, high-resolution images from textual descriptions.

- Stable Diffusion: Generate images with a unique artistic flair, perfect for creative projects.

Want to test out all these awesome LLMs online? Try Anakin AI!


The Training Process of WizardMath-7B-V1.1

WizardMath-7B-V1.1 is trained with machine learning techniques tailored specifically to mathematical reasoning. Let's look at the main components of that process to understand where the model's mathematical ability comes from.

Conceptual Framework

At the heart of WizardMath's training lies Reinforced Evol-Instruct, abbreviated RLEIF (Reinforcement Learning from Evol-Instruct Feedback). It combines evolved instruction data with reinforcement learning, all within the structure of instruction tuning.

- Evol-Instruct: Despite the name, this is not an evolutionary optimization of model parameters. Evol-Instruct uses an LLM to rewrite existing training problems into new variants, so it is the data that evolves, not the weights. For math, WizardMath evolves problems both "downward" (simpler versions) and "upward" (harder versions with added constraints or steps), producing a larger and more diverse instruction set.

- Reinforcement Learning (RL): RL trains a model through rewards and penalties. In WizardMath, the rewards come from learned reward models: an instruction reward model that rates the quality of evolved problems, and a process-supervised reward model that scores each step of a solution rather than only the final answer. The model is then optimized against these rewards (the WizardMath paper uses PPO).

- Instruction Tuning: training the model on instruction-response pairs, which helps it understand and follow complex, multi-step mathematical problems.
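To make the "evolve the data, not the weights" idea concrete, here is a minimal sketch of an Evol-Instruct-style loop. The two evolution prompts are hypothetical and written for illustration only; they are not the prompts used by the WizardMath authors. The `rewrite` argument stands in for a call to an LLM, and a stub replaces it so the sketch runs without a model. A real pipeline would also score or filter the evolved problems before training on them.

```python
import random
from typing import Callable, List

# Hypothetical evolution prompts (illustrative, NOT the WizardMath originals).
UPWARD = ("Rewrite the problem below so it is harder, for example by adding "
          "a constraint or an extra reasoning step.\n\nProblem: {problem}")
DOWNWARD = ("Rewrite the problem below into an easier version of the same idea."
            "\n\nProblem: {problem}")


def evolve(problems: List[str], rewrite: Callable[[str], str],
           rounds: int = 1, seed: int = 0) -> List[str]:
    """Grow an instruction pool by repeatedly rewriting problems upward or downward.

    Each round evolves the problems produced in the previous round, so
    difficulty can drift further from the seed set over several rounds.
    """
    rng = random.Random(seed)
    pool = list(problems)
    frontier = list(problems)
    for _ in range(rounds):
        next_frontier = []
        for problem in frontier:
            template = rng.choice([UPWARD, DOWNWARD])
            next_frontier.append(rewrite(template.format(problem=problem)))
        pool.extend(next_frontier)
        frontier = next_frontier
    return pool


def fake_llm(prompt: str) -> str:
    """Stub 'LLM' that tags the problem instead of really rewriting it."""
    kind = "harder" if prompt.startswith("Rewrite the problem below so it is harder") else "easier"
    return f"[{kind}] " + prompt.split("Problem: ", 1)[1]


seed_problems = ["Tom has 3 apples and buys 5 more. How many does he have?"]
for p in evolve(seed_problems, fake_llm, rounds=2):
    print(p)
```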

Training Steps

The training process of WizardMath-7B-V1.1 can be summarized in the following steps:

- Pre-training on Diverse Data: The base model is first pre-trained on a diverse text corpus, giving it a broad understanding of natural language. For V1.1, that base model is Mistral-7B, pre-trained by Mistral AI.

- Fine-tuning on Mathematical Data: The model undergoes fine-tuning with a dataset specifically curated for mathematical reasoning, which includes problem statements, solutions, and step-by-step explanations.

- Reinforcement Learning Phase: Using RLEIF, the model works through a series of problems and is rewarded according to the reward models' scores, helping it adapt its problem-solving strategy.

- Evaluative Feedback Loop: The model's outputs are continuously evaluated against correct solutions, and feedback is provided, forming a feedback loop that further refines its accuracy.
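To show what process supervision means in practice, here is a toy sketch of folding per-step scores and an instruction-quality score into one scalar reward for RL. Everything in it is an assumption for illustration: the scores are hand-written stand-ins for reward-model outputs, taking the minimum step score is just one common aggregation choice, and multiplying by the instruction score is a simplification, not a description of WizardMath's exact formula.

```python
from typing import List


def process_reward(step_scores: List[float]) -> float:
    """Aggregate per-step scores (each in [0, 1]) into one solution score.

    Using the minimum means a single bad step caps the reward for the whole
    solution, even if the final answer happens to be right.
    """
    if not step_scores:
        raise ValueError("a solution needs at least one step")
    return min(step_scores)


def total_reward(instruction_score: float, step_scores: List[float]) -> float:
    """Combine instruction quality and solution quality into one scalar reward."""
    return instruction_score * process_reward(step_scores)


# Hypothetical scores: a well-formed problem, a solution with one shaky step.
print(round(total_reward(0.9, [0.95, 0.4, 0.9]), 2))  # 0.36
```

Scoring every step, rather than only the final answer, penalizes solutions that reach the right number through flawed reasoning.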

How to Fine-tune WizardMath-7B-V1.1, Step-by-Step:

Fine-tuning WizardMath-7B-V1.1 uses WizardMath's training script on top of the Llama-X codebase. This supervised fine-tuning (SFT) phase is critical for building the model's ability to carry out complex mathematical reasoning. Below, we outline the steps and provide a sample command for this phase.

Steps for Supervised Fine-tuning:

- Environment Setup: Begin by setting up the environment according to Llama-X's documentation. Ensure all dependencies, including `deepspeed==0.10.0` and `transformers==4.31.0`, are correctly installed.

- Training Script Replacement: Replace the default `train.py` with the `train_wizardmath.py` script from the WizardMath repository.

- Hugging Face Authentication: Log in to Hugging Face using the CLI to access models and datasets: `huggingface-cli login`

- Executing the Training Command: Use the `deepspeed` CLI to start the fine-tuning process with the specified hyperparameters:

```bash
deepspeed train_wizardmath.py \
    --model_name_or_path "/your/path/to/llama-2-13b" \
    --data_path "/your/path/to/math_instruction_data.json" \
    --output_dir "/your/path/to/save_ckpt" \
    --num_train_epochs 3 \
    --model_max_length 2048 \
    --per_device_train_batch_size 16 \
    --per_device_eval_batch_size 1 \
    --gradient_accumulation_steps 1 \
    --evaluation_strategy "no" \
    --save_strategy "steps" \
    --save_steps 50 \
    --save_total_limit 50 \
    --learning_rate 2e-5 \
    --warmup_steps 10 \
    --logging_steps 2 \
    --lr_scheduler_type "cosine" \
    --report_to "tensorboard" \
    --gradient_checkpointing True \
    --deepspeed config/deepspeed_config.json \
    --fp16 True
```

- Monitoring and Adjustments: Continuously monitor the training process via TensorBoard and make adjustments as needed to optimize the learning rate, batch size, or other parameters.
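Two quantities implied by the deepspeed command are worth working out by hand. The effective batch size is the per-device batch size times the gradient-accumulation steps times the number of GPUs; the GPU count is not stated above, so 8 is assumed here purely for illustration. The `--lr_scheduler_type "cosine"` and `--warmup_steps 10` flags request linear warmup followed by cosine decay. The sketch below reproduces that shape in plain Python, mirroring the formula of Hugging Face's `get_cosine_schedule_with_warmup`, with a hypothetical total of 1,000 optimizer steps. It also assumes the DeepSpeed config file does not override the scheduler.

```python
import math

# Values taken from the deepspeed command above.
per_device_train_batch_size = 16
gradient_accumulation_steps = 1
peak_lr = 2e-5
warmup_steps = 10

# Assumptions for illustration only (not stated in the command).
# In practice total_steps depends on dataset size and --num_train_epochs 3.
num_gpus = 8
total_steps = 1000

effective_batch_size = per_device_train_batch_size * gradient_accumulation_steps * num_gpus
print(f"effective batch size: {effective_batch_size}")  # 128


def cosine_with_warmup(step: int) -> float:
    """Learning rate at an optimizer step: linear warmup, then cosine decay to 0."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return peak_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


for step in (0, 5, 10, 505, 1000):
    print(step, f"{cosine_with_warmup(step):.2e}")
```

The learning rate peaks at 2e-5 after the 10 warmup steps, falls to half of that at the midpoint of the remaining steps, and reaches zero at the end of training.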

Code License and Legal Compliance:

It's essential to note that all code and data are subject to strict auditing and legal review. The researchers involved in the development of WizardMath-7B-V1.1 must comply with open-source policies and regulations, ensuring that all releases are authorized by the legal team.

Inference and Evaluation of WizardMath-7B-V1.1

After fine-tuning, the next step is to deploy WizardMath for inference and evaluate its performance on benchmark datasets like GSM8k and MATH.

Setting Up the Inference Environment:

```bash
conda create -n wizardmath python=3.8 -y
conda activate wizardmath
pip install vllm
pip install jsonlines
pip install Fraction
pip install openai
cd WizardMath
```

Running the Decoding Script:

For GSM8k benchmarks, a sample inference command might look like this:

```bash
python inference/gsm8k_inference.py --data_file data/gsm8k_test.jsonl \
    --model "/your/path/to/load_ckpt" --batch_size 60 --tensor_parallel_size 1
```

And for the MATH benchmarks:

```bash
python inference/MATH_inference.py --data_file data/MATH_test.jsonl \
    --model "/your/path/to/load_ckpt" --batch_size 50 --tensor_parallel_size 1
```

These commands will read the input file, generate responses for each sample, and calculate the score, thereby providing a quantitative assessment of the model's mathematical reasoning capabilities.
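To illustrate what "calculate the score" involves for GSM8k, here is a minimal, self-contained sketch. It assumes records in the standard GSM8k JSONL layout, where each line has a `question` and an `answer` whose last line is `#### <number>`. Reading the number after "The answer is" (falling back to the last number in the text) is an assumed convention, and `gold_answer`, `extract_prediction`, and `score` are hypothetical helper names; the official WizardMath scripts may parse outputs differently.

```python
import json
import re
from fractions import Fraction
from typing import List, Optional

# Integers with optional thousands separators, decimals, or simple fractions.
NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?(?:/\d+)?")


def to_number(text: str) -> Optional[Fraction]:
    """Parse '1,234', '3.5' or '7/2' into an exact Fraction; None if it fails."""
    try:
        return Fraction(text.replace(",", ""))
    except (ValueError, ZeroDivisionError):
        return None


def gold_answer(answer_field: str) -> Optional[Fraction]:
    """GSM8k reference answers end with '#### <number>'."""
    return to_number(answer_field.split("####")[-1].strip())


def extract_prediction(completion: str) -> Optional[Fraction]:
    """Take the first number after 'The answer is', else the last number in the text."""
    marker = "The answer is"
    if marker in completion:
        matches = NUMBER.findall(completion.split(marker)[-1])
        return to_number(matches[0]) if matches else None
    matches = NUMBER.findall(completion)
    return to_number(matches[-1]) if matches else None


def score(jsonl_lines: List[str], completions: List[str]) -> float:
    """Accuracy (Pass@1 with one completion per problem) over GSM8k-style records."""
    correct = 0
    for line, completion in zip(jsonl_lines, completions):
        record = json.loads(line)
        gold = gold_answer(record["answer"])
        pred = extract_prediction(completion)
        correct += int(gold is not None and pred == gold)
    return correct / len(jsonl_lines)


records = [
    json.dumps({"question": "Tom has 3 apples and buys 5 more. How many now?",
                "answer": "3 + 5 = 8\n#### 8"}),
    json.dumps({"question": "A pen costs $2. How much do 6 pens cost?",
                "answer": "2 * 6 = 12\n#### 12"}),
]
outputs = [
    "Tom starts with 3 and adds 5, so 3 + 5 = 8. The answer is: 8.",
    "Each pen is 2 dollars, so 6 pens cost 2 * 6 = 10. The answer is: 10",
]
print(score(records, outputs))  # 0.5
```

Comparing exact `Fraction` values rather than floats avoids false mismatches such as "0.5" versus "1/2".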

Inference Prompt:

The inference prompt used for WizardMath is designed to guide the model in a structured manner for problem-solving:

```
Below is an instruction that describes a task. Write a response that appropriately completes the request.

### Instruction:
{instruction}

### Response:
Let's think step by step.
```

By adhering to this prompt format, WizardMath is better positioned to understand and respond to the task at hand, utilizing the "Chain of Thought" (CoT) reasoning process for complex math problems.
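A small helper that fills the template with a concrete problem can be handy when calling the model from your own code. The line breaks used here are an assumption reconstructed from the template above, and `build_prompt` is a hypothetical name; check the exact whitespace against the official inference scripts, since small formatting differences can affect results.

```python
PROMPT_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Response:\nLet's think step by step."
)


def build_prompt(instruction: str) -> str:
    """Insert a math problem into the WizardMath-style Chain-of-Thought prompt."""
    return PROMPT_TEMPLATE.format(instruction=instruction.strip())


print(build_prompt("What is 15% of 80?"))
```

Ending the prompt with "Let's think step by step." nudges the model to write out its reasoning before the final answer, which is what the Chain of Thought format relies on.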

See the WizardMath GitHub page to learn more about the training process.

Conclusion

WizardMath-7B-V1.1 shows how much a carefully trained 7B model can achieve in mathematics, outscoring much larger closed-source models on GSM8k. It is a clear demonstration of what machine learning can do in this domain, and a strong foundation for further progress in mathematical reasoning and beyond.