Microsoft's Phi-2 model is changing the game for small language models. With 2.7 billion parameters, it is Microsoft's latest step in AI development, designed to reason well for its size and to do so safely. Phi-2 isn't just about numbers; it's about a smarter, safer way for computers to understand and interact with the world.

Satya announcing Phi-2, says will be open source 🤔, and is 50% better at mathematical reasoning pic.twitter.com/y2UyrRdvCu

— anton (@abacaj) November 15, 2023

Phi-2 stands out because it's been taught with a mix of new language data and careful checks to make sure it acts right. It's built to do many things like writing, summarizing texts, and coding, but with better common sense and understanding than its earlier version, Phi-1.5.

Microsoft's Phi-2: Defying Conventional Scaling Laws

In its latest update, Microsoft published a blog post on the surprising power of small language models.

Benchmarking Phi-2:

In the rapidly evolving landscape of AI language models, benchmarks are crucial for assessing performance. Microsoft's Phi-2 model, though smaller in size, demonstrates impressive proficiency across various domains:

- Size: Phi-2 has 2.7 billion parameters, fewer than any of the models it is compared against below.

- Language Understanding: With a score of 62.0, it showcases a strong grasp of language nuances.

- Math: It achieves a notable 61.1 in mathematical reasoning, a domain typically challenging for AI.

- Coding: Phi-2 scores 53.7, reflecting its capability in understanding and generating code.

The benchmarks present Phi-2 as a potent model, particularly considering its size relative to competitors:

| Model | Size | Language Understanding | Math | Coding |
|---|---|---|---|---|
| Llama-2 | 7B | 56.7 | 16.5 | 21.0 |
| Llama-2 | 13B | 61.9 | 34.2 | 25.4 |
| Llama-2 | 70B | 67.6 | 64.1 | 38.3 |
| Mistral | 7B | 63.7 | 46.4 | 39.4 |
| Phi-2 | 2.7B | 62.0 | 61.1 | 53.7 |

These figures underscore Phi-2's competitive edge:

- Efficiency: Phi-2 operates with fewer parameters, indicating a more efficient use of computational resources.

- Versatility: The model's balanced performance across different fields suggests versatility, an asset for practical applications.

- Potential: Phi-2's capabilities hint at a future where smaller, more efficient models could democratize AI access.
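To make the comparison concrete, here is a small sketch that re-reads the benchmark table programmatically. The scores are copied from the table above; the helper names (`mean_score`, `models_beaten_by`) are made up for this illustration, and the unweighted mean over three unrelated benchmarks is only a rough summary, not an official metric.

```python
# Scores copied from the benchmark table:
# model -> (size in billions of parameters, language understanding, math, coding)
BENCHMARKS = {
    "Llama-2 7B": (7.0, 56.7, 16.5, 21.0),
    "Llama-2 13B": (13.0, 61.9, 34.2, 25.4),
    "Llama-2 70B": (70.0, 67.6, 64.1, 38.3),
    "Mistral 7B": (7.0, 63.7, 46.4, 39.4),
    "Phi-2": (2.7, 62.0, 61.1, 53.7),
}
CATEGORIES = ("language understanding", "math", "coding")


def mean_score(model):
    """Unweighted mean of the three benchmark scores (a rough summary only)."""
    return sum(BENCHMARKS[model][1:]) / len(CATEGORIES)


def models_beaten_by(model, category):
    """List the models that score strictly lower than `model` in `category`."""
    idx = CATEGORIES.index(category) + 1  # +1 skips the size column
    own = BENCHMARKS[model][idx]
    return [m for m, row in BENCHMARKS.items() if m != model and row[idx] < own]


# Print the models from highest to lowest mean score
for name in sorted(BENCHMARKS, key=mean_score, reverse=True):
    size = BENCHMARKS[name][0]
    print(f"{name:<12} {size:>5.1f}B  mean={mean_score(name):.1f}")

for category in CATEGORIES:
    print(f"Phi-2 beats on {category}: {models_beaten_by('Phi-2', category)}")
```

Read this way, Phi-2 leads every listed model on coding, trails only Llama-2 70B on math, and sits between Llama-2 13B and Mistral 7B on language understanding.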

Small Language Models, Smaller, But Better

Phi-2's achievement in the realm of small language models (SLMs) is noteworthy, with significant implications for the efficiency and capabilities of AI systems:

- Strategic Training Data Selection: The importance of high-quality training data is accentuated, with an emphasis on "textbook-quality" datasets.

- Scaled Knowledge Transfer: Utilizing knowledge from the previous Phi-1.5 model has proven to be an effective method for improving performance in Phi-2.

- Benchmarks Across Domains: Phi-2 showcases superior performance across various academic benchmarks, notably in commonsense reasoning, language understanding, math, and coding, even when compared to larger models.

- Training Efficiency and Safety: The model's efficiency is highlighted by its 14-day training period on 96 A100 GPUs, and it has shown better behavior with respect to toxicity and bias without reinforcement learning or instruct fine-tuning.
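The training budget quoted in the last bullet is easier to compare with other models once it is converted to GPU-hours. The arithmetic below uses only the two figures from the text (14 days, 96 A100 GPUs) and assumes the GPUs ran around the clock, which the source does not state explicitly.

```python
TRAINING_DAYS = 14   # from the text
NUM_GPUS = 96        # A100 GPUs, from the text
HOURS_PER_DAY = 24   # assumes continuous training

gpu_days = TRAINING_DAYS * NUM_GPUS
gpu_hours = gpu_days * HOURS_PER_DAY

print(f"{gpu_days} GPU-days, or {gpu_hours} GPU-hours")
```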

On aggregated benchmarks, Phi-2 holds its own against larger models, which shows that a small model can match or even beat bigger counterparts. This is particularly evident in the comparison with Google Gemini Nano 2, where Phi-2 comes out ahead despite its smaller size.

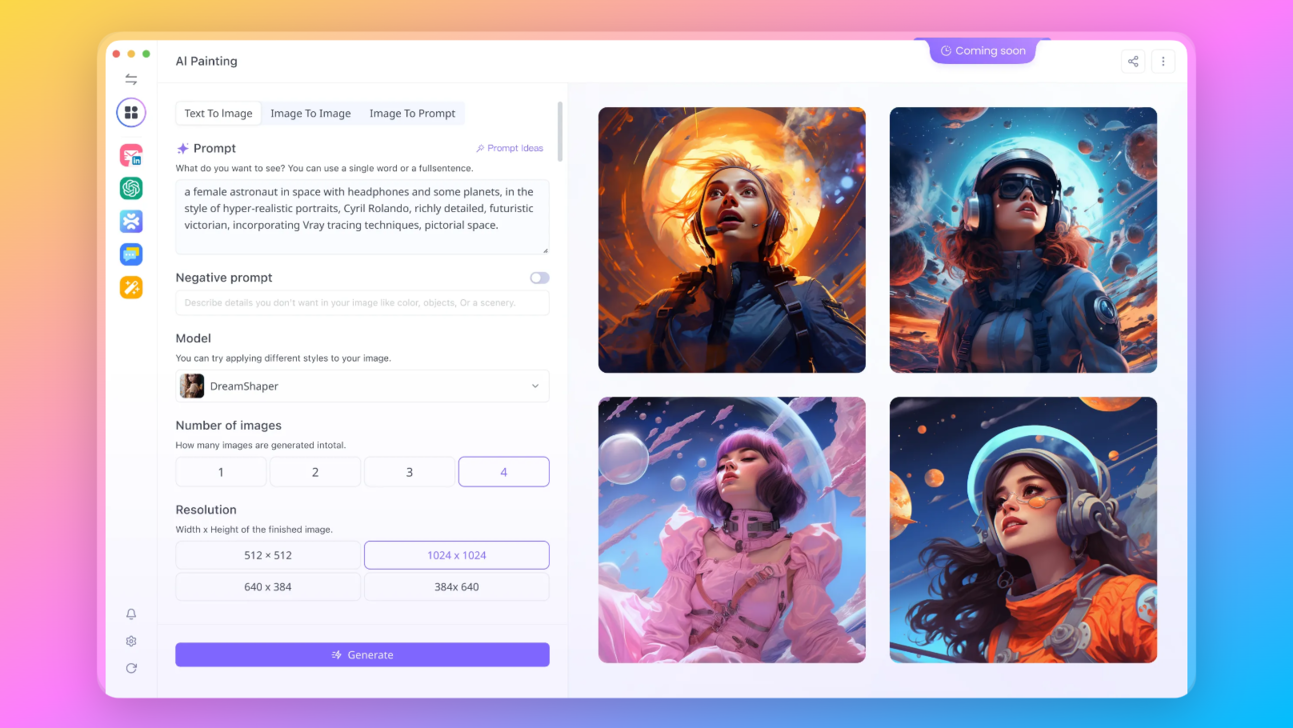

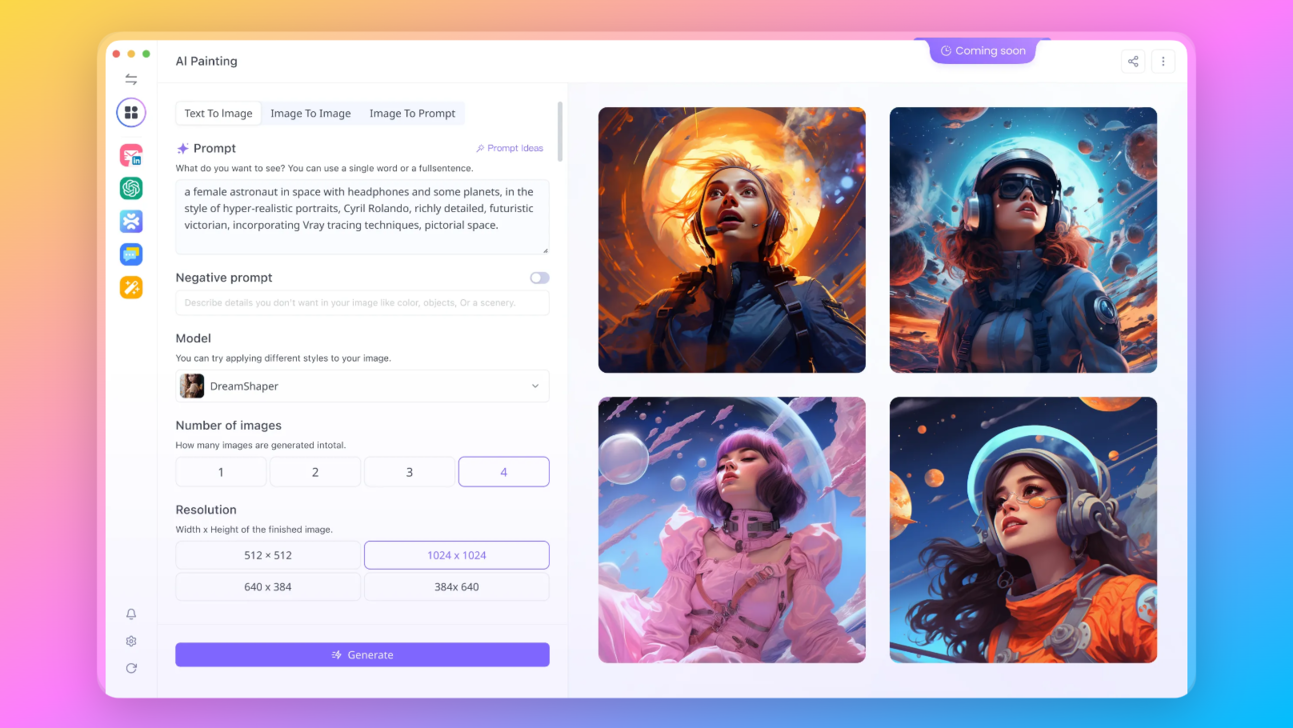

How Can I Try Open Source LLMs Online?

You can test out these awesome Open Source LLMs online with Anakin AI!

Anakin AI is one of the most convenient ways to test out some of the most popular AI models without downloading them!

Here are the other Open Source and Free Models that Anakin AI supports:

- Mistral 7B and 8x7B: the hottest names for Open Source LLMs!

- Dolphin-2.5-Mixtral-8x7b: get a taste of the wild west of uncensored Mixtral 8x7B!

- OpenHermes-2.5-Mistral-7B: One of the best performing Mistral-7B fine-tuned models, give it a shot!

- OpenChat: now you can build Open Source Language Models, even if your data is imperfect!

Other models include:

- GPT-4: Boasting an impressive context window of up to 128k tokens, this model takes deep learning to new heights.

- Google Gemini Pro: Google's AI model designed for precision and depth in information retrieval.

- DALL·E 3: Create stunning, high-resolution images from textual descriptions.

- Stable Diffusion: Generate images with a unique artistic flair, perfect for creative projects.

Want to test out all these awesome LLMs online? Try Anakin AI!


Tutorial: Running phi-2 Locally and on Google Colab for Free

Here's your guide to running phi-2, a potent 2.7B parameter language model, both locally and on Google Colab.

Running phi-2 on Google Colab

- Access the Notebook: Use our specially prepared Colab Notebook. It's user-friendly and perfect for beginners.

- Follow the Instructions: The notebook is equipped with detailed steps to get phi-2 up and running without any hassles.

Running phi-2 Locally

For local execution, you'll need a GPU (at least T4) and the following setup:

- Install Dependencies: Use `pip` to install `sentencepiece`, `transformers`, `accelerate`, and `einops`.

- Verify GPU Availability: Ensure your GPU is detected with `torch.cuda.is_available()`.

- Download phi-2: Utilize `snapshot_download` from `huggingface_hub` to download the phi-2 model from the Hugging Face repository.

- Load the Model and Tokenizer: Set up the tokenizer and model using `AutoTokenizer` and `AutoModelForCausalLM` from the `transformers` package. The model automatically adapts to your GPU settings.

- Optimize Memory Usage: Optionally, switch to `float16` for reduced memory usage, requiring only 6.7 GB of VRAM.

- Generate Text: Use the provided `generate` function to create text based on prompts. The script includes performance metrics like speed and token generation time.
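The local setup steps above can be sketched roughly as follows. This is one possible version, not the script the steps refer to: the helper names `weight_memory_gb`, `load_phi2`, and `generate` are chosen for this example, the repository ID `microsoft/phi-2` is assumed, and the timing output is a simple stand-in for the performance metrics mentioned. Older `transformers` releases may additionally need `trust_remote_code=True` when loading.

```python
import time


def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Rough size of the weights alone (no activations or CUDA overhead)."""
    return num_params * bytes_per_param / 1e9


def load_phi2(repo_id: str = "microsoft/phi-2", use_float16: bool = True):
    """Download phi-2 and load its tokenizer and model onto the GPU."""
    # Heavy imports live inside the function so the memory helper above
    # can be used on a machine without the GPU stack installed.
    import torch
    from huggingface_hub import snapshot_download
    from transformers import AutoModelForCausalLM, AutoTokenizer

    if not torch.cuda.is_available():
        raise RuntimeError("No CUDA GPU detected; check the driver and PyTorch install.")

    local_dir = snapshot_download(repo_id=repo_id)  # returns the local cache path
    tokenizer = AutoTokenizer.from_pretrained(local_dir)
    model = AutoModelForCausalLM.from_pretrained(
        local_dir,
        torch_dtype=torch.float16 if use_float16 else torch.float32,
        device_map="auto",  # relies on accelerate to place the weights
    )
    return tokenizer, model


def generate(tokenizer, model, prompt: str, max_new_tokens: int = 100) -> str:
    """Continue `prompt` and print a simple tokens-per-second figure."""
    import torch

    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    start = time.perf_counter()
    with torch.no_grad():
        output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    elapsed = time.perf_counter() - start

    new_tokens = output.shape[1] - inputs["input_ids"].shape[1]
    print(f"{new_tokens} tokens in {elapsed:.2f}s ({new_tokens / elapsed:.1f} tokens/s)")
    return tokenizer.decode(output[0], skip_special_tokens=True)
```

On a machine with a suitable GPU, `tokenizer, model = load_phi2()` followed by `print(generate(tokenizer, model, "Once upon a time"))` runs the whole flow. As a sanity check on the memory figure, `weight_memory_gb(2.7e9, 2)` gives about 5.4 GB for the float16 weights alone, which is in line with the 6.7 GB of VRAM quoted above once runtime overhead is added.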

Final Note: Phi-2, as a base model, hasn't been fine-tuned for specific instructions. It excels in general text completion and generation tasks.
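Because phi-2 is a base model, it helps to phrase a request as text the model can naturally complete. The sketch below wraps an instruction in an `Instruct:` / `Output:` layout, which is assumed here from the QA format described on the phi-2 model card; check the card before relying on it. The function names are made up for this example.

```python
def build_qa_prompt(instruction: str) -> str:
    """Wrap an instruction so a base model can complete the answer part."""
    return f"Instruct: {instruction.strip()}\nOutput:"


def extract_answer(generated_text: str, prompt: str) -> str:
    """Drop the prompt and anything after a follow-up 'Instruct:' line.

    Base models often keep writing new question/answer pairs, so the
    completion is cut at the first follow-up instruction.
    """
    if generated_text.startswith(prompt):
        completion = generated_text[len(prompt):]
    else:
        completion = generated_text
    return completion.split("\nInstruct:")[0].strip()


prompt = build_qa_prompt("Summarize why small language models matter.")
print(prompt)
```

The string returned by `build_qa_prompt` is what you would pass to the model, and `extract_answer` tidies up whatever comes back.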

The Capabilities of Phi-2 Compared to Phi-1.5

What's Phi-2, and why is it important? It's the next step in AI models, and here's why:

- More Parameters: At 2.7 billion parameters, Phi-2 is roughly twice the size of Phi-1.5 (1.3 billion), which gives it more capacity to capture the nuances of language.

- Strong Performance: On the benchmarks above, it beats Llama-2 7B and 13B in every category and outscores Mistral 7B on math and coding.

- Better Safety: It's built to be safer and smarter in how it handles tasks.

Phi-2 lets developers do things like:

- Get Precise Answers: It understands complex questions and gives more accurate answers.

- Build Safely: Its focus on safety makes it a solid choice for apps that need careful content handling.

Phi-2 is a big jump forward. It's all about building AI that's smart and does the right thing, which is key for Microsoft's focus on creating responsible AI.

- Is Phi-2 available for use outside of Microsoft's ecosystem?

Yes, while Phi-2 is optimized for integration with Microsoft's services, it can also be utilized in other environments, giving developers flexibility.

- How does Phi-2 ensure data privacy?

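Phi-2 is a set of model weights, so data handling depends on where you run it: when the model runs locally, prompts and outputs stay on your own machine. Its training also emphasized safer behavior, in line with Microsoft's commitment to responsible AI.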

- What kind of tasks can Phi-2 handle?

Phi-2 is versatile, able to draft emails, create stories, summarize texts, and even generate code, all with a deeper understanding of context.

- Will Phi-2's capabilities continue to evolve?

Microsoft is dedicated to ongoing research and development, which means future models in the Phi family are expected to become smarter and more efficient over time.

Integration of Phi Models in Windows AI Studio

Windows AI Studio makes working with Phi-2 straightforward for developers. What is Windows AI Studio exactly? It's a place where developers can go to get tools and AI models for their projects. It's got everything needed to take Phi-2 and tailor it for different uses, from online services to apps that run right on your computer.

Windows AI Studio offers:

- Tools for Developers: It makes setting up and adjusting AI models easy.

- Access to Advanced Models: Not just Phi-2, but also models like Meta’s Llama 2 and Mistral.

Putting Phi-2 in Windows AI Studio means developers have the latest in AI right at their fingertips. It's all about giving them a simple way to use powerful AI tools without getting bogged down in the technical side of things.

Another important update from Microsoft is the hybrid loop development pattern. It lets developers build AI projects that run partly on Microsoft's cloud servers and partly on the developer's local device.
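As a rough illustration of the hybrid idea, the sketch below decides whether a model should run on the local device or in the cloud based on available memory. Everything here is hypothetical: the `LocalDevice` class, the `choose_backend` function, and the headroom policy are invented for this example and are not part of any Microsoft API.

```python
from dataclasses import dataclass


@dataclass
class LocalDevice:
    """Hypothetical description of the developer's machine."""
    has_gpu: bool
    free_memory_gb: float


def choose_backend(model_memory_gb: float, device: LocalDevice,
                   headroom_gb: float = 1.0) -> str:
    """Return "local" if the model fits on the device with headroom, else "cloud"."""
    fits = device.free_memory_gb >= model_memory_gb + headroom_gb
    return "local" if device.has_gpu and fits else "cloud"


laptop = LocalDevice(has_gpu=True, free_memory_gb=8.0)
print(choose_backend(6.7, laptop))   # phi-2 in float16, using the 6.7 GB figure quoted earlier
print(choose_backend(40.0, laptop))  # a much larger model that cannot fit locally
```

A real hybrid setup would weigh latency, cost, and privacy as well; memory is used here only because it is the simplest signal to reason about.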

Introduction to Microsoft Phi-1.5

Let's talk about Microsoft's Phi-1.5, the smaller predecessor to Microsoft's Phi-2 Model.

Image Source: Textbooks Are All You Need II: phi-1.5 technical report

Microsoft Phi-1.5, a 1.3 billion parameter language model, stands out in the AI landscape for its impressive ability to outperform larger models on critical benchmarks. Its design is tailored to tackle complex reasoning and language understanding tasks effectively. This section will guide you through the steps of utilizing Phi-1.5 in your own AI projects.

Step 1: Understanding Phi-1.5's Capabilities

Before diving into implementation, it's crucial to recognize what makes Phi-1.5 suitable for your project:

- Focused Training: Unlike its predecessor Phi-1, which was trained on a mix of high-quality textbook data, Phi-1.5 focuses on synthetic data, enhancing its reasoning abilities.

- Performance: Phi-1.5 competes with models five times its size, excelling in natural language understanding and complex reasoning tasks.

- Toxicity Reduction: With careful training, Phi-1.5 is designed to generate less toxic content compared to other language models.

Step 2: Preparing Your Environment

To start working with Phi-1.5, set up your development environment:

- Ensure you have Python installed on your system.

- Install the necessary libraries, such as `torch`, `transformers`, and `datasets`, along with any other dependencies your project needs.

```bash
pip install torch transformers datasets
```

Step 3: Loading Phi-1.5

With the environment set up, load Phi-1.5 using the Hugging Face transformers library:

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

# The Hugging Face repository ID uses an underscore: microsoft/phi-1_5
tokenizer = AutoTokenizer.from_pretrained("microsoft/phi-1_5")
model = AutoModelForCausalLM.from_pretrained("microsoft/phi-1_5")
```

Step 4: Utilizing Phi-1.5 for Inference

To generate text with Phi-1.5, use the following code snippet:

```python
# Encode some text to generate from.
input_ids = tokenizer.encode("Here is some text to encode: ", return_tensors='pt')

# Generate text using Phi-1.5 model
output = model.generate(input_ids, max_length=50)

# Decode the generated text
decoded_output = tokenizer.decode(output[0], skip_special_tokens=True)
print(decoded_output)
```

This basic example takes a prompt and generates a continuation of the text.
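To see what "generating a continuation" means mechanically, here is a toy sketch of greedy decoding with a tiny word-level bigram model. It is not how `transformers` implements `generate` internally; it only mirrors the idea of repeatedly appending the most likely next token, and, like `max_length=50` in the snippet above, its `max_length` counts the prompt as well as the new words. The corpus and function names are invented for this example.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count which word follows which in a tiny corpus."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def greedy_continue(follows, prompt, max_length):
    """Append the most frequent next word until max_length words or a dead end."""
    words = prompt.split()
    while len(words) < max_length:
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the last word was never followed by anything in the corpus
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


corpus = (
    "phi predicts the next token and phi predicts the next word "
    "then phi predicts the next token"
)
model = train_bigram(corpus)
print(greedy_continue(model, "phi", max_length=5))
```

A real language model replaces the bigram counts with a neural network that scores every token in its vocabulary, but the loop around it has the same shape.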

Step 5: Fine-Tuning Phi-1.5

If Phi-1.5 needs to be fine-tuned on your specific dataset:

- Prepare your dataset in a suitable format.

- Use the Hugging Face `Trainer` API or a custom training loop to fine-tune the model.

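```python
from transformers import DataCollatorForLanguageModeling, Trainer, TrainingArguments

# Batches need a padding token; fall back to EOS if the tokenizer has none
if tokenizer.pad_token is None:
    tokenizer.pad_token = tokenizer.eos_token

# Define training arguments
training_args = TrainingArguments(
    output_dir='./results',
    num_train_epochs=3,
    per_device_train_batch_size=8,
    per_device_eval_batch_size=16,
    warmup_steps=500,
    weight_decay=0.01,
    logging_dir='./logs',
    logging_steps=10,
)

# Initialize the Trainer.
# train_dataset and eval_dataset are placeholders for your own tokenized datasets.
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=eval_dataset,
    # Pads each batch and builds causal LM labels from input_ids
    data_collator=DataCollatorForLanguageModeling(tokenizer, mlm=False),
)

# Start the training
trainer.train()
```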
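The `Trainer` call above expects `train_dataset` and `eval_dataset` to exist already. One common way to prepare them for causal language modeling is to tokenize your text, cut the token stream into fixed-size blocks, and hold some blocks back for evaluation. The sketch below shows that chunking step on plain Python lists; the function names, the block size, and the 10% evaluation split are illustrative choices, not something the steps above prescribe.

```python
def chunk_for_causal_lm(token_ids, block_size):
    """Split a long token sequence into fixed-size training examples.

    Leftover tokens that do not fill a whole block are dropped.
    """
    usable = len(token_ids) - len(token_ids) % block_size
    examples = []
    for start in range(0, usable, block_size):
        block = token_ids[start:start + block_size]
        # For causal LM fine-tuning the labels are the inputs themselves;
        # Hugging Face causal LM models shift them by one position internally.
        examples.append({"input_ids": block, "labels": list(block)})
    return examples


def train_eval_split(examples, eval_fraction=0.1):
    """Hold out the last part of the examples for evaluation (at least one)."""
    n_eval = max(1, int(len(examples) * eval_fraction))
    return examples[:-n_eval], examples[-n_eval:]


# Stand-in for real token IDs, e.g. the output of tokenizer.encode(your_text)
fake_token_ids = list(range(1000))
examples = chunk_for_causal_lm(fake_token_ids, block_size=128)
train_examples, eval_examples = train_eval_split(examples)
print(len(train_examples), "training blocks,", len(eval_examples), "evaluation block(s)")
```

Since every block has the same length, no padding is needed. If you use the `datasets` library, wrapping each list with `Dataset.from_list(...)` is one way to turn these examples into objects the `Trainer` can consume.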

Phi-1.5 offers a compelling combination of performance and practicality, making it an excellent candidate for a wide range of AI applications. By following these steps, you can effectively integrate Phi-1.5 into your AI projects, leveraging its capabilities to enhance your applications.

Conclusion: The Future of AI on Windows

Microsoft's Phi-2 model, alongside Nvidia's updates and the hybrid loop development pattern, is setting a new direction for AI on Windows. Developers now have tools that are not only powerful but also easier to use and more secure. This is leading to a future where AI can do more and be more helpful in everyday tasks.

Phi-2 and these new developments show that Microsoft is serious about making AI that's both smart and user-friendly. With these tools, anyone from hobbyists to professional developers can create AI applications that could change the way we work and live.
