Introduction to OpenLLM

In the rapidly evolving field of artificial intelligence, language models are pivotal tools for understanding and generating text across numerous applications. OpenLLM, an open-source framework, lets developers run and serve large language models efficiently. Combined with LangChain, a library designed to streamline the creation of language-based applications, OpenLLM's models can be plugged into LangChain's chains and tools. This article guides you through the essentials of using OpenLLM within the LangChain environment, from installation to building your first language application.

TL;DR Quick Summary

- Install Necessary Packages: Use pip to install LangChain and OpenLLM.

- Initialize the Model: Set up the language model in a Python script. The walkthrough below uses ChatOpenAI, which authenticates with an OpenAI API key.

- Configure LangChain: Integrate OpenLLM as the language model in LangChain.

- Build and Run Your Application: Create and execute a simple language application like a chatbot.

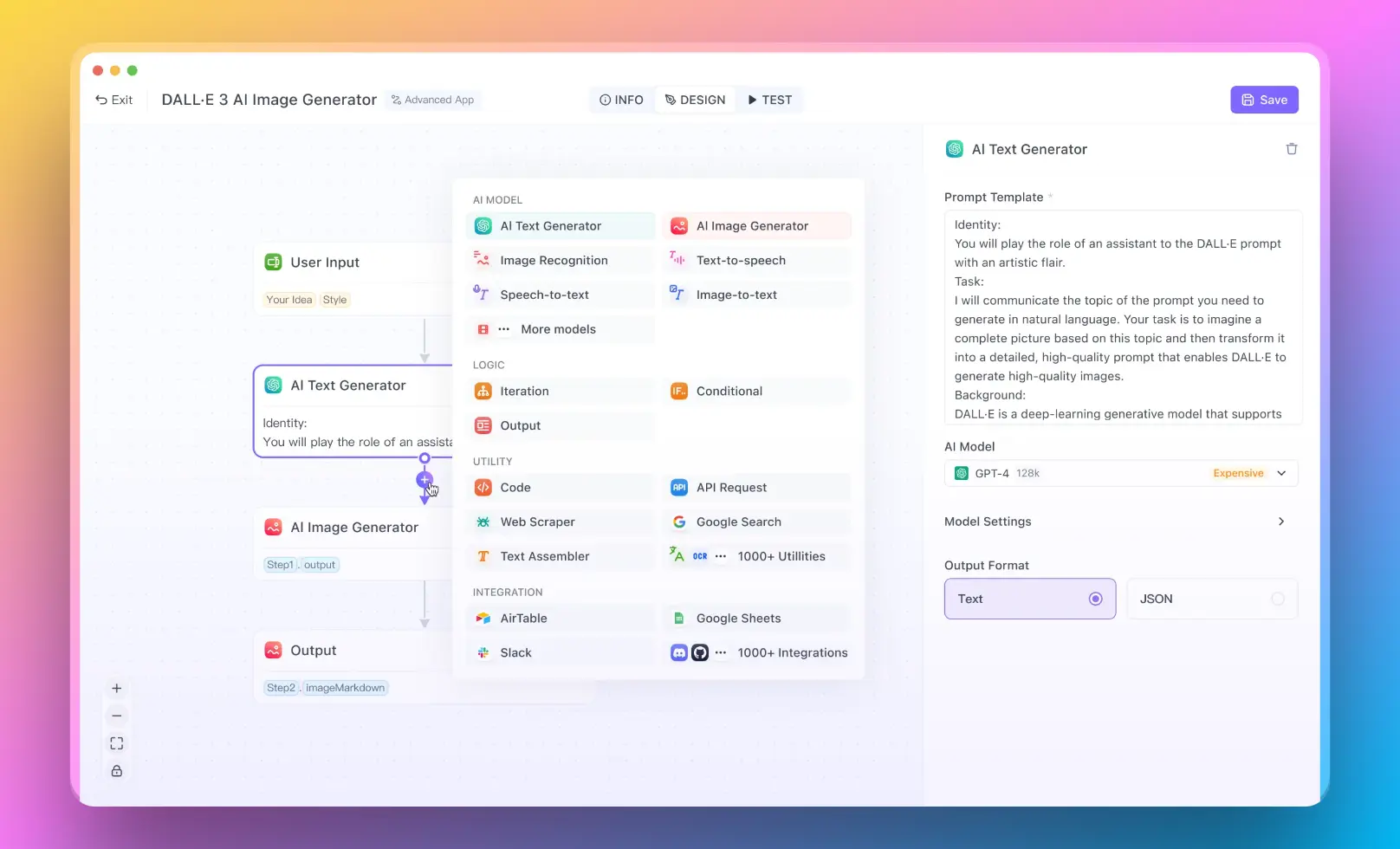

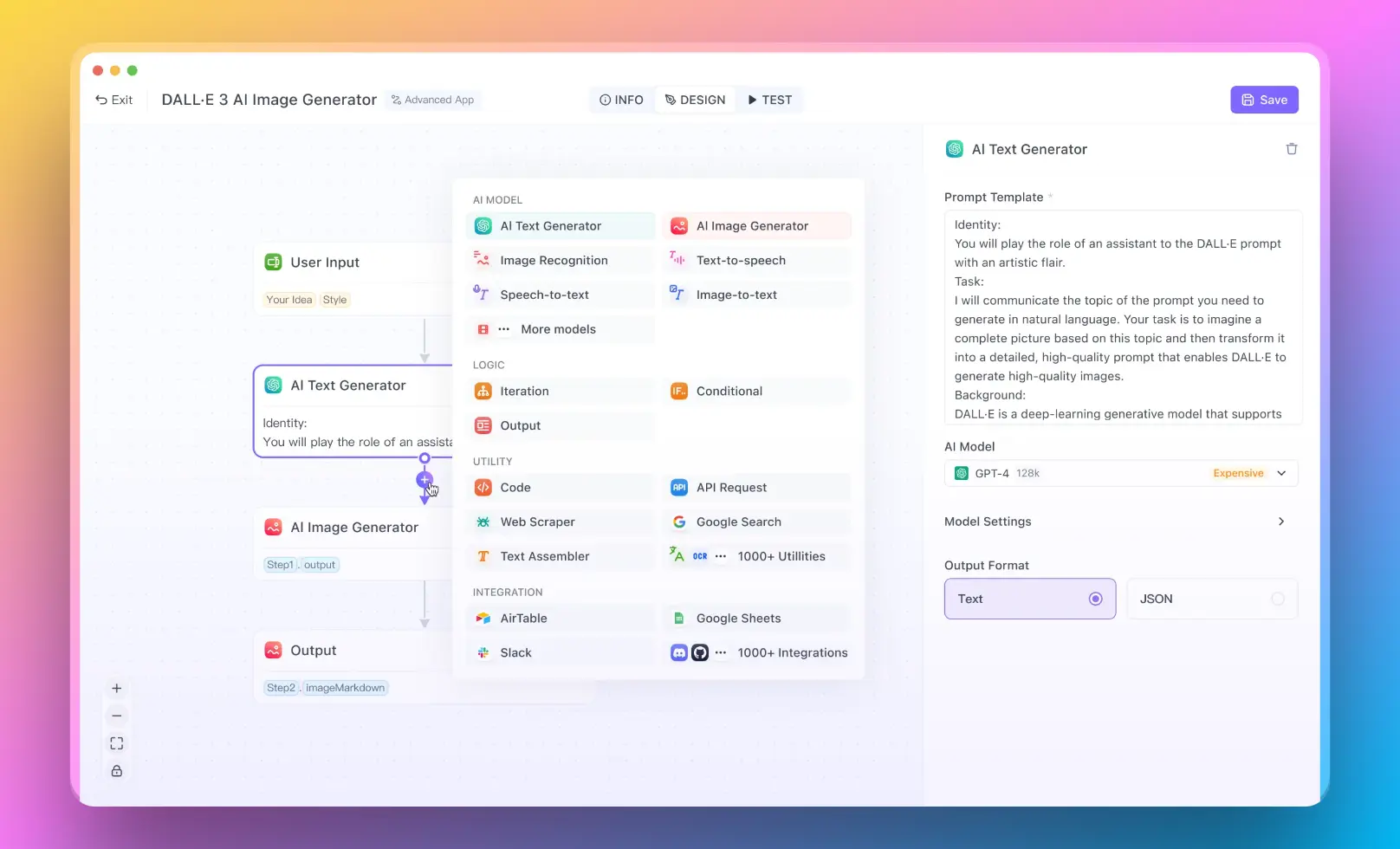

- If you want to build a RAG system without writing code, you can try Anakin AI, which lets you create AI apps with a no-code builder.

What is the Use of OpenLLM?

OpenLLM serves multiple purposes in the realm of AI and machine learning. Primarily, it is used to:

- Enhance text-based applications with features like text summarization, question answering, and language translation.

- Provide a foundation for developing custom language models tailored to specific needs or industries.

- Enable researchers and developers to experiment with and deploy large language models more efficiently.

How to Use ChatOpenAI in LangChain

LangChain is a library designed to streamline the development of language-based applications, particularly conversational ones. Its ChatOpenAI class wraps OpenAI's chat models behind LangChain's standard chat-model interface, so the same patterns (prompts, chains, message histories) work with it as with other supported models. This guide walks you through integrating ChatOpenAI within LangChain, from setting up your environment to running a chat session.

Step 1: Setting Up Your Environment

Before integrating ChatOpenAI, prepare your development environment. You need Python installed on your system; current LangChain releases no longer support Python 3.7, so use a recent Python 3 version and check the minimum listed on the langchain package's PyPI page. Setting up a virtual environment is also good practice for managing dependencies and avoiding conflicts with other Python projects.

```shell
# Create a virtual environment
python -m venv langchain-env

# Activate the virtual environment
# On Windows
langchain-env\Scripts\activate

# On Unix or macOS
source langchain-env/bin/activate
```
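As a quick sanity check after activation, you can confirm from Python that the interpreter belongs to the virtual environment. Inside an environment created with `python -m venv`, `sys.prefix` points at the environment while `sys.base_prefix` still points at the base installation. A minimal sketch; `in_virtualenv` is a hypothetical helper name, not part of LangChain:

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the running interpreter belongs to a venv.

    For environments created with `python -m venv`, sys.prefix is the
    environment directory and sys.base_prefix is the base Python install.
    """
    return sys.prefix != sys.base_prefix


print("Virtual environment active:", in_virtualenv())
```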

Step 2: Installing LangChain

With your environment ready, the next step is to install LangChain with pip, Python's package installer. ChatOpenAI lives in the separate langchain-openai integration package, so install both. Ensure your virtual environment is activated before running the following command:

```shell
pip install langchain langchain-openai
```

This command downloads and installs LangChain and its OpenAI integration, along with their dependencies.
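To verify the installation without importing the packages, you can query their installed versions with the standard library's `importlib.metadata` (Python 3.8+). A small sketch; `installed_version` is a hypothetical helper, and the names passed to it are the pip distribution names:

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a pip distribution, or None if missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Report the two packages installed in this step
for name in ("langchain", "langchain-openai"):
    found = installed_version(name)
    print(f"{name}: {found if found else 'not installed'}")
```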

Step 3: Importing ChatOpenAI

Once the packages are installed, you can import ChatOpenAI into your project. ChatOpenAI is LangChain's class for interacting with OpenAI's chat models, provided by the langchain-openai package:

```python
from langchain_openai import ChatOpenAI
```

This line makes the ChatOpenAI class available in your script, allowing you to use its functionality.

Step 4: Configuring ChatOpenAI

To use ChatOpenAI, you need to initialize it with your OpenAI API key. This key enables your application to communicate with OpenAI's API and use its language models. Here's how you can initialize ChatOpenAI:

```python
# Initialize ChatOpenAI with your OpenAI API key
chat_openai = ChatOpenAI(api_key="your_openai_api_key_here")
```

Replace "your_openai_api_key_here" with your actual OpenAI API key. This step is crucial for authenticating your requests to OpenAI's services.
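Hardcoding the key in source code risks leaking it, for example through version control. ChatOpenAI reads the `OPENAI_API_KEY` environment variable when `api_key` is not passed, so one option is to keep the key there. A minimal sketch of a helper that fails early with a clear message when the variable is missing; `get_openai_api_key` is a hypothetical name, not a LangChain function:

```python
import os


def get_openai_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Read the API key from the environment instead of hardcoding it."""
    key = os.environ.get(env_var)
    if not key:
        # Fail before any request is sent, with a hint on how to fix it
        raise RuntimeError(f"{env_var} is not set; export it before starting the app")
    return key
```

With the helper in place you could write `chat_openai = ChatOpenAI(api_key=get_openai_api_key())`, or simply `ChatOpenAI()` once the variable is exported.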

Step 5: Creating a Conversation

With ChatOpenAI configured, you're now ready to create a function that handles the conversation logic. This function will take user input, send it to the model, and display the model's response. Here's a simple example:

```python
def start_conversation():
    while True:
        user_input = input("You: ")
        if user_input.lower() == "quit":
            break
        # invoke() returns an AIMessage; its .content attribute holds the reply text
        response = chat_openai.invoke(user_input)
        print("AI:", response.content)
```

This function creates an interactive loop where the user can type messages, and the AI responds. Typing "quit" ends the conversation.
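The loop above sends each message on its own, so the model does not see earlier turns. LangChain chat models also accept a list of `(role, content)` tuples, which makes it straightforward to pass the whole history. A minimal sketch of that idea; `run_conversation` and its `reply` parameter are hypothetical, and the model call is injected so the loop can be tried with a stub:

```python
from typing import Callable, Iterable, List, Tuple

Message = Tuple[str, str]  # (role, content), e.g. ("human", "Hi")


def run_conversation(
    user_inputs: Iterable[str],
    reply: Callable[[List[Message]], str],
) -> List[Message]:
    """Feed user turns to `reply`, keeping the full history for context.

    `reply` receives the whole message list so the model can see earlier
    turns. Typing "quit" ends the conversation.
    """
    history: List[Message] = []
    for text in user_inputs:
        if text.lower() == "quit":
            break
        history.append(("human", text))
        answer = reply(history)
        history.append(("ai", answer))
        print("AI:", answer)
    return history
```

With ChatOpenAI, `reply` could be `lambda history: chat_openai.invoke(history).content`. Keeping the model call behind a parameter is a design choice that lets you test the loop without network access.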

Step 6: Running the Chat

To test your setup and see ChatOpenAI in action, simply call the start_conversation function:

```python
# Start the conversation
start_conversation()
```

Running this script in your terminal or command prompt will initiate a chat session where you can interact with the AI model.

Example: Building a Feedback Collection Bot

In this example, we'll build a feedback collection bot using LangChain and ChatOpenAI. The bot interacts with users, gathers their feedback on a service, and responds based on the sentiment of that feedback. The example uses only basic keyword matching for sentiment, but it illustrates the foundational steps for building conversational agents that perform more sophisticated sentiment analysis.


The feedback collection bot serves as a simple yet effective tool for businesses to engage with customers and gather valuable insights about their services. By analyzing the sentiment of the feedback, the bot can categorize responses and potentially escalate issues or highlight positive comments. This immediate interaction can enhance customer satisfaction and provide real-time data for service improvement.
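The categorize-and-escalate idea can be sketched independently of how each sentiment label is produced. A minimal sketch, assuming every piece of feedback has already been labeled; `summarize_feedback` and the label names are hypothetical:

```python
from collections import Counter
from typing import List, Tuple


def summarize_feedback(
    labeled: List[Tuple[str, str]],
) -> Tuple[Counter, List[str], List[str]]:
    """Tally sentiment labels and split out comments worth acting on.

    `labeled` holds (feedback_text, label) pairs with labels "positive",
    "negative" or "neutral". Returns (label counts, negative comments to
    escalate, positive comments to highlight).
    """
    counts = Counter(label for _, label in labeled)
    to_escalate = [text for text, label in labeled if label == "negative"]
    highlights = [text for text, label in labeled if label == "positive"]
    return counts, to_escalate, highlights
```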

Step-by-Step Implementation

Step 1: Setting Up the Bot

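First, ensure that LangChain and the langchain-openai package are installed and configured as described in the previous sections. Then you can begin coding the bot:

```python
from langchain_openai import ChatOpenAI

# Initialize ChatOpenAI with your OpenAI API key
chat_openai = ChatOpenAI(api_key="your_openai_api_key_here")
```

The keyword-based analysis in Step 3 does not call the model yet; initializing the client here lets you switch to model-based sentiment analysis later.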

Step 2: Creating the Interaction Logic

The core of the feedback bot is its ability to interact with users and analyze their input. Here’s how you can implement the interaction logic:

```python
def feedback_bot():
    print("Hello! How was your experience with our service today?")
    while True:
        feedback = input("Your feedback: ")
        if feedback.lower() == "quit":
            break
        analyze_feedback(feedback)
```

This function initiates a conversation and continuously collects user feedback until the user types "quit".

Step 3: Analyzing Feedback

For simplicity, this example uses a basic keyword search to determine sentiment. However, you can expand this by integrating more advanced natural language processing techniques available through LangChain and OpenAI.

```python
import re


def analyze_feedback(feedback):
    # Simple keyword-based sentiment analysis on whole words. Matching
    # substrings instead would find "happy" inside "unhappy" and answer
    # negative feedback with the positive reply.
    words = set(re.findall(r"[a-z']+", feedback.lower()))
    positive_keywords = {"great", "excellent", "good", "fantastic", "happy"}
    negative_keywords = {"bad", "poor", "terrible", "unhappy", "worst"}
    if words & positive_keywords:
        print("AI: We're thrilled to hear that! Thank you for your feedback.")
    elif words & negative_keywords:
        print("AI: We're sorry to hear that. We'll work on improving.")
    else:
        print("AI: Thank you for your feedback. We're always looking to improve.")
```

This function checks for the presence of certain keywords to gauge the sentiment of the feedback. Positive and negative keywords trigger corresponding responses.
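Returning a label instead of printing makes the classifier reusable, for example to count or escalate feedback. Two small tweaks also remove common keyword-matching mistakes: whole-word matching and simple negation handling. This is a sketch with a hypothetical `classify_feedback` name and a hand-picked negator list; it is still a heuristic, not real sentiment analysis:

```python
import re

POSITIVE = {"great", "excellent", "good", "fantastic", "happy"}
NEGATIVE = {"bad", "poor", "terrible", "unhappy", "worst"}
NEGATORS = {"not", "never", "no", "isn't", "wasn't"}  # illustrative, not exhaustive


def classify_feedback(feedback: str) -> str:
    """Return "positive", "negative" or "neutral" for a piece of feedback.

    Keywords are matched as whole words, and a negator directly before a
    keyword flips its polarity ("not good" counts as negative).
    """
    words = re.findall(r"[a-z']+", feedback.lower())
    score = 0
    for i, word in enumerate(words):
        polarity = 1 if word in POSITIVE else -1 if word in NEGATIVE else 0
        if polarity and i > 0 and words[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```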

Enhancing the Bot with Advanced Sentiment Analysis

To make the feedback bot more robust and insightful, you can integrate advanced sentiment analysis models. LangChain allows you to easily plug in different language models that can analyze text more deeply, understanding nuances and context better than simple keyword searches. For instance, you could use OpenAI's GPT models to interpret the sentiment more accurately and even generate more personalized responses based on the feedback context.
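One way to do this is to ask the model for a single-word label and normalize whatever it returns, since model output is free text. A minimal sketch under that assumption; the prompt wording, `llm_sentiment`, and its `complete` parameter are hypothetical, and the model call is injected so the parsing can be tested with a stub:

```python
from typing import Callable

LABELS = ("positive", "negative", "neutral")

PROMPT_TEMPLATE = (
    "Classify the sentiment of the customer feedback below. "
    "Answer with exactly one word: positive, negative or neutral.\n\n"
    "Feedback: {feedback}"
)


def llm_sentiment(feedback: str, complete: Callable[[str], str]) -> str:
    """Ask a language model for a sentiment label and normalize the answer.

    `complete` takes a prompt string and returns the model's text reply.
    Any answer whose first word is not a known label maps to "neutral".
    """
    answer = complete(PROMPT_TEMPLATE.format(feedback=feedback))
    words = answer.strip().lower().split()
    # Drop trailing punctuation such as "Negative." before comparing
    first = words[0].strip(".,!:;\"'") if words else ""
    return first if first in LABELS else "neutral"
```

With ChatOpenAI, `complete` could be `lambda prompt: chat_openai.invoke(prompt).content`.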

Cannot Run OpenLLM? Check Your Python Version

OpenLLM and LangChain each declare a minimum supported Python version, and recent releases of both no longer support Python 3.7. Make sure your Python environment meets the requirement listed on each package's PyPI page to avoid compatibility issues when working with OpenLLM and LangChain.

Checking Your Python Version

You can check your current Python version by running the following command in your terminal:

```shell
python --version
```

If your version is below the minimum required by the releases you are installing, update Python before using OpenLLM.
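The same check can run inside a script, which is handy at the top of an app that other people will launch. A small sketch; `python_is_at_least` is a hypothetical helper, and the `(3, 9)` minimum is a placeholder you should replace with the value your OpenLLM and LangChain releases actually declare:

```python
import sys
from typing import Tuple


def python_is_at_least(minimum: Tuple[int, int]) -> bool:
    """Return True if the running interpreter is `minimum` or newer.

    Tuples compare element by element, so (3, 9) accepts 3.9, 3.10 and later.
    """
    return sys.version_info[:2] >= minimum


# Placeholder minimum: check the package's PyPI page for the real value
MINIMUM_PYTHON = (3, 9)

if not python_is_at_least(MINIMUM_PYTHON):
    print("Please upgrade Python to", ".".join(map(str, MINIMUM_PYTHON)), "or newer")
```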

Conclusion

OpenLLM in LangChain offers a powerful toolkit for developers looking to leverage large language models in their applications. By following the steps outlined above, you can begin integrating OpenLLM into your projects, enhancing them with advanced language processing capabilities. Whether you are building a chatbot, a text summarizer, or any other language-driven application, OpenLLM and LangChain can provide the tools you need to succeed.