The digital era thrives on innovation, and at its heart lies the quest for intelligent automation. Enter the realm of language models, where Mistral-Medium and GPT-3.5 Turbo emerge as significant players. Their mastery of language and understanding is not just a feat of programming; it's a ballet of algorithms and data, each step calibrated to mimic human cognition.

Is Mistral-medium the Superior Contender to GPT-3.5-Turbo?

- In short, yes! Mistral-medium is an excellent alternative to gpt-3.5-turbo. Its output quality and robust performance at a reasonable cost have taken AI app developers by storm.

- However, OpenAI has reduced the price of gpt-3.5-turbo, which makes the mistral-medium model less attractive on cost.

So, let's compare the pros and cons of the two models and evaluate their real-life performance. We will also compare their pricing in detail.

Comparing Mistral-Medium VS GPT-3.5-Turbo: the Performance

Comparison Methodology

This comparative study conducted by Reddit user @Kinniken was designed not only to rank the models but also to shed light on the nuances in their knowledge bases, reasoning proficiency, and ability to distinguish between fact and fiction, which is critical in an era where AI-generated content is ubiquitous.

The methodology deployed to evaluate and compare the AI models—Mistral-small, Mistral-medium, GPT-3.5-Turbo, and GPT-4—involved a structured approach across three distinct areas: general knowledge, reasoning capabilities, and handling of hallucinations.

- General Knowledge: Both models perform well, with Mistral-Medium scoring slightly higher than GPT-3.5 Turbo (8 vs 7.5 out of 10), while GPT-4 leads overall.

- Reasoning: GPT-4 shows occasional superior context recognition, suggesting a higher complexity in understanding.

- Handling Hallucinations: Mistral-Medium fares better, pointing to a nuanced approach in discerning fiction from fact.

Each model received identical queries, through direct API calls for the Mistral variants and through the playground for the GPT versions. Using the same queries was meant to create a level playing field, so that differences in performance reflect the models' capabilities rather than differing query conditions.
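For readers who want to repeat a comparison like this, here is a minimal sketch of sending the same prompt to both models with identical parameters. It is a hypothetical, fully API-based variant (the original test used the playground for the GPT models). The endpoint URLs, the `mistral-medium` model ID, the environment variable names, and the fixed `temperature` of 0 are all assumptions to check against each provider's current documentation; both providers are assumed to accept an OpenAI-style chat completions request.

```python
import json
import os
import urllib.request

# Assumed endpoints: both providers are assumed to expose an OpenAI-style
# chat completions API. Verify URLs and model IDs against current docs.
ENDPOINTS = {
    "mistral-medium": "https://api.mistral.ai/v1/chat/completions",
    "gpt-3.5-turbo": "https://api.openai.com/v1/chat/completions",
}

# Hypothetical environment variable names holding each provider's API key.
API_KEY_VARS = {
    "mistral-medium": "MISTRAL_API_KEY",
    "gpt-3.5-turbo": "OPENAI_API_KEY",
}


def build_payload(model: str, prompt: str, temperature: float = 0.0) -> dict:
    """Build the request body; only the model name differs between providers."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # fixed so sampling settings match (assumption)
    }


def ask(model: str, prompt: str) -> str:
    """POST the prompt to the model's endpoint and return the reply text.

    Requires network access and the matching API key in the environment.
    """
    body = json.dumps(build_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        ENDPOINTS[model],
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ[API_KEY_VARS[model]]}",
        },
    )
    with urllib.request.urlopen(request) as response:
        data = json.load(response)
    return data["choices"][0]["message"]["content"]
```

Usage would be a loop such as `for model in ENDPOINTS: print(model, ask(model, question))`. Keeping the payload builder separate makes it easy to confirm that nothing but the model name changes between calls.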

Performance in General Knowledge

In assessing the prowess of AI models in general knowledge, the data presents a spectrum of capabilities:

| Model | Score (/10) |
|---|---|
| Mistral-small | 4.5 |
| Mistral-medium | 8 |
| GPT-3.5-Turbo | 7.5 |
| GPT-4 | 9 |

- Mistral-small shows a modest grasp, often tripping on more complex queries.

- Mistral-medium rises significantly, suggesting a robust knowledge base.

- GPT-3.5-Turbo performs competitively, but nuances in data retrieval are apparent.

- GPT-4 exhibits a superior command, deftly navigating diverse topics.

The strengths and weaknesses across models are stark: Mistral-small, while weaker overall, demonstrates a foundational knowledge layer. Mistral-medium's leap in performance suggests an expanded dataset or improved processing. GPT-3.5-Turbo's results reflect a nuanced understanding of queries but reveal gaps in thoroughness. GPT-4's lead points to a refined comprehension and retrieval system that handles intricate details with precision.

Reasoning Capabilities

The reasoning ability of each model was quantified as follows:

| Model | Average Score (/11) |
|---|---|
| Mistral-small | 6.33 |
| Mistral-medium | 7 |
| GPT-3.5-Turbo | 1 |
| GPT-4 | 7.67 |

GPT-4's adeptness in context recognition is apparent, outshining the other models in applying logical structures to problems. Its sensitivity to the wording of questions plays a pivotal role, suggesting a nuanced understanding of language and its implications for logical deduction.

Here is the original testing result posted by Reddit user @Kinniken:

Handling Hallucinations

In the realm of hallucinations—responses to non-factual prompts—the models varied:

| Model | Score (/10) |
|---|---|
| Mistral-small | 4.5 |
| Mistral-medium | 3 |
| GPT-3.5-Turbo | 0 |
| GPT-4 | 6 |

- Mistral-small remarkably shows restraint, often avoiding fabrication.

- Mistral-medium struggles, occasionally succumbing to fabrications.

- GPT-3.5-Turbo appears most susceptible, failing to identify fictitious content.

- GPT-4 balances creativity with discernment, seldom generating unfounded content.

Mistral-small's performance is notable, indicating a potential safeguard against generating misleading content—a significant consideration for content creators aiming for accuracy. This capability to discern fact from fiction impacts the reliability and trustworthiness of content generated by AI, positioning certain models as more suitable for tasks requiring factual stringency.

Here is the original testing result posted by Reddit user @Kinniken:

Who is the Better Model? Mistral-Medium or GPT-3.5-Turbo?

In the intricate dance of AI capabilities, GPT-3.5 Turbo and Mistral-Medium have both demonstrated commendable performance, each in different areas.

- GPT-3.5 Turbo is competitive in general knowledge, scoring 7.5 out of 10 against Mistral-Medium's 8.

- Mistral-Medium, however, is much better at handling hallucinations (3 vs 0 out of 10), suggesting a more discerning approach to content generation.

These insights are not merely academic; they can guide users in matching AI capabilities to their specific needs, whether that means depth of knowledge or greater accuracy in content generation. For general users who simply want a GPT-3.5 alternative, though, the answer is clear: Mistral-medium is a strong alternative to gpt-3.5-turbo.
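Because the three tables use different scales (/10, /11, /10), it can help to put them side by side as percentages. The sketch below does that using only the scores from the tables above. Averaging the three categories with equal weight is an assumption for illustration, not part of the original study; a reader who cares more about hallucinations than trivia would weight them differently.

```python
# Scores copied from the three tables above, paired with each scale's maximum.
SCORES = {
    "Mistral-small": {"knowledge": (4.5, 10), "reasoning": (6.33, 11), "hallucinations": (4.5, 10)},
    "Mistral-medium": {"knowledge": (8, 10), "reasoning": (7, 11), "hallucinations": (3, 10)},
    "GPT-3.5-Turbo": {"knowledge": (7.5, 10), "reasoning": (1, 11), "hallucinations": (0, 10)},
    "GPT-4": {"knowledge": (9, 10), "reasoning": (7.67, 11), "hallucinations": (6, 10)},
}


def percent_scores(model: str) -> dict:
    """Express each category score as a percentage of its scale's maximum."""
    return {cat: 100 * score / maximum for cat, (score, maximum) in SCORES[model].items()}


def mean_percent(model: str) -> float:
    """Unweighted mean of the category percentages (equal weighting is an assumption)."""
    pcts = percent_scores(model)
    return sum(pcts.values()) / len(pcts)


def rank_models() -> list:
    """Return (model, mean percentage) pairs, best first."""
    return sorted(((m, mean_percent(m)) for m in SCORES), key=lambda pair: pair[1], reverse=True)


for model, pct in rank_models():
    print(f"{model:15} {pct:5.1f}%")
```

With equal weights this ranks GPT-4 first (73.2%), then Mistral-medium (57.9%), Mistral-small (49.2%), and GPT-3.5-Turbo (28.0%), largely because of GPT-3.5-Turbo's low reasoning and hallucination scores.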

Comparing Mistral-Medium VS GPT-3.5-Turbo: the Price

Based on the latest available data, here is the table for Mistral-medium and GPT-3.5-turbo pricing comparison:

| Model | Input Cost per 1K Tokens (USD) | Output Cost per 1K Tokens (USD) |
|---|---|---|
| Mistral-medium | $0.0027 | $0.0081 |
| GPT-3.5-turbo (Standard Context) | $0.0015 | $0.0020 |
| GPT-3.5-turbo (Expanded Context) | $0.0030 | $0.0040 |

Source: Mistral AI Docs | TechCrunch

Key Takeaways:

The pricing for Mistral-medium, as outlined in the Mistral AI documentation, is €2.5 for input and €7.5 for output per 1 million tokens.

- In USD, this translates to approximately $0.0027 per 1,000 input tokens and $0.0081 per 1,000 output tokens.
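The conversion from euros per million tokens to dollars per thousand tokens is simple arithmetic: divide by 1,000, then multiply by the exchange rate. The sketch below assumes a rate of 1.08 USD per EUR, which is the rate implied by the figures above; it is an assumption, so check a current rate before relying on the result.

```python
# Assumed exchange rate (USD per EUR), implied by the article's figures.
EUR_TO_USD = 1.08


def per_1k_usd(eur_per_million: float, rate: float = EUR_TO_USD) -> float:
    """Convert a price in EUR per 1M tokens to USD per 1K tokens."""
    return eur_per_million / 1000 * rate


input_usd = per_1k_usd(2.5)   # Mistral-medium input price, EUR per 1M tokens
output_usd = per_1k_usd(7.5)  # Mistral-medium output price, EUR per 1M tokens
print(f"input ${input_usd:.4f}/1K, output ${output_usd:.4f}/1K")
```

This prints `input $0.0027/1K, output $0.0081/1K`, matching the table; passing a different `rate` shows how sensitive the USD figures are to currency movements.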

On the other hand, GPT-3.5-turbo now stands at $0.0015 per 1,000 input tokens and $0.002 per 1,000 output tokens.

- The input price of the original GPT-3.5-turbo model (not the version with the expanded context window) has been reduced by 25%.

- This is a significant reduction from the previous pricing, and it makes GPT-3.5-turbo cheaper than Mistral-medium for both input and output tokens, especially for applications requiring substantial text processing.

- Additionally, a new version of GPT-3.5-turbo with a 16,000-token context window is offered at $0.003 per 1,000 input tokens and $0.004 per 1,000 output tokens.
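Per-token prices are easier to judge against a concrete workload. The sketch below estimates the cost of a hypothetical month with 10 million input tokens and 2 million output tokens, using the per-1K prices from the pricing table above. The workload size and input/output ratio are illustrative assumptions; plug in your own traffic numbers.

```python
# Per-1K-token prices (input, output) in USD, copied from the pricing table above.
PRICES_PER_1K = {
    "mistral-medium": (0.0027, 0.0081),
    "gpt-3.5-turbo (standard context)": (0.0015, 0.0020),
    "gpt-3.5-turbo (expanded context)": (0.0030, 0.0040),
}


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of processing the given token counts with a model."""
    input_price, output_price = PRICES_PER_1K[model]
    return input_tokens / 1000 * input_price + output_tokens / 1000 * output_price


# Hypothetical monthly workload: 10M input tokens, 2M output tokens.
for model in PRICES_PER_1K:
    print(f"{model:34} ${workload_cost(model, 10_000_000, 2_000_000):.2f}")
```

For this workload the estimates are $43.20 for Mistral-medium, $19.00 for standard-context GPT-3.5-turbo, and $38.00 for the expanded-context version. Because Mistral-medium's output price is the highest of the three, output-heavy applications widen the gap further.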

However, there is a way to use Mistral AI's models at a lower cost: third-party API providers that serve Mistral models!

We have a more detailed article comparing Mistral API providers:

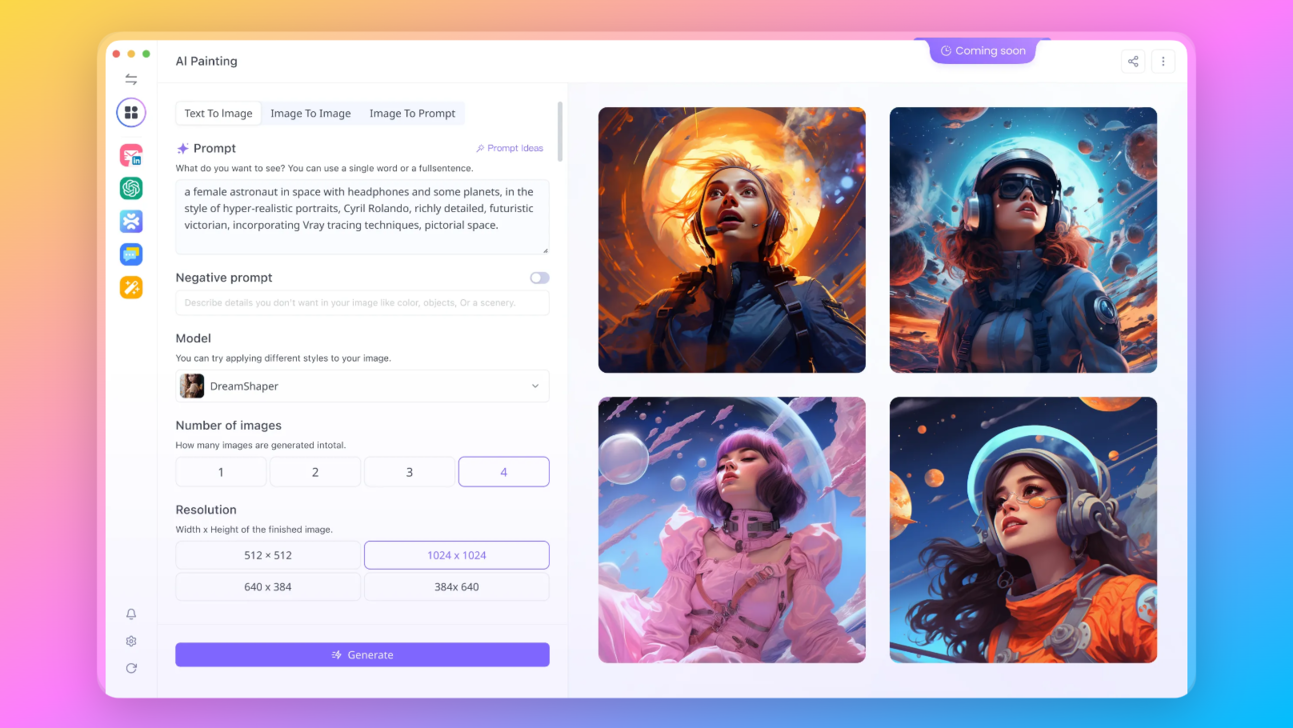

How to Try Mistral AI's Models Online: with Anakin AI

One of the easiest ways to test out Mistral AI's latest models is using the API provided by Anakin AI:

Anakin AI is not just an alternative; it's a gateway to a diverse array of AI models, each with unique capabilities and advantages. Imagine having the power to tailor your AI experience, selecting from a suite of models to perfectly align with your project's needs.

Here are some of the other models, both proprietary and open source, that Anakin AI supports:

- GPT-4: Boasting an impressive context window of up to 128K tokens, this model takes deep learning to new heights.

- Claude-2.1 and Claude Instant: These variants provide nuanced understanding and responses, tailored to different interaction speeds.

- Google Gemini Pro: A model designed for precision and depth in information retrieval.

- Mistral 7B and Mixtral 8x7B: Mistral AI's open-weight models, offering a balance of generative capability and efficiency.

Your vision isn't limited to text, and neither should your AI be. With Anakin AI, you gain access to state-of-the-art image generation models such as:

- DALL-E 3: Create stunning, high-resolution images from textual descriptions.

- Stable Diffusion: Generate images with a unique artistic flair, perfect for creative projects.

Interested in trying Mistral's Latest Models? Try it now at Anakin.AI!

Conclusion

The contest between Mistral-Medium and GPT-3.5 Turbo unfolds as a testament to the rapid advancements in AI, where each model shines in its capacity to process and generate language with human-like finesse. As this landscape continues to evolve, the ultimate choice rests in the hands of users, who must weigh performance against price, seeking a harmony that best resonates with their vision for the future of intelligent automation.