In this tutorial, we build a Retrieval Augmented Generation (RAG) application with the Llama 3 language model by Meta AI, running locally through Ollama. The app lets users chat with a webpage: it retrieves the passages relevant to a question and uses them to generate an answer. We walk through setting up the development environment, loading and processing webpage data, creating embeddings and a vector store, and implementing the RAG chain.

Article Summary

- Set up the Streamlit app and install required libraries

- Load and process webpage data using WebBaseLoader and RecursiveCharacterTextSplitter

- Create Ollama embeddings and vector store using OllamaEmbeddings and Chroma

- Implement the RAG chain to retrieve relevant information and generate responses

What is Llama 3?

Llama 3 is a family of open-weight large language models released by Meta AI in April 2024.

- It can follow complex queries and hold contextually relevant conversations across a wide range of topics.

- Its smaller variants are efficient enough to run on a local machine, which makes it a practical choice for the app built in this tutorial.

What is RAG?

Retrieval Augmented Generation (RAG) is a technique that combines information retrieval and language generation to enhance the performance of question-answering systems.

- In plain English, RAG allows an AI model to retrieve relevant information from a knowledge base or document and use that information to generate more accurate and contextually appropriate responses to user queries.

- Because the answer is grounded in retrieved text, RAG lets chatbots and question-answering applications respond about content the model was never trained on, such as the webpage used in this tutorial.
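The retrieve-then-generate idea can be sketched without any model at all. The following is a minimal illustration, assuming a toy word-overlap score in place of real embeddings; the helper names (`tokenize`, `retrieve`, `build_prompt`) and the sample passages are hypothetical and are not part of the app built below.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, passages, k=1):
    """Return the k passages that share the most words with the question."""
    q_words = tokenize(question)
    ranked = sorted(passages, key=lambda p: len(q_words & tokenize(p)), reverse=True)
    return ranked[:k]

def build_prompt(question, retrieved):
    """Place the retrieved passages in front of the question."""
    context = "\n\n".join(retrieved)
    return f"Context:\n{context}\n\nQuestion: {question}"

# Toy knowledge base (illustrative sentences only).
passages = [
    "Streamlit turns Python scripts into web apps.",
    "Chroma is a vector store for embeddings.",
    "Ollama runs language models on a local machine.",
]
question = "Which tool runs language models locally?"
top = retrieve(question, passages, k=1)
prompt = build_prompt(question, top)
print(prompt)
```

In a real RAG system the word-overlap score is replaced by embedding similarity, and the prompt is sent to a language model instead of being printed.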

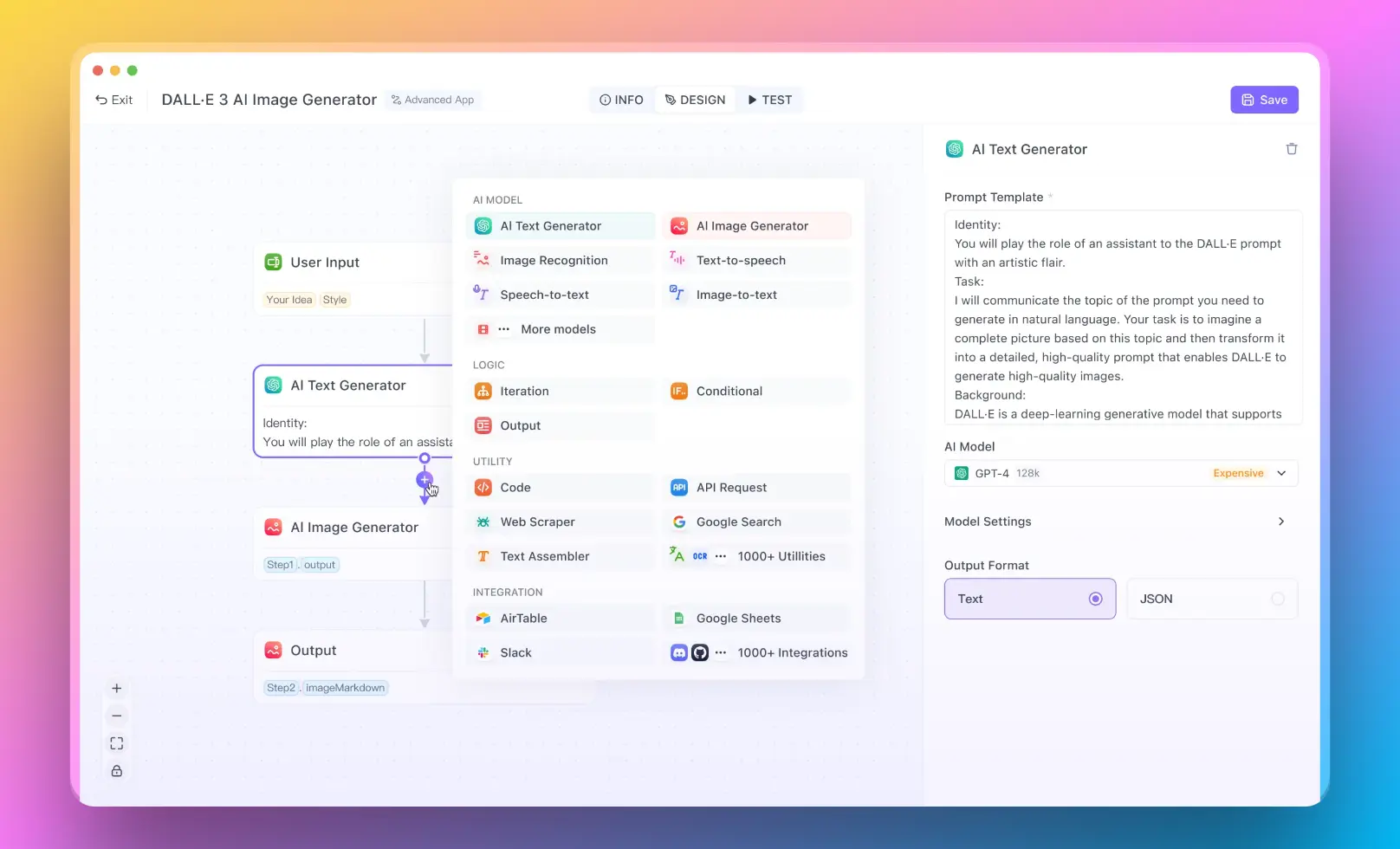

With that background, let's get started!

Prerequisites to Run a Local Llama 3 RAG App

Before we begin, make sure you have the following prerequisites installed:

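- Python 3.9 or higher (current Streamlit and LangChain releases no longer support Python 3.7)

- Streamlit

- ollama (the Python client; the Ollama application itself must also be installed and running, with the model downloaded via ollama pull llama3)

- langchain

- langchain_community

- langchain-text-splitters (provides RecursiveCharacterTextSplitter)

- chromadb (required by the Chroma vector store)

- beautifulsoup4 (required by WebBaseLoader)

You can install the required libraries by running the following command:

pip install streamlit ollama langchain langchain_community langchain-text-splitters chromadb beautifulsoup4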
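Before writing the app, it can help to confirm that the libraries are importable from the Python environment you intend to use. The sketch below assumes the import names listed in `REQUIRED_MODULES`; note that it also checks `chromadb` and `bs4` (the import name of beautifulsoup4), which the Chroma vector store and WebBaseLoader rely on. The function name `missing_modules` is hypothetical.

```python
from importlib.util import find_spec

# Import names can differ from pip package names (beautifulsoup4 -> bs4).
REQUIRED_MODULES = [
    "streamlit",
    "ollama",
    "langchain",
    "langchain_community",
    "chromadb",
    "bs4",
]

def missing_modules(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if find_spec(name) is None]

if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    print("Missing:", ", ".join(missing) if missing else "none")
```

Any name reported as missing can be installed with pip before continuing.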

Step-by-Step Guide to Run Your Own RAG App Locally with Llama-3

Step 1: Set Up the Streamlit App

First, let's set up the basic structure of our Streamlit app. Create a new Python file named app.py and add the following code:

import streamlit as st

import ollama

from langchain_text_splitters import RecursiveCharacterTextSplitter

from langchain_community.document_loaders import WebBaseLoader

from langchain_community.vectorstores import Chroma

from langchain_community.embeddings import OllamaEmbeddings

st.title("Chat with Webpage 🌐")

st.caption("This app allows you to chat with a webpage using local Llama-3 and RAG")

# Get the webpage URL from the user

webpage_url = st.text_input("Enter Webpage URL", type="default")

This code sets up the basic structure of the Streamlit app, including the title, caption, and an input field for the user to enter the webpage URL.

Step 2: Load and Process the Webpage Data

Next, we need to load the data from the specified webpage and process it for further use. Add the following code to app.py:

if webpage_url:

    # 1. Load the data

    loader = WebBaseLoader(webpage_url)

    docs = loader.load()

    text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=10)

    splits = text_splitter.split_documents(docs)

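Here, we use WebBaseLoader from langchain_community to fetch the page and load its text as documents. We then split those documents with RecursiveCharacterTextSplitter into chunks of at most 500 characters, with up to 10 characters of overlap between neighboring chunks, so that each chunk is small enough to embed and retrieve on its own.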
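To see what the chunk_size and chunk_overlap parameters control, here is a simplified fixed-window splitter. It is an illustration only, not the algorithm RecursiveCharacterTextSplitter uses: the real splitter prefers to break on separators such as paragraph breaks, newlines, and spaces before falling back to raw characters. The function name `split_fixed` is hypothetical.

```python
def split_fixed(text, chunk_size, chunk_overlap):
    """Split text into windows of chunk_size characters that overlap by chunk_overlap."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
        start += step
    return chunks

chunks = split_fixed("abcdefghij", chunk_size=4, chunk_overlap=1)
print(chunks)
```

Each chunk starts with the last character of the previous one, which is the role the overlap plays: text that straddles a boundary still appears intact in at least one chunk when the overlap is large enough.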

Step 3: Create Ollama Embeddings and Vector Store

To enable efficient retrieval of relevant information from the webpage, we need to create embeddings and a vector store. Add the following code, still inside the if webpage_url: block:

    # 2. Create Ollama embeddings and vector store

    embeddings = OllamaEmbeddings(model="llama3")

    vectorstore = Chroma.from_documents(documents=splits, embedding=embeddings)

We create Ollama embeddings using the OllamaEmbeddings class from langchain_community and specify the llama3 model. Then, we create a vector store using the Chroma class, passing the split documents and embeddings.
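Conceptually, a vector store keeps one vector per chunk and answers a query by returning the chunks whose vectors are closest to the query vector. The sketch below shows that idea in plain Python, assuming a bag-of-words count as a hypothetical stand-in for a real embedding model and cosine similarity as the distance measure; `toy_embed` and `ToyVectorStore` are illustrative names and do not reflect how Chroma is implemented.

```python
import math
from collections import Counter

def toy_embed(text):
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class ToyVectorStore:
    """Keeps (vector, text) pairs in memory and searches them by cosine similarity."""

    def __init__(self, texts):
        self.items = [(toy_embed(t), t) for t in texts]

    def search(self, query, k=1):
        q = toy_embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]

store = ToyVectorStore([
    "llama 3 is a language model from meta",
    "chroma stores embedding vectors",
    "streamlit builds web apps in python",
])
hits = store.search("which store keeps embedding vectors", k=1)
print(hits)
```

A learned embedding model improves on this by placing texts with similar meaning close together even when they share no words.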

Step 4: Define the Ollama Llama-3 Model Function

Now, let's define a function that uses the Llama 3 model served by Ollama to generate a response from the user's question and the relevant context. Add the following code, still inside the if webpage_url: block:

    # 3. Call Ollama Llama3 model

    def ollama_llm(question, context):

        formatted_prompt = f"Question: {question}\n\nContext: {context}"

        response = ollama.chat(model='llama3', messages=[{'role': 'user', 'content': formatted_prompt}])

        return response['message']['content']

This function takes the user's question and the relevant context as input. It formats the prompt by combining the question and context, and then uses the ollama.chat function to generate a response using the Llama-3 model.
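One possible refinement, offered as an assumption and not as part of the original app, is to add a system message that tells the model to stay within the retrieved context. The sketch below only builds the message list, so it runs without Ollama; the function name `build_messages` and the instruction wording are hypothetical.

```python
def build_messages(question, context):
    """Return a chat message list: a system instruction plus the user turn with context."""
    system = (
        "Answer using only the provided context. "
        "If the context does not contain the answer, say you do not know."
    )
    user = f"Context:\n{context}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]

messages = build_messages("What is Chroma?", "Chroma is a vector store.")
for message in messages:
    print(message["role"], "->", message["content"])
```

The resulting list could be passed as the messages argument of ollama.chat in place of the single user message used in ollama_llm.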

Step 5: Set Up the RAG Chain

To retrieve relevant information from the vector store based on the user's question, we need to set up the RAG (Retrieval Augmented Generation) chain. Add the following code, still inside the if webpage_url: block:

    # 4. RAG Setup

    retriever = vectorstore.as_retriever()

    def combine_docs(docs):

        return "\n\n".join(doc.page_content for doc in docs)

    def rag_chain(question):

        retrieved_docs = retriever.invoke(question)

        formatted_context = combine_docs(retrieved_docs)

        return ollama_llm(question, formatted_context)

    st.success(f"Loaded {webpage_url} successfully!")

Here, we create a retriever from the vector store using the as_retriever method. We define a helper function combine_docs to combine the retrieved documents into a single formatted context string. The rag_chain function takes the user's question, retrieves relevant documents using the retriever, combines the documents into a formatted context, and passes the question and context to the ollama_llm function to generate a response.
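The flow of the chain (retrieve, combine, generate) can be exercised without a vector store or a model by passing the two dependencies in as functions. This is a sketch under that assumption: `Doc`, `make_rag_chain`, and the two fake callables are hypothetical test helpers, while `combine_docs` mirrors the helper defined above.

```python
from dataclasses import dataclass

@dataclass
class Doc:
    """Hypothetical stand-in for a LangChain document; only page_content is used."""
    page_content: str

def combine_docs(docs):
    """Join the text of the retrieved documents into one context string."""
    return "\n\n".join(doc.page_content for doc in docs)

def make_rag_chain(retrieve, generate):
    """Build a question -> answer function from a retriever and a generator."""
    def rag_chain(question):
        context = combine_docs(retrieve(question))
        return generate(question, context)
    return rag_chain

def fake_retrieve(question):
    """Fake retriever: always returns the same two documents."""
    return [Doc("First chunk."), Doc("Second chunk.")]

def fake_generate(question, context):
    """Fake generator: echoes its inputs instead of calling a model."""
    return f"Q={question} | C={context}"

chain = make_rag_chain(fake_retrieve, fake_generate)
answer = chain("What does the page say?")
print(answer)
```

Swapping the fakes for retriever.invoke and ollama_llm gives the same behavior as the rag_chain function in the app.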

Step 6: Implement the Chat Functionality

Finally, let's implement the chat functionality in our Streamlit app. Add the following code, still inside the if webpage_url: block:

    # Ask a question about the webpage

    prompt = st.text_input("Ask any question about the webpage")

    # Chat with the webpage

    if prompt:

        result = rag_chain(prompt)

        st.write(result)

This code adds an input field for the user to ask a question about the webpage. When the user enters a question and submits it, the rag_chain function is called with the user's question. The generated response is then displayed using st.write.
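One thing to be aware of: Streamlit reruns the whole script on every interaction, so as written the page is reloaded and re-embedded each time a new question is submitted. A common remedy is to memoize the expensive build step, keyed by URL. The sketch below illustrates the idea with functools.lru_cache purely so that it runs without Streamlit; inside app.py that decorator would likely not survive a rerun because the function is redefined each time, which is the situation Streamlit's st.cache_resource decorator is meant for. The function `build_index` and its return value are placeholders.

```python
from functools import lru_cache

build_calls = []  # records every real (uncached) build, for demonstration

@lru_cache(maxsize=8)
def build_index(url):
    """Placeholder for the expensive load/split/embed work, keyed by URL."""
    build_calls.append(url)
    return {"url": url, "chunks": ["..."]}

first = build_index("https://example.com")
second = build_index("https://example.com")  # same URL: served from the cache
other = build_index("https://example.org")   # different URL: built again
print(build_calls)
```

With caching in place, only a change of URL triggers a rebuild, while follow-up questions about the same page reuse the existing index.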

Final Step: Time to Run the App!

To run the app, save the app.py file and open a terminal in the same directory. Run the following command:

streamlit run app.py

This starts the Streamlit app and prints a local URL (http://localhost:8501 by default) that you can open in your web browser.
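If the app loads but every question fails with a connection error, one likely cause is that the Ollama server is not running. The following sketch checks whether something answers at Ollama's default local address, http://localhost:11434; the function name `ollama_is_up` is hypothetical, and the address is an assumption that only holds if you have not changed Ollama's host settings.

```python
import urllib.error
import urllib.request

def ollama_is_up(base_url="http://localhost:11434", timeout=2.0):
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status.
        return False

if __name__ == "__main__":
    print("Ollama reachable:", ollama_is_up())
```

If this reports False, start the Ollama application (or run ollama serve) and try again.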

Conclusion

Congratulations! You have successfully built a RAG app with Llama-3 running locally. The app allows users to chat with a webpage by leveraging the power of local Llama-3 and RAG techniques. Users can enter a webpage URL, and the app will load and process the webpage data, create embeddings and a vector store, and use the RAG chain to retrieve relevant information and generate responses based on the user's questions.

Feel free to explore and enhance the app further by adding more features, improving the user interface, or integrating additional functionality as needed.
