Retrieval Augmented Generation (RAG) is a powerful technique that combines information retrieval with language models so that responses are grounded in relevant external data. LangChain, a popular Python library for building applications with large language models (LLMs), provides ready-made components for implementing RAG in your projects. In this article, we'll explore what RAG is, how it works in LangChain, and how you can use it to build intelligent chatbots and question-answering systems.

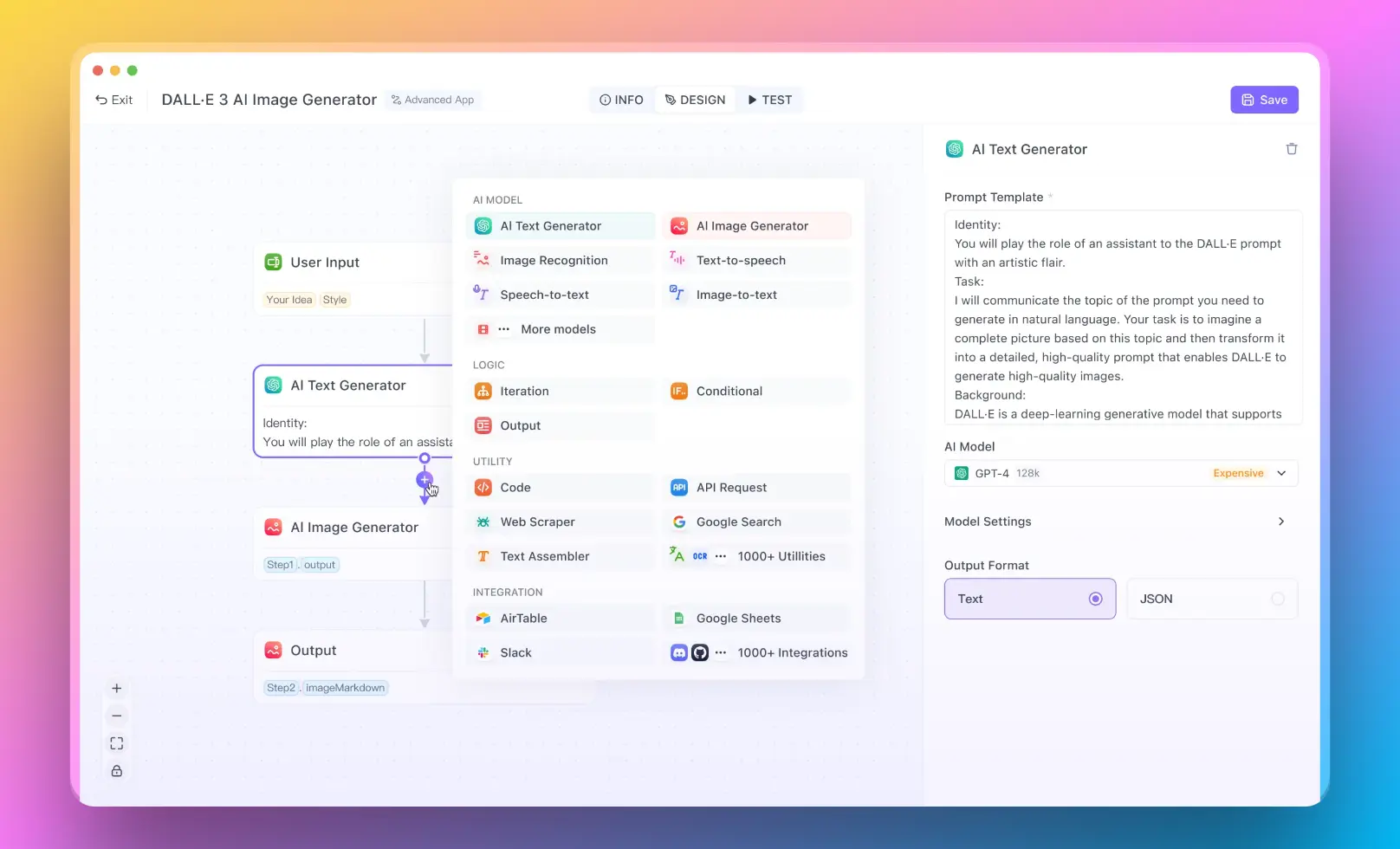

- If you want to run a RAG system without any coding experience, you can try Anakin AI, which lets you create AI apps with a no-code builder.

What is RAG in LangChain?

RAG in LangChain refers to Retrieval Augmented Generation, a technique that enhances LLMs by supplying them with relevant information from external sources. Instead of relying only on what the model learned during training, the application retrieves relevant passages from a given set of documents or data sources and passes them to the model as context, which helps it generate more accurate and contextually relevant responses.

Understanding the RAG Model in Python

A RAG pipeline consists of three main components:

Retriever: The retriever is responsible for searching and retrieving relevant documents or passages based on the input query. It uses techniques like vector similarity search to find the most relevant information.

Generator: The generator is an LLM that takes the retrieved documents and the input query as context and generates a response based on the combined information.

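Combiner: The combiner merges the retrieved documents with the input query into the prompt that the generator receives, so although it is listed last here, it runs before generation. In LangChain this step is controlled by the chain_type parameter: "stuff" concatenates all retrieved documents into a single prompt, while "map_reduce", "refine", and "map_rerank" process the documents individually and then combine the intermediate results.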
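In LangChain's retrieval chains, the retrieved documents are merged into the prompt before the LLM is called. The dependency-free sketch below wires the three components together to show that order. It is an illustration under simplifying assumptions, not LangChain code: the retriever ranks documents by shared words instead of vector similarity, the combiner uses the "stuff" strategy, and the generator is a stand-in function rather than a real LLM. All names (retrieve, stuff_combine, rag_answer, fake_llm) are hypothetical.

```python
import re
from typing import Callable, List


def tokenize(text: str) -> set:
    """Lowercase words with punctuation stripped."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Retriever (toy): rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    return sorted(documents, key=lambda doc: len(query_words & tokenize(doc)), reverse=True)[:k]


def stuff_combine(query: str, docs: List[str]) -> str:
    """Combiner ("stuff" strategy): concatenate every retrieved document into one prompt."""
    context = "\n\n".join(docs)
    return f"Answer using the context below.\n\nContext:\n{context}\n\nQuestion: {query}\nAnswer:"


def rag_answer(query: str, documents: List[str], generate: Callable[[str], str]) -> str:
    """Retrieve, then combine, then generate."""
    docs = retrieve(query, documents)
    prompt = stuff_combine(query, docs)
    return generate(prompt)


def fake_llm(prompt: str) -> str:
    """Generator stand-in; a real pipeline would call an LLM here."""
    return f"(answer generated from a {len(prompt)}-character prompt)"


corpus = [
    "Paris is the capital of France.",
    "The Eiffel Tower is in Paris.",
    "Berlin is the capital of Germany.",
]
print(rag_answer("What is the capital of France?", corpus, fake_llm))
```

Swapping fake_llm for a real model call, and the word-overlap ranking for embedding similarity, gives the same shape as the LangChain pipeline built in the next section.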

How to Use RAG in Python with LangChain

To use RAG in Python with LangChain, follow these steps:

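- Install the necessary dependencies and set the OPENAI_API_KEY environment variable. The examples use LangChain's classic chain classes (RetrievalQA, ConversationalRetrievalChain), so this pins langchain below 1.0, where those classes are still part of the main package:

```bash
pip install "langchain<1.0" langchain-community langchain-openai chromadb
```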

- Prepare your data and load it as a list of LangChain Document objects, for example from text files or a CSV file with a document loader such as TextLoader or CSVLoader.

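- Create a vector database using a library like Chroma:

```python
from langchain_community.vectorstores import Chroma
from langchain_openai import OpenAIEmbeddings

documents = [...]  # Load your documents (a list of Document objects)

# Embed each document and store the vectors in Chroma
vectordb = Chroma.from_documents(documents, embedding=OpenAIEmbeddings())
```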

- Define your retriever using the vector database:

```python
# Return the 3 most similar documents for each query
retriever = vectordb.as_retriever(search_kwargs={"k": 3})
```

- Create an LLM instance:

```python
from langchain_openai import OpenAI

# temperature=0 makes the output as deterministic as possible
llm = OpenAI(temperature=0)
```

- Combine the retriever and LLM to create a RAG model:

```python
from langchain.chains import RetrievalQA

# chain_type="stuff" inserts all retrieved documents into a single prompt
qa = RetrievalQA.from_chain_type(llm=llm, chain_type="stuff", retriever=retriever)
```

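- Use the RAG model to generate responses:

```python
query = "What is the capital of France?"

# RetrievalQA reads its input from "query" and returns the answer under "result"
response = qa.invoke({"query": query})

print(response["result"])
```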
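Conceptually, Chroma.from_documents embeds each document and stores the vectors, and as_retriever(search_kwargs={"k": 3}) returns the k stored documents whose vectors are most similar to the query's vector. The sketch below imitates that with a toy bag-of-words "embedding" and cosine similarity. ToyVectorStore, embed, and cosine are hypothetical names, and real embeddings such as OpenAIEmbeddings produce dense vectors that capture meaning rather than raw word counts.

```python
import heapq
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Dict[str, int]:
    """Toy embedding: a sparse word-count vector (real embeddings are dense)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Dict[str, int], b: Dict[str, int]) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(count * b.get(word, 0) for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """Stores (vector, text) pairs and returns the top-k most similar texts."""

    def __init__(self, texts: List[str]):
        # Embed every document once, up front
        self.entries: List[Tuple[Dict[str, int], str]] = [(embed(t), t) for t in texts]

    def similarity_search(self, query: str, k: int = 3) -> List[str]:
        query_vec = embed(query)
        # Score every stored document against the query and keep the k best
        best = heapq.nlargest(k, self.entries, key=lambda entry: cosine(query_vec, entry[0]))
        return [text for _, text in best]


store = ToyVectorStore([
    "Paris is the capital of France.",
    "Berlin is the capital of Germany.",
    "Python is a programming language.",
])
print(store.similarity_search("capital of France", k=1))  # ['Paris is the capital of France.']
```

Raising k gives the LLM more context per query at the cost of a longer prompt; with the "stuff" chain type, all k documents must fit in the model's context window.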

Applications of LangChain RAG

RAG can be applied to a wide range of AI applications. The sections below walk through several of them, with step-by-step instructions and sample code to help you get started.

Building Intelligent Chatbots with LangChain RAG

One of the primary applications of LangChain RAG is building intelligent chatbots that can answer questions based on a knowledge base. Here's how you can create a chatbot using LangChain RAG:

- Prepare your knowledge base by collecting relevant documents or data sources.

- Convert the documents into LangChain Document objects, for example by loading text files or a CSV file with a document loader.

- Create a vector database using a library like Chroma:

```python
from langchain_community.vectorstores import Chroma
from langchain_openai import OpenAIEmbeddings

documents = [...]  # Load your documents (a list of Document objects)

vectordb = Chroma.from_documents(documents, embedding=OpenAIEmbeddings())
```

- Define your retriever using the vector database:

```python
retriever = vectordb.as_retriever(search_kwargs={"k": 3})
```

- Create an LLM instance:

```python
from langchain_openai import OpenAI

llm = OpenAI(temperature=0)
```

- Combine the retriever and LLM to create a RAG model:

```python
from langchain.chains import ConversationalRetrievalChain

# Uses chat_history to rephrase follow-up questions before retrieving and answering
qa = ConversationalRetrievalChain.from_llm(llm=llm, retriever=retriever)
```

- Implement a conversation loop to interact with the chatbot:

```python
chat_history = []

while True:
    query = input("User: ")
    if query.lower() == "exit":
        break

    # Pass the new question together with the previous (question, answer) pairs
    result = qa.invoke({"question": query, "chat_history": chat_history})
    chat_history.append((query, result["answer"]))
    print(f"Assistant: {result['answer']}")
```
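ConversationalRetrievalChain uses chat_history by asking the LLM to rewrite the latest question as a standalone question, and then retrieving with that rewritten question. That is what lets a follow-up such as "What is its population?" still find documents about the right city. The sketch below shows only the prompt-building half of that step; the template wording and the build_condense_prompt helper are hypothetical, not LangChain's built-in prompt.

```python
from typing import List, Tuple


def build_condense_prompt(chat_history: List[Tuple[str, str]], question: str) -> str:
    """Format (question, answer) history plus a follow-up into a rewrite request."""
    history_lines = []
    for human, ai in chat_history:
        history_lines.append(f"Human: {human}")
        history_lines.append(f"Assistant: {ai}")
    history = "\n".join(history_lines)
    return (
        "Given the conversation below, rephrase the follow-up question "
        "as a standalone question.\n\n"
        f"Chat history:\n{history}\n\n"
        f"Follow-up question: {question}\n"
        "Standalone question:"
    )


history = [("What is the capital of France?", "The capital of France is Paris.")]
print(build_condense_prompt(history, "What is its population?"))
```

The LLM's reply to this prompt (for example, "What is the population of Paris?") is what the retriever actually searches with, which is why the chat_history list of (question, answer) tuples matters for retrieval quality and not just for the final answer.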

Creating Question-Answering Systems with LangChain RAG

LangChain RAG can be used to create question-answering systems for specific domains like customer support or technical documentation. Here's how you can build a question-answering system:

- Collect and preprocess the relevant documents or data sources for your specific domain.

- Create a vector database and retriever as shown in the previous example.

- Define a question-answering chain using the RAG model:

```python
from langchain.chains import RetrievalQA

qa_chain = RetrievalQA.from_chain_type(llm=llm, chain_type="stuff", retriever=retriever)
```

- Use the question-answering chain to generate responses:

```python
query = "What are the system requirements for installing the software?"

response = qa_chain.invoke({"query": query})

print(response["result"])
```
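Preprocessing domain documents usually includes splitting long texts into smaller, overlapping chunks, so that each retrieved passage is focused and several of them fit in one prompt. LangChain ships text splitters for this, such as RecursiveCharacterTextSplitter with its chunk_size and chunk_overlap parameters, which prefers to split on paragraph and line boundaries. The dependency-free sketch below only illustrates the basic idea with a fixed-size character window; split_into_chunks is a hypothetical helper.

```python
from typing import List


def split_into_chunks(text: str, chunk_size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into fixed-size character windows that overlap by `overlap` chars."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a chunk reaches the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks


print(split_into_chunks("abcdefghij", chunk_size=4, overlap=2))  # ['abcd', 'cdef', 'efgh', 'ghij']
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the cost of storing some text twice.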

Generating Summaries or Explanations with LangChain RAG

LangChain RAG can be used to generate summaries or explanations from a collection of documents. Here's how you can achieve this:

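- Prepare your document collection and create a vector database and retriever as shown in the previous examples.

- Define a summarization chain using the RAG model. A RetrievalQA chain with chain_type="map_reduce" summarizes each retrieved document separately and then combines the partial results:

```python
from langchain.chains import RetrievalQA

# "map_reduce" runs the LLM on each retrieved document, then merges the partial answers
summarization_chain = RetrievalQA.from_chain_type(llm=llm, chain_type="map_reduce", retriever=retriever)
```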

- Use the summarization chain to generate summaries or explanations:

```python
query = "Provide a summary of the key points discussed in the meeting minutes."

summary = summarization_chain.invoke({"query": query})["result"]

print(summary)
```
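LangChain's "map_reduce" chain type is what makes summarizing many documents feasible when they do not all fit in one prompt: the LLM summarizes each document separately (map), then combines the partial summaries in a final call (reduce). The sketch below shows only that control flow; map_reduce_summary and fake_llm are hypothetical, the prompt wording is illustrative, and a real chain would call an actual LLM in both phases.

```python
from typing import Callable, List


def map_reduce_summary(docs: List[str], question: str, llm: Callable[[str], str]) -> str:
    # Map: one LLM call per document, each seeing only that document
    partials = [llm(f"Summarize what this text says about '{question}':\n{doc}") for doc in docs]
    # Reduce: a single final call that merges the partial summaries
    combined = "\n".join(f"- {p}" for p in partials)
    return llm(f"Combine these notes into one summary of '{question}':\n{combined}")


calls: List[str] = []


def fake_llm(prompt: str) -> str:
    """Stand-in LLM that records each prompt and echoes its first line."""
    calls.append(prompt)
    return prompt.splitlines()[0]


minutes = ["Budget approved for Q3.", "Hiring freeze lifted.", "Next meeting on Friday."]
summary = map_reduce_summary(minutes, "key decisions", fake_llm)
print(len(calls))  # 4: three map calls plus one reduce call
```

The trade-off versus "stuff" is cost and latency: n documents mean n + 1 LLM calls instead of one, but no single prompt has to hold every document.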

Enhancing Search Functionality with LangChain RAG

LangChain RAG can be used to enhance search functionality by providing more relevant and contextual results. Here's how you can integrate RAG into a search system:

- Prepare your document collection and create a vector database and retriever as shown in the previous examples.

- Define a search function that utilizes the RAG model:

```python
def search(query):
    # Retrieve the most relevant documents for the query
    docs = retriever.invoke(query)
    context = "\n\n".join(doc.page_content for doc in docs)

    # Ask the LLM to answer the question given the retrieved context (max 100 tokens)
    prompt = f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    response = llm.invoke(prompt, max_tokens=100)
    return response.strip()
```

- Use the search function to retrieve relevant results:

```python
query = "What are the benefits of using our product?"

result = search(query)

print(result)
```

By combining retrieval with generation, LangChain RAG lets you build AI applications that answer user queries using information from your own documents rather than relying only on the model's training data. Whether you're building chatbots, question-answering systems, summarization tools, or search features, LangChain RAG provides a flexible way to incorporate external knowledge and generate contextually relevant outputs.

Conclusion

RAG in LangChain is a powerful technique that combines information retrieval with language models to generate high-quality responses. By using LangChain's intuitive API and integrating with vector databases like Chroma, you can easily implement RAG in your Python projects. Whether you're building chatbots, question-answering systems, or other AI applications, LangChain RAG provides a flexible and efficient way to incorporate external knowledge and generate contextually relevant outputs.

Discover the power of LangChain RAG and revolutionize your AI applications with retrieval augmented generation!