Users of AI applications expect responses to appear as they are generated, not after a long pause. LangChain supports this through streaming, which delivers a language model's output in chunks while it is still being produced. This guide covers the essentials of streaming in LangChain, from basic concepts to practical implementation, explains the difference between invoking a model and streaming its output, and looks at what streaming means for large language models (LLMs) more broadly. Whether you're building a chatbot, a real-time translation tool, or another interactive AI-driven application, streaming can make it feel noticeably more responsive.

TL;DR: Quick Overview

- Initialize Environment: Set up LangChain and configure your language model.

- Configure Streaming: Build a chain and decide how its streamed output will be consumed.

- Implement Logic: Develop functions to handle and respond to continuous data.

- Test and Optimize: Ensure the system's responsiveness and stability through rigorous testing.

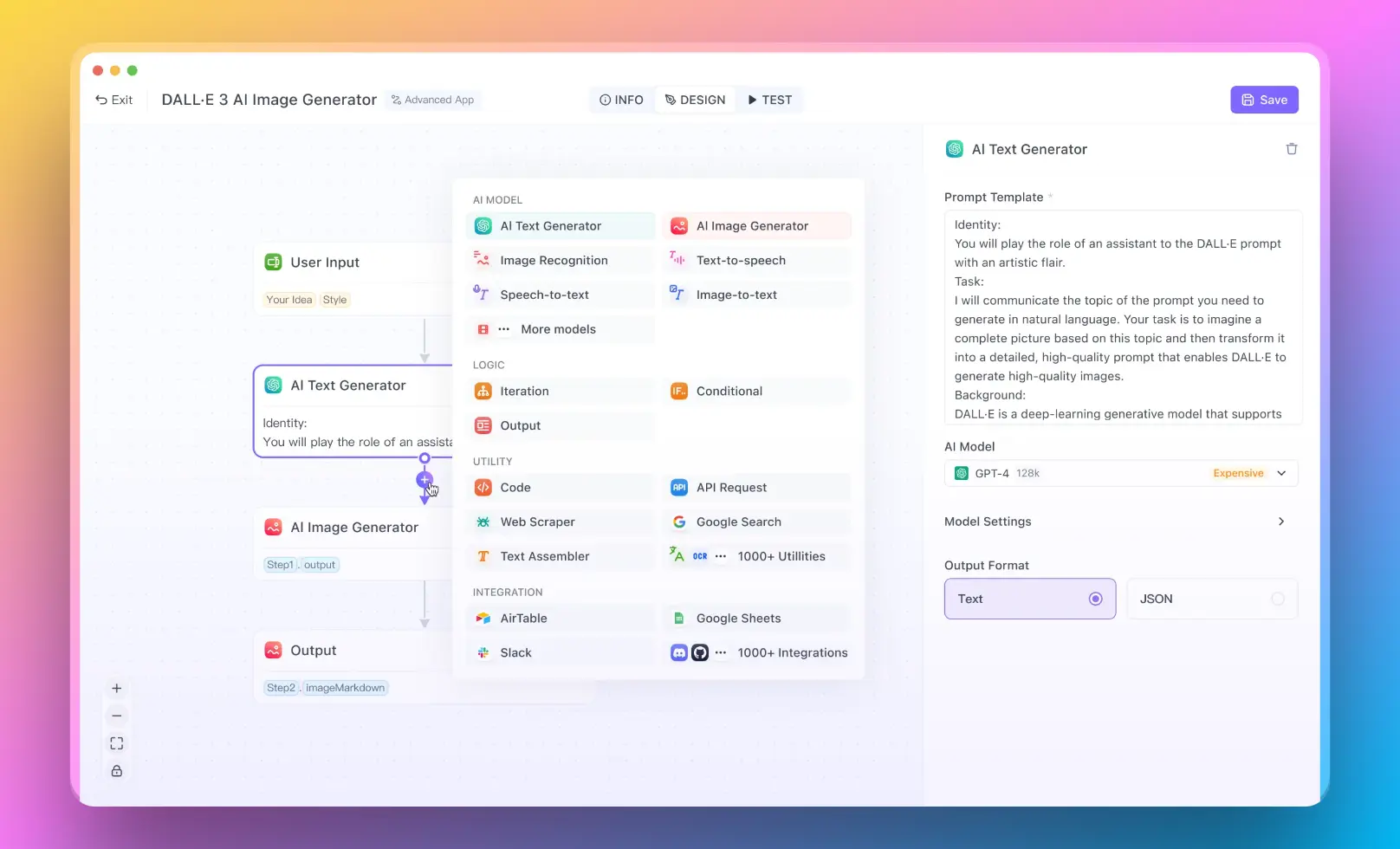


What is Streaming LangChain?

LangChain is a framework for integrating language models into applications. Streaming in LangChain means receiving the output of a model or chain incrementally, chunk by chunk, as it is generated, rather than waiting for the complete response. This is particularly useful where users are waiting on the output, such as in chatbots, real-time translation services, or interactive educational platforms.

Step-by-Step Guide to Streaming Output in LangChain

To effectively stream output in LangChain, developers need to follow a structured approach. Here’s how you can start streaming outputs with LangChain:

Initialize Your LangChain Environment:

Set up your LangChain environment by installing the necessary libraries and setting up your language model.

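# Requires: pip install langchain-openai
from langchain_openai import OpenAI

# Initialize the language model
# (alternatively, set the OPENAI_API_KEY environment variable and omit api_key)
llm = OpenAI(api_key="your-api-key")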

Configure Streaming Settings:

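LangChain does not need a special chain class for streaming: any chain built with the LangChain Expression Language (LCEL) exposes a stream() method. Build the chain from a prompt, the model, and an output parser.

from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import PromptTemplate

# Create a chain; like every LCEL runnable, it supports .stream()
prompt = PromptTemplate.from_template("{input}")
streaming_chain = prompt | llm | StrOutputParser()

Implement Streaming Logic:

Write the logic that consumes the stream. Calling stream() returns an iterator of text chunks, so the handler prints each chunk as it arrives and returns the assembled response.

def handle_input(input_text):
    chunks = []
    for chunk in streaming_chain.stream({"input": input_text}):
        print(chunk, end="", flush=True)  # show each chunk as soon as it arrives
        chunks.append(chunk)
    print()
    return "".join(chunks)

# Example usage
handle_input("Hello, how can I assist you today?")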

Test and Optimize:

Continuously test the streaming setup with different types of inputs to ensure stability and responsiveness. Optimize based on feedback and performance metrics.
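One way to put a number on responsiveness is to record the time to the first chunk alongside the total time for a streamed response. The sketch below is a minimal illustration that works on any iterator of text chunks. `fake_stream` and `measure_stream` are hypothetical helpers, not LangChain functions, and the delay is an invented value so that the example runs without an API key; in a real test, the iterator returned by a call such as `llm.stream(...)` would take the place of `fake_stream`.

```python
import time
from typing import Iterable, Iterator, Tuple


def fake_stream(text: str, delay: float = 0.01) -> Iterator[str]:
    """Hypothetical stand-in for a model stream: yields one word at a time."""
    for word in text.split(" "):
        time.sleep(delay)  # simulate generation time per chunk
        yield word + " "


def measure_stream(chunks: Iterable[str]) -> Tuple[str, float, float]:
    """Consume a stream; return (full_text, time_to_first_chunk, total_time)."""
    start = time.perf_counter()
    first_chunk_at = None
    parts = []
    for chunk in chunks:
        if first_chunk_at is None:
            first_chunk_at = time.perf_counter() - start
        parts.append(chunk)
    total = time.perf_counter() - start
    # An empty stream has no first chunk; report the total time instead.
    if first_chunk_at is None:
        first_chunk_at = total
    return "".join(parts), first_chunk_at, total


text, first, total = measure_stream(fake_stream("streaming keeps users engaged"))
print(f"first chunk after {first:.3f}s, complete after {total:.3f}s")
```

Tracking both numbers separately is useful because streaming mainly improves the first one: the total generation time stays roughly the same, but the user starts reading much sooner.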

What is the Difference Between Invoke and Stream in LangChain?

In LangChain, "invoke" and "stream" are two methods for calling the same model or chain:

Invoke: This method sends the input and blocks until the model has finished, then returns the complete response as a single value. It suits batch jobs and short outputs where nobody is waiting on partial results.

Stream: This method sends the same input but returns an iterator that yields the response in chunks as the model generates them. The content is equivalent; what changes is that the user sees the first words almost immediately, which is why chat interfaces rely on it. Neither method remembers earlier calls, so conversation history has to be passed in with each request.

Here’s a simple comparison using code:

# Using invoke: returns the complete response at once
response = llm.invoke("What is the weather like today?")
print(response)

# Using stream: yields the response chunk by chunk
for chunk in llm.stream("What is the weather like today?"):
    print(chunk, end="", flush=True)
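Because that comparison needs an API key to run, the relationship between the two methods can also be sketched offline. `EchoModel` below is a hypothetical stand-in, not a LangChain class, that only mimics the shape of `invoke` and `stream`: one returns the whole string, the other yields it in pieces, and joining the pieces gives back the same text.

```python
from typing import Iterator


class EchoModel:
    """Hypothetical stand-in for an LLM; not part of LangChain."""

    def __init__(self, canned_reply: str) -> None:
        self.canned_reply = canned_reply

    def invoke(self, prompt: str) -> str:
        # Blocks until the whole reply is ready, then returns it in one piece.
        return self.canned_reply

    def stream(self, prompt: str) -> Iterator[str]:
        # Yields the same reply incrementally, a few characters at a time.
        step = 4
        for i in range(0, len(self.canned_reply), step):
            yield self.canned_reply[i:i + step]


model = EchoModel("Sunny with a light breeze.")
whole = model.invoke("What is the weather like today?")
pieces = list(model.stream("What is the weather like today?"))

print(whole)
print(pieces)
```

The chunk size of four characters is arbitrary; real models emit chunks whose boundaries depend on their tokenizer and the provider's API.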

What is Streaming in LLMs (Large Language Models)?

LLMs generate text one token at a time, and streaming exposes that process: instead of waiting for the full completion, the client receives tokens (or small groups of tokens) as soon as they are produced. This matters for interactive applications such as virtual assistants, live customer support systems, and interactive learning tools, where a long response would otherwise leave the user waiting with nothing on screen. Streaming does not by itself give the model memory; the application keeps the context of a conversation by sending the relevant history with each request.

Benefits of Streaming in LLMs

- Contextual Awareness: Streaming itself does not store context, but each streamed response can be appended to a conversation history that is sent with the next request, which keeps long sessions coherent.

- Real-Time Interaction: Output appears as it is generated, so users get feedback right away instead of waiting for the full response.

- Scalability: Chunks can be forwarded to the client as they arrive rather than buffered in full, and asynchronous streaming lets one process serve many conversations at the same time.
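To make the point about context concrete, here is a minimal sketch of carrying conversation history across streamed turns. Everything in it is an assumption made for illustration: `build_prompt`, `fake_stream_reply`, and `chat_turn` are hypothetical helpers, the `role: text` prompt layout is one arbitrary choice, and the fake model simply reports how many earlier user turns appear in its prompt so the effect of the history is visible.

```python
from typing import Iterator, List, Tuple

History = List[Tuple[str, str]]  # (role, text) pairs


def build_prompt(history: History, user_input: str) -> str:
    """Flatten earlier turns plus the new input into one prompt string."""
    lines = [f"{role}: {text}" for role, text in history]
    lines.append(f"user: {user_input}")
    lines.append("assistant:")
    return "\n".join(lines)


def fake_stream_reply(prompt: str) -> Iterator[str]:
    """Hypothetical model: reports how many earlier user turns it can see."""
    seen = prompt.count("user: ") - 1
    for word in f"I can see {seen} earlier user turn(s).".split(" "):
        yield word + " "


def chat_turn(history: History, user_input: str) -> str:
    """Stream one reply, then record both sides of the turn in the history."""
    prompt = build_prompt(history, user_input)
    reply = "".join(fake_stream_reply(prompt)).strip()
    history.append(("user", user_input))
    history.append(("assistant", reply))
    return reply


history: History = []
first_reply = chat_turn(history, "What is the weather like today?")
second_reply = chat_turn(history, "And tomorrow?")
print(first_reply)
print(second_reply)
```

The model only "remembers" the first question because `chat_turn` resends it; dropping the history would make every turn look like the first.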

Implementing Streaming in LLMs

Here’s a basic example of implementing streaming in an LLM:

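# Requires: pip install langchain-openai
from langchain_openai import OpenAI

# Initialize the LLM
llm = OpenAI(api_key="your-api-key")

# Function to handle streaming
def stream_conversation(input_text):
    print("Streaming Response: ", end="")
    response = ""
    for chunk in llm.stream(input_text):  # llm.stream() yields text chunks
        print(chunk, end="", flush=True)
        response += chunk
    print()
    return response

# Example conversation (each call is independent; no history is shared)
stream_conversation("Hello, what's your name?")
stream_conversation("How can you help me today?")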

That's all you need to make it work!
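When an application serves several users at once, streams are usually consumed asynchronously so that one slow response does not block the others. LangChain runnables offer an `astream` method for this purpose. The sketch below does not call LangChain; `fake_astream` is a hypothetical async generator standing in for such a stream, and it only shows the consumption pattern with `async for` and `asyncio.gather`.

```python
import asyncio
from typing import AsyncIterator, List


async def fake_astream(text: str) -> AsyncIterator[str]:
    """Hypothetical async stream: yields one word at a time."""
    for word in text.split(" "):
        await asyncio.sleep(0)  # hand control back to the event loop
        yield word + " "


async def collect(label: str, text: str, log: List[str]) -> str:
    """Consume one stream, logging which conversation each chunk belongs to."""
    parts = []
    async for chunk in fake_astream(text):
        log.append(label)
        parts.append(chunk)
    return "".join(parts).strip()


async def main() -> tuple:
    log: List[str] = []
    replies = await asyncio.gather(
        collect("A", "first reply here", log),
        collect("B", "second reply here", log),
    )
    return replies, log


replies, log = asyncio.run(main())
print(replies)
print(log)
```

The log records the order in which chunks from the two conversations were handled, which makes it easy to see whether the streams were interleaved rather than processed one after the other.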

Conclusion

In conclusion, streaming in LangChain exposes the token-by-token generation of LLMs so that applications can display output as it is produced. By understanding and implementing streaming, developers can build more interactive and responsive applications and make AI interactions feel smoother and more natural.