In the quiet of a dimly-lit dorm room, with the gentle hum of my old laptop, I embarked on a journey into the world of coding. My mission was simple yet daunting: to breathe life into a cluster of code, transforming it into a chatbot that could engage in basic conversation. Night after night, I fed it lines of Python, coaxing it toward complexity. And then, one crisp autumn evening, it spat out its first coherent response. "Hello, World!" it said, and in that moment, it was as if I'd unlocked a secret door to the future.

That first foray into AI was a mix of magic and logic, a testament to human ingenuity. It's been years since that triumphant night, and the field of artificial intelligence has not just evolved; it has exploded into realms once relegated to the pages of science fiction. The release of Qwen 1.5 by Alibaba Cloud is a landmark event in this ongoing saga, a glittering beacon in the AI odyssey.

The journey from those early days to the present, where AI can compose poetry, diagnose diseases, and even drive cars, is nothing short of miraculous. Qwen 1.5 is the latest chapter in this extraordinary tale, a narrative that continues to unfold with each algorithmic breakthrough and each line of code that's written.

Article Summary

- Qwen 1.5's Debut: Alibaba Cloud launches Qwen 1.5, an AI model with six different sizes, challenging the dominance of models like GPT-3.5 with its impressive capabilities.

- Redefining Conversational AI: Equipped with multilingual support and the ability to handle lengthy 32K token contexts, Qwen 1.5 redefines the boundaries of conversational AI.

- Open-Access Revolution: Qwen 1.5 not only competes with giants like GPT-4 on several benchmarks, it also joins the open-access movement in AI by releasing its weights, making advanced technology freely available to innovators everywhere.

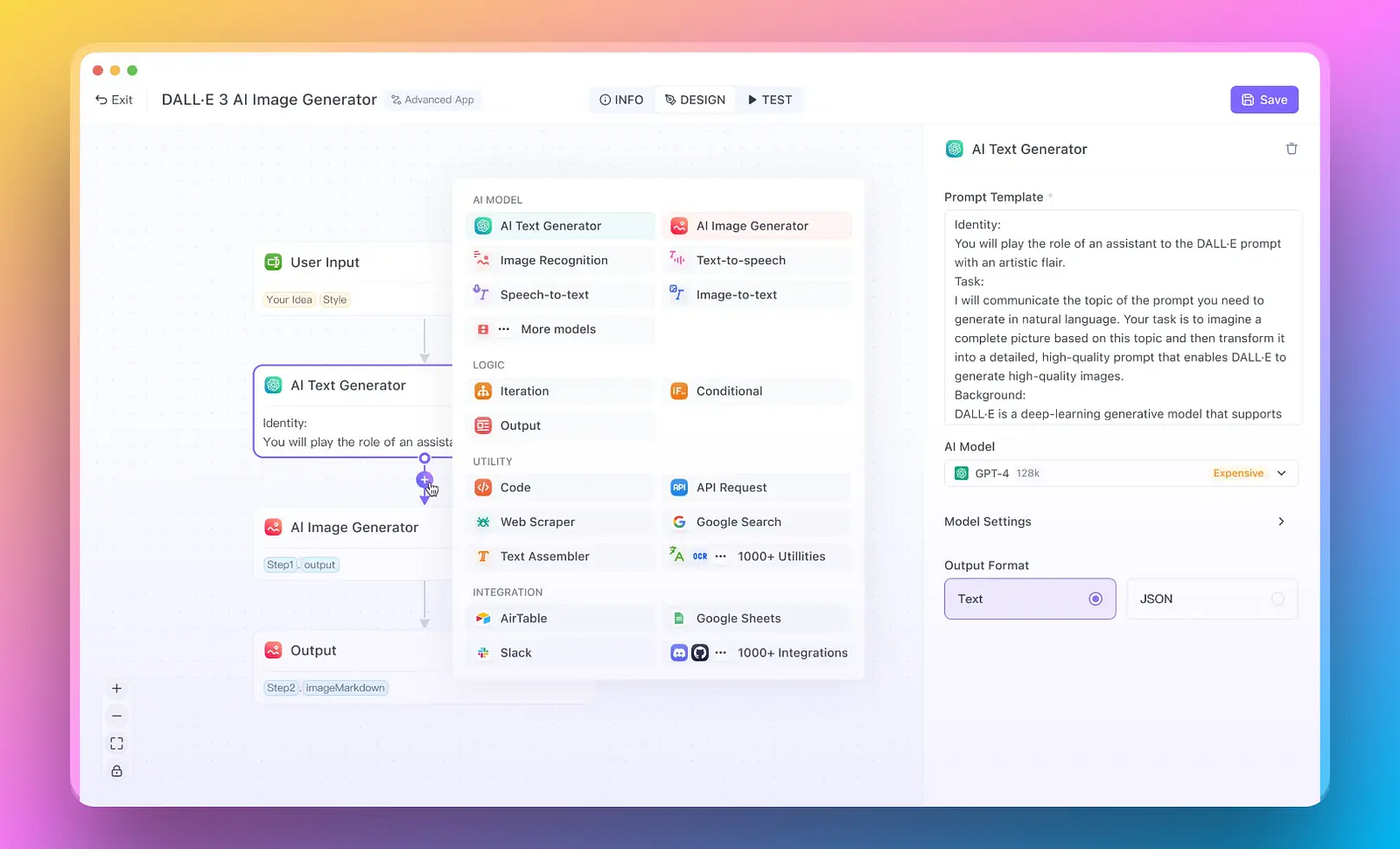

But what if you just need to build AI apps quickly, without the hassle?

Here you go: Anakin AI is the best No Code AI App Builder on the market. Build any AI Agents with multi-model support for your own data and workflow!

How Does Qwen 1.5 Redefine Multilingual AI Models?

In the bustling bazaar of AI innovations, Qwen 1.5 stands out not just as another contender, but as a trailblazer. Alibaba Cloud has released not a single model but a family whose sizes range from a modest 0.5 billion to a staggering 72 billion parameters (0.5B, 1.8B, 4B, 7B, 14B, and 72B). This breadth of sizes reflects a tailored approach to AI: a small model can run on modest hardware, while the largest can take on the most demanding work.

The suite, in its varying capacities, is designed to cater to a broad spectrum of tasks, from the most straightforward to the most demanding. Its largest variant, with 72 billion parameters, is akin to a digital polymath with a profound capacity for learning and understanding.

Why is Qwen 1.5's Multilingual Support Groundbreaking?

Multilingual Support

Qwen 1.5 is not just a polyglot; it's an open door to a global conversation. With its multilingual support, it shatters the linguistic boundaries that have long compartmentalized knowledge and expertise.

This model invites a multitude of voices to the table, enabling a rich tapestry of cultures to contribute to and benefit from AI advancements. It heralds a future where language is no longer a hurdle in the global dialogue of innovation but a bridge connecting diverse intellects.

AI Model Efficiency

Furthermore, Qwen 1.5 is built to be easy to adopt. It is integrated natively into the Hugging Face Transformers library (version 4.37.0 and later), so developers can load it with the standard Auto classes, without trust_remote_code, compatibility hassles, or a steep learning curve.

Qwen 1.5 is also released in quantized versions: GPTQ (Int4 and Int8), AWQ, and GGUF. These are not just jargon but milestones in the quest for a leaner, more agile AI. Quantization stores the weights at lower precision, which cuts memory use and often speeds up inference, so the model can deliver solid performance without the prodigious resource expenditure typically associated with such sophisticated models.
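To put rough numbers on what lower precision saves, here is a back-of-the-envelope sketch. It assumes that weight memory is simply parameter count × bits per weight / 8. It ignores activations, the KV cache, and the small per-group scales that real quantization formats store. It also uses the nominal sizes from the model names, although the actual parameter counts differ somewhat.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumption: weight memory = parameters x bits per weight / 8.
# Activations, KV cache, and quantization metadata (scales, zero points) are ignored.

def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    # Nominal sizes taken from the model names, not exact parameter counts
    for size_name, params in [("7B", 7e9), ("72B", 72e9)]:
        fp16 = weight_memory_gb(params, 16)
        int8 = weight_memory_gb(params, 8)
        int4 = weight_memory_gb(params, 4)
        print(f"{size_name}: fp16 ~{fp16:.1f} GB, int8 ~{int8:.1f} GB, int4 ~{int4:.1f} GB")
```

By this estimate, 4-bit weights need about a quarter of the fp16 footprint. That is why an Int4 checkpoint of a 7B-class model can fit on a single consumer GPU.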

How Does Qwen 1.5 Stack Up Against Other Models?

In the competitive arena of artificial intelligence, where models vie for supremacy, Qwen 1.5 emerges not just to compete but to set a new standard. The MT-Bench and Alpaca-Eval performance charts are not mere numbers; they narrate a story of triumph. In this tale, Qwen 1.5 ascends the ranks, outshining rivals in a broad array of metrics. This is a model that doesn't just nudge the bar higher; it redefines where the bar should be.

Benchmark Performance

| Model | MMLU | C-Eval | GSM8K | MATH | HumanEval | MBPP | BBH | CMMLU |
|---|---|---|---|---|---|---|---|---|
| GPT-4 | 86.4 | 69.9 | 92.0 | 45.8 | 67.0 | 61.8 | 86.7 | 71.0 |
| Llama2-7B | 46.8 | 32.5 | 16.7 | 3.3 | 12.8 | 20.8 | 38.2 | 31.8 |
| Llama2-13B | 55.0 | 41.4 | 29.6 | 5.0 | 18.9 | 30.3 | 45.6 | 38.4 |
| Llama2-34B | 62.6 | - | 42.2 | 6.2 | 22.6 | 33.0 | 44.1 | - |
| Llama2-70B | 69.8 | 50.1 | 54.4 | 10.6 | 23.7 | 37.7 | 58.4 | 53.6 |
| Mistral-7B | 64.1 | 47.4 | 47.5 | 11.3 | 27.4 | 38.6 | 56.7 | 44.7 |
| Mixtral-8x7B | 70.6 | - | 74.4 | 28.4 | 40.2 | 60.7 | - | - |
| Qwen1.5-7B | 61.0 | 74.1 | 62.5 | 20.3 | 36.0 | 37.4 | 40.2 | 73.1 |
| Qwen1.5-14B | 67.6 | 78.7 | 70.1 | 29.2 | 37.8 | 44.0 | 53.7 | 77.6 |
| Qwen1.5-72B | 77.5 | 84.1 | 79.5 | 34.1 | 41.5 | 53.4 | 65.5 | 83.5 |

Here are our takes:

- Imagine a decathlon in which a single athlete outpaces, outthrows, and outjumps nearly the whole field. That is what Qwen 1.5 achieves in the digital realm. Among the open models in the table, Qwen1.5-72B posts the top score on every benchmark except MBPP, where Mixtral-8x7B leads. GPT-4 remains ahead on most English, math, and coding benchmarks, while Qwen1.5-72B surpasses it on C-Eval and CMMLU.

- It’s a testament to its design that marries robustness with versatility, ensuring that whether it’s language understanding, generation, or reasoning, Qwen 1.5 is equipped to excel.
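You can check claims like "top score in nearly every event" directly against the table above. The sketch below copies those scores into a dictionary, treats "-" as not reported, and finds the leading model for each benchmark. The leaders function is a hypothetical helper written for this article. You can run it with GPT-4 included or excluded.

```python
# Scores copied from the benchmark table above; None marks a "-" (not reported).
BENCHMARKS = ["MMLU", "C-Eval", "GSM8K", "MATH", "HumanEval", "MBPP", "BBH", "CMMLU"]
SCORES = {
    "GPT-4":        [86.4, 69.9, 92.0, 45.8, 67.0, 61.8, 86.7, 71.0],
    "Llama2-7B":    [46.8, 32.5, 16.7, 3.3, 12.8, 20.8, 38.2, 31.8],
    "Llama2-13B":   [55.0, 41.4, 29.6, 5.0, 18.9, 30.3, 45.6, 38.4],
    "Llama2-34B":   [62.6, None, 42.2, 6.2, 22.6, 33.0, 44.1, None],
    "Llama2-70B":   [69.8, 50.1, 54.4, 10.6, 23.7, 37.7, 58.4, 53.6],
    "Mistral-7B":   [64.1, 47.4, 47.5, 11.3, 27.4, 38.6, 56.7, 44.7],
    "Mixtral-8x7B": [70.6, None, 74.4, 28.4, 40.2, 60.7, None, None],
    "Qwen1.5-7B":   [61.0, 74.1, 62.5, 20.3, 36.0, 37.4, 40.2, 73.1],
    "Qwen1.5-14B":  [67.6, 78.7, 70.1, 29.2, 37.8, 44.0, 53.7, 77.6],
    "Qwen1.5-72B":  [77.5, 84.1, 79.5, 34.1, 41.5, 53.4, 65.5, 83.5],
}


def leaders(exclude=()):
    """Map each benchmark to its highest-scoring model, skipping excluded and unreported entries."""
    result = {}
    for i, bench in enumerate(BENCHMARKS):
        reported = {m: s[i] for m, s in SCORES.items() if m not in exclude and s[i] is not None}
        result[bench] = max(reported, key=reported.get)
    return result


if __name__ == "__main__":
    print("All models:      ", leaders())
    print("Open models only:", leaders(exclude={"GPT-4"}))
```

Benchmark numbers come from different evaluation setups, so treat a table like this as a rough guide rather than a final ranking.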

Support for Long Context

There's a certain finesse in managing a lengthy conversation or dissecting a complex document, and it's here that Qwen 1.5 demonstrates its mettle.

| Models | Coursera | GSM | QuALITY | TOEFL | SFiction | Avg. |
|---|---|---|---|---|---|---|
| GPT3.5-turbo-16k | 63.51 | 84.00 | 61.38 | 78.43 | 64.84 | 70.43 |
| Claude1.3-100k | 60.03 | 88.00 | 73.76 | 83.64 | 72.65 | 75.62 |
| GPT4-32k | 75.58 | 96.00 | 82.17 | 84.38 | 74.99 | 82.62 |
| Qwen-72B-Chat | 58.13 | 76.00 | 77.22 | 86.24 | 69.53 | 73.42 |
| Qwen1.5-0.5B-Chat | 30.81 | 6.00 | 34.16 | 40.52 | 49.22 | 32.14 |
| Qwen1.5-1.8B-Chat | 39.24 | 37.00 | 42.08 | 55.76 | 44.53 | 43.72 |
| Qwen1.5-4B-Chat | 54.94 | 47.00 | 57.92 | 69.15 | 56.25 | 57.05 |
| Qwen1.5-7B-Chat | 59.74 | 60.00 | 64.36 | 79.18 | 62.50 | 65.16 |
| Qwen1.5-14B-Chat | 69.04 | 79.00 | 74.75 | 83.64 | 75.78 | 76.44 |
| Qwen1.5-72B-Chat | 71.95 | 82.00 | 77.72 | 85.50 | 73.44 | 78.12 |

Here are the takes:

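- With the capability to process up to 32K (32,768) tokens, it's akin to a master weaver that can handle the longest threads without losing the pattern. In the table above, Qwen1.5-72B-Chat (average 78.12) and Qwen1.5-14B-Chat (76.44) both outscore GPT3.5-turbo-16k and Claude1.3-100k, while GPT4-32k (82.62) still leads.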

- This ability to maintain coherence over extended dialogues or narratives opens new doors for applications requiring deep contextual awareness, from drafting legal documents to composing literary pieces.
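The 32K window is shared by the prompt and the generated reply, so long documents need a token budget. Below is a minimal sketch. It assumes a limit of 32,768 tokens and token IDs that you have already produced with the model's tokenizer. fits_in_context and chunk_token_ids are hypothetical helpers, not part of any library.

```python
from __future__ import annotations

# Assumption: Qwen 1.5's window is 32,768 tokens, shared by prompt and output.
CONTEXT_LENGTH = 32_768


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    context_length: int = CONTEXT_LENGTH) -> bool:
    """True if the prompt plus the requested output fit inside the window."""
    return prompt_tokens + max_new_tokens <= context_length


def chunk_token_ids(token_ids: list[int], max_new_tokens: int,
                    context_length: int = CONTEXT_LENGTH) -> list[list[int]]:
    """Split token IDs into pieces that each leave room for max_new_tokens of output."""
    budget = context_length - max_new_tokens
    if budget <= 0:
        raise ValueError("max_new_tokens leaves no room for the prompt")
    return [token_ids[i:i + budget] for i in range(0, len(token_ids), budget)]
```

For example, with max_new_tokens=768 a 40,000-token document splits into a 32,000-token chunk and an 8,000-token chunk. Fixed-size chunking can cut a sentence in half. Splitting on paragraph boundaries is usually kinder to the model, at the cost of a little more code.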

And... Open Source!

The merit of an AI model is not solely in its performance but also in its reach. By embracing an open-access philosophy, Qwen 1.5 extends its hand to a global community of developers and researchers. This accessibility ensures that the fruits of Alibaba Cloud's labor are not locked behind the gates of proprietary restrictions but are shared freely, catalyzing innovation and research across borders. It is a model that belongs as much to a student in a university dorm as it does to a developer in a tech conglomerate.

In the grand chessboard of AI, Qwen 1.5 is Alibaba Cloud's checkmate move, offering competitive performance, strong long-context handling, and broad accessibility. It does not stand in isolation but as part of an ecosystem, shaping and being shaped by the community that builds on it.

Running Qwen 1.5, Step by Step

Developing with Qwen 1.5 has been made significantly more user-friendly thanks to its integration with Hugging Face's Transformers library. Here's a step-by-step guide to getting started:

Getting Started with Qwen 1.5

Set Up Your Environment: Install a version of Hugging Face transformers that supports Qwen 1.5 (4.37.0 or later). Loading with device_map="auto" also requires the accelerate package.
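Before downloading any weights, you can confirm that the installed transformers release is new enough. The sketch below assumes that native Qwen 1.5 support starts at 4.37.0. It uses only the standard library. parse_version and supports_qwen15 are hypothetical helpers, and the parser is deliberately simple: pre-release tags such as rc1 or dev0 are dropped.

```python
from __future__ import annotations

from importlib.metadata import PackageNotFoundError, version

# Assumption: Qwen 1.5 is supported natively from transformers 4.37.0 onward.
MIN_VERSION = (4, 37, 0)


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '4.37.2' or '4.40.0.dev0' into (major, minor, patch), ignoring suffixes."""
    parts = []
    for piece in text.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    while len(parts) < 3:
        parts.append(0)  # pad so '4.37' compares like '4.37.0'
    return tuple(parts)


def supports_qwen15(version_text: str) -> bool:
    return parse_version(version_text) >= MIN_VERSION


if __name__ == "__main__":
    try:
        installed = version("transformers")
    except PackageNotFoundError:
        print("transformers is not installed; try: pip install 'transformers>=4.37.0'")
    else:
        status = "OK" if supports_qwen15(installed) else "too old, please upgrade"
        print(f"transformers {installed}: {status}")
```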

Load the Model: Use AutoModelForCausalLM.from_pretrained with the model name and device_map set to "auto" to load the model for causal language modeling.

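```python
from transformers import AutoModelForCausalLM

# device_map="auto" places weights on available devices (needs accelerate); torch_dtype="auto" keeps the checkpoint dtype
model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen1.5-7B-Chat", torch_dtype="auto", device_map="auto")
```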

Chat with Qwen 1.5: Prepare your prompt and use the tokenizer to apply a chat template, then generate a response using the model.

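```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Qwen/Qwen1.5-7B-Chat"
model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype="auto", device_map="auto")
tokenizer = AutoTokenizer.from_pretrained(model_name)

# Build the prompt with the model's chat template
messages = [{"role": "user", "content": "Give me a short introduction to large language models."}]
text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
inputs = tokenizer([text], return_tensors="pt").to(model.device)
# Generate, then decode only the newly generated tokens (skip the echoed prompt)
output_ids = model.generate(**inputs, max_new_tokens=512)
response = tokenizer.decode(output_ids[0][inputs.input_ids.shape[1]:], skip_special_tokens=True)
print(response)
```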
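To see what apply_chat_template hands to the model, here is a simplified, hypothetical re-implementation of the ChatML layout that Qwen chat models use. The <|im_start|> and <|im_end|> markers wrap each turn. The real template ships with the tokenizer and may differ in details, for example by inserting a default system message. This sketch does not.

```python
from __future__ import annotations


def render_chatml(messages: list[dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Simplified ChatML rendering: one <|im_start|>role ... <|im_end|> block per message."""
    text = ""
    for message in messages:
        text += f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
    if add_generation_prompt:
        # Open an assistant turn so the model continues with its reply
        text += "<|im_start|>assistant\n"
    return text


if __name__ == "__main__":
    print(render_chatml([
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hi"},
    ]))
```

This is also the format that llama.cpp's ChatML mode expects. You can compare it against the tokenizer's own output by printing the text variable in the example above.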

Use Quantized Weights: For low-resource or deployment scenarios, use the AWQ and GPTQ checkpoints. They load the same way as the full-precision models, under their own model names.
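As a sketch, assume the quantized repos follow the Hub naming pattern Qwen/Qwen1.5-<size>-Chat-<format>, for example Qwen/Qwen1.5-7B-Chat-AWQ. A small hypothetical helper can then build the repo ID and pass it to from_pretrained. Verify the exact name on the Hub before relying on it. GGUF is left out because those files target llama.cpp-style runtimes rather than from_pretrained. GPTQ loading also needs optimum and auto-gptq installed, and AWQ needs autoawq.

```python
# Hypothetical helper; the repo naming pattern is an assumption, so verify it on the Hub.
QUANT_SUFFIXES = {
    "none": "",
    "gptq-int4": "-GPTQ-Int4",
    "gptq-int8": "-GPTQ-Int8",
    "awq": "-AWQ",
}


def qwen15_chat_repo(size: str, quant: str = "none") -> str:
    """Build a repo ID such as 'Qwen/Qwen1.5-7B-Chat-AWQ'."""
    if quant not in QUANT_SUFFIXES:
        raise ValueError(f"unknown quantization {quant!r}; choose from {sorted(QUANT_SUFFIXES)}")
    return f"Qwen/Qwen1.5-{size}-Chat{QUANT_SUFFIXES[quant]}"


def load_quantized(size: str, quant: str):
    """Load a checkpoint by repo ID (downloads weights; needs the matching quantization backend)."""
    from transformers import AutoModelForCausalLM  # lazy import keeps the helper above dependency-free
    return AutoModelForCausalLM.from_pretrained(qwen15_chat_repo(size, quant), device_map="auto")
```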

Deploy with Inference Frameworks: Integrate Qwen 1.5 with popular frameworks like vLLM and SGLang for easy deployment.

```shell
python -m vllm.entrypoints.openai.api_server --model Qwen/Qwen1.5-7B-Chat
```

Run Locally: For local execution, use llama.cpp, which supports Qwen 1.5, together with the GGUF quantized models from the Hugging Face model hub.

Create a Web Demo: Set up a local web demo using Text generation web UI for interactive experiences.

Advanced Developers and Downstream Applications:

For Advanced Development: If you're looking to perform more complex tasks like post-training, Qwen 1.5 supports the Hugging Face Trainer and Peft. Frameworks such as LLaMA-Factory and Axolotl also facilitate supervised fine-tuning and alignment techniques.
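Parameter-efficient methods such as LoRA, one of the techniques Peft provides, are what make fine-tuning practical on modest hardware. For a d × k weight matrix, LoRA freezes the original weights and trains two small matrices of rank r. These are B (d × r) and A (r × k), so the number of trainable values drops from d·k to r·(d + k). The dimensions below are hypothetical and chosen only for illustration.

```python
# Back-of-the-envelope LoRA arithmetic for a single d x k weight matrix.
# LoRA trains B (d x r) and A (r x k) instead of the full matrix.

def full_params(d: int, k: int) -> int:
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    return r * (d + k)


if __name__ == "__main__":
    d = k = 4096  # hypothetical layer dimensions
    r = 8         # hypothetical LoRA rank
    full, lora = full_params(d, k), lora_params(d, k, r)
    print(f"full: {full:,}  LoRA: {lora:,}  trainable fraction: {lora / full:.4%}")
```

The real savings depend on which layers you adapt and the rank you choose. Both are set in Peft's configuration rather than fixed by the model.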

Downstream Applications: Qwen 1.5 is versatile enough to be used in a variety of applications. You can serve it behind an OpenAI-compatible API or run it as a local model, then integrate it with frameworks like LlamaIndex, LangChain, and CrewAI.

Example: Using vLLM for Deployment

```shell
# Start the API server using vLLM
python -m vllm.entrypoints.openai.api_server --model Qwen/Qwen1.5-7B-Chat

# In a second terminal, use curl to interact with your model
curl http://localhost:8000/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "Qwen/Qwen1.5-7B-Chat",
    "messages": [
      {"role": "system", "content": "You are a helpful assistant."},
      {"role": "user", "content": "Tell me something about large language models."}
    ]
  }'
```
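The same request can be sent from Python with only the standard library. This sketch assumes the vLLM server from the example is listening on localhost:8000. It also assumes the reply follows the OpenAI chat-completions shape, with the text in choices[0].message.content. build_payload and chat are hypothetical helpers.

```python
import json
import urllib.request

# Assumption: the vLLM OpenAI-compatible server from the example above is running locally.
BASE_URL = "http://localhost:8000/v1/chat/completions"


def build_payload(user_message: str, model: str = "Qwen/Qwen1.5-7B-Chat") -> dict:
    """Mirror the JSON body of the curl example."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_message},
        ],
    }


def chat(user_message: str, url: str = BASE_URL) -> str:
    """POST the request and return the assistant's reply text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(build_payload(user_message)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, chat("Tell me something about large language models.") should return the reply as a string. Because the endpoint is OpenAI-compatible, frameworks like LangChain or LlamaIndex can be pointed at the same URL.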

Running Qwen 1.5 Locally with llama.cpp

- For a local run, llama.cpp has been updated to support Qwen 1.5, allowing you to leverage quantized models easily.

```shell
./main -m qwen1.5-7b-chat-q2_k.gguf -n 512 --color -i -cml -f prompts/chat-with-qwen.txt
```

Run Qwen 1.5 with Ollama

- With Ollama's support, running the model can be as simple as a single command line:

```shell
ollama run qwen
```

Building a Web Demo

- To create a web-based demonstration, the Text Generation Web UI is recommended for its ease of use and interactivity.

Training with Qwen 1.5

- For those looking to train or fine-tune models, Qwen 1.5 is compatible with the Hugging Face Trainer and Peft, along with LLaMA-Factory and Axolotl for advanced training techniques.

Conclusion

Qwen 1.5 represents a significant advancement in the AI community's ability to develop, deploy, and integrate cutting-edge language models. With its comprehensive support for various platforms and frameworks, along with an emphasis on developer experience, Qwen 1.5 is poised to fuel a new wave of innovation and application in the field of AI. Whether you're a researcher, a developer, or an AI enthusiast, Qwen 1.5 offers the tools and capabilities to push the boundaries of what's possible with language models.
