In the bustling heart of Tokyo, amidst the neon-lit skyline and the relentless rhythm of daily life, I found myself facing a challenge that seemed insurmountable. As a budding digital artist, I was on a quest to create a series of artworks that could blend the vivid chaos of urban life with the nuanced expressions of human emotion. But the complexity of capturing such detail and depth in my images was daunting. It was during this time of creative turmoil that I stumbled upon Ollama Vision and its LLaVA models. Suddenly, the possibilities seemed endless.

Ollama is a tool for running large language models locally, and its model library includes vision models that can reason about images as well as text. The update of its LLaVA models to version 1.6 was a game-changer for me: it brought support for higher image resolutions (up to 4x more pixels), better text recognition and reasoning, and more permissive licenses. That meant I could work with finer detail in my art and layer narrative and meaning into each piece far more easily.

Prerequisites and Installation

Before diving into the world of Ollama Vision, there are a few steps to ensure you're ready to embark on this journey:

- System Requirements: Ollama runs on macOS and Linux. At the time of writing, Windows support was still on the way, promising to bring Ollama to an even broader audience.

- Installation Process: Getting Ollama up and running is straightforward. Visit the official Ollama website, download the package for your operating system, and follow the platform-specific instructions there. Check that your machine meets the listed prerequisites first to avoid problems during installation.

The process is designed to be user-friendly, but if you do get stuck, the Ollama community and documentation are invaluable resources. Whether you're a seasoned developer or a digital artist like me venturing into advanced image analysis and creation, Ollama Vision opens up a world where the only limit is your imagination.

For more detailed installation instructions and system requirements, please refer to the official Ollama documentation.
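Once Ollama is installed, it runs a local server with an HTTP API. As a quick sanity check, the sketch below queries the `/api/tags` endpoint, which lists the models already pulled to your machine, and reports whether any LLaVA model is available. It assumes the server is on its default address, `http://localhost:11434`; the helper names are illustrative, not part of Ollama.

```python
import json
import urllib.request

# Assumption: the Ollama server is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def fetch_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> dict:
    """GET /api/tags, which lists the models already pulled to this machine."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)


def installed_llava_tags(tags_response: dict) -> list[str]:
    """Pick out LLaVA model names (e.g. 'llava:13b') from an /api/tags response."""
    return [
        m["name"] for m in tags_response.get("models", [])
        if m.get("name", "").startswith("llava")
    ]


def llava_status(base_url: str = OLLAMA_URL) -> str:
    """Summarize whether the server is reachable and which LLaVA models it has."""
    try:
        tags = installed_llava_tags(fetch_local_models(base_url))
    except OSError as err:  # URLError and socket timeouts are OSError subclasses
        return f"Could not reach Ollama at {base_url}: {err}"
    if not tags:
        return "Ollama is running, but no LLaVA model is pulled yet (try: ollama pull llava:7b)"
    return "LLaVA models available: " + ", ".join(tags)
```

Calling `print(llava_status())` after installation tells you whether the server is up and which LLaVA variants are ready to use.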

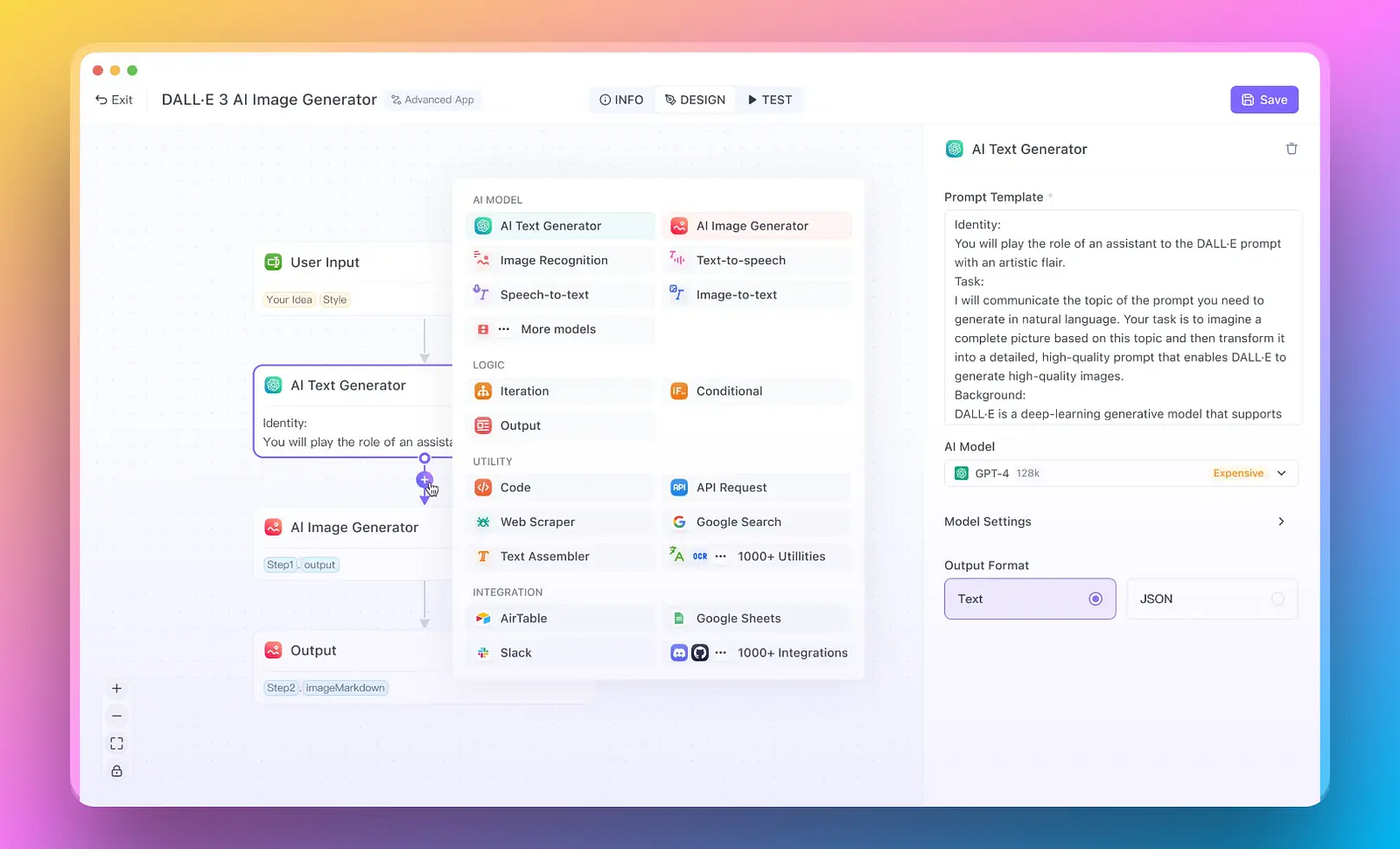

But what if you just need to build quick AI Apps? And do not want to waste time with the hustle?

Here you go: Anakin AI is the best No Code AI App Builder on the market. Build any AI Agents with multi-model support for your own data and workflow!

Getting Started with LLaVA Models in Ollama Vision

Working with LLaVA models starts with understanding what they can do and how to run them. LLaVA (Large Language-and-Vision Assistant) models combine a vision encoder with a language model, and Ollama offers them in a range of parameter sizes to suit different needs and hardware.

Parameter Sizes and Initialization

Choosing a LLaVA model is like choosing the right lens for your camera: the parameter size strongly affects the model's speed, memory use, and output quality. The models come in three distinct sizes:

- 7B Parameters: This version is the most accessible, designed for those who require efficiency and speed without needing the utmost depth in analysis. It's an excellent starting point for general purposes.

- 13B Parameters: Stepping up, the 13B model offers a balanced mix of performance and depth, suitable for more detailed image analysis and complex text recognition tasks.

- 34B Parameters: At the pinnacle, the 34B model is a powerhouse of precision and depth, crafted for those who demand the highest level of detail and analytical capability.

You start any of these models with the `ollama run` command. For example, to run the 13B model, enter:

```shell
ollama run llava:13b
```

If the model is not yet on your machine, Ollama downloads it first and then opens an interactive prompt, setting the stage for the many tasks you can accomplish with LLaVA models.
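Because the model tag is just a string, a script can choose it programmatically. The hypothetical helper below maps the three sizes to `llava:<size>` tags and picks the largest one that fits in a given amount of RAM. The thresholds are an assumption loosely based on the general guidance in Ollama's README (roughly 8 GB for 7B, 16 GB for 13B, and 32 GB for 33B-class models), not measured requirements for LLaVA.

```python
# Hypothetical RAM thresholds in GB, loosely following Ollama's general README guidance.
# Real requirements depend on quantization, image size, and context length.
LLAVA_SIZES = {"7b": 8, "13b": 16, "34b": 32}


def llava_tag(size: str) -> str:
    """Build an Ollama model tag such as 'llava:13b', rejecting unknown sizes."""
    size = size.lower()
    if size not in LLAVA_SIZES:
        raise ValueError(f"unknown LLaVA size {size!r}; expected one of {list(LLAVA_SIZES)}")
    return f"llava:{size}"


def pick_llava_tag(available_ram_gb: float) -> str:
    """Pick the largest LLaVA variant whose assumed RAM threshold fits."""
    fitting = [size for size, need in LLAVA_SIZES.items() if need <= available_ram_gb]
    if not fitting:
        raise ValueError("not enough memory for any LLaVA variant under these assumptions")
    # Dicts keep insertion order (smallest to largest), so the last fit is the biggest.
    return llava_tag(fitting[-1])
```

For instance, `pick_llava_tag(16)` returns `"llava:13b"`, which you could then pass to `ollama run`.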

Model Capabilities

The LLaVA models are not just about size; they're about the scope of possibilities they unlock. With these models, you're equipped to delve into complex visual and textual landscapes, uncovering details and insights that were previously out of reach.

Object Detection: Imagine being able to look at an image and quickly identify and classify the objects within it. LLaVA models can recognize and name objects in images, from the mundane to the obscure, which makes them valuable tools for tasks ranging from content moderation to data annotation for machine learning datasets.

Text Recognition: The ability to recognize and interpret text within images opens up a new dimension of interaction with digital content. Whether it's extracting text from street signs in a bustling cityscape or deciphering handwritten notes in an artist's journal, LLaVA models stand ready to bridge the gap between the visual and the textual.

Each of these capabilities not only enhances how we interact with digital content but also paves the way for innovative applications across various fields. From aiding researchers in data analysis to assisting artists in infusing layers of narrative into their work, the potential is boundless.

As you embark on this journey with LLaVA models, remember that each command you execute and each image you analyze contributes to a larger narrative of exploration and discovery, powered by the advanced capabilities of Ollama Vision.

How to Use Ollama Vision

Diving into Ollama Vision and leveraging the capabilities of LLaVA models can transform how you interact with and analyze images. Whether you're a developer, a data scientist, or an artist, integrating these models into your workflow can significantly enhance your projects. Below is a step-by-step guide on how to use LLaVA models from the command line interface (CLI), a versatile and powerful tool in your arsenal.

a. CLI Usage

The command line offers a direct and efficient way to interact with LLaVA models, making it ideal for scripting and automation tasks. Here's how you can use it to analyze images and generate descriptions:

Open your Terminal or Command Line Interface: Ensure you have Ollama installed on your system. If you haven't installed it yet, refer back to the installation section.

Navigate to Your Project Directory: Use the cd command to change your current directory to where your target image is located or where you want the output to be saved.

Execute the Ollama Run Command: To analyze an image and generate a description, use the following syntax:

```shell
ollama run llava:<model_size> "describe this image: ./path/to/your/image.jpg"
```

- Replace `<model_size>` with the desired LLaVA model parameter size (e.g., `7b`, `13b`, or `34b`).
- Adjust `./path/to/your/image.jpg` to the relative or absolute path of your image file.

For example, to describe an image named art.jpg using the 13B model, your command would look like this:

```shell
ollama run llava:13b "describe this image: ./art.jpg"
```

View the Results: After executing the command, the model will process the image and output a description. This description will appear directly in your terminal, providing insights into the content and context of the image.
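If you would rather capture the description in a program than read it in the terminal, the same CLI call can be made from Python with the standard `subprocess` module. This is a minimal sketch that assumes the `ollama` executable is on your `PATH`; `describe_cmd` and `describe_image` are illustrative names.

```python
import subprocess
from pathlib import Path


def describe_cmd(image_path: str, model: str = "llava:13b",
                 prompt: str = "describe this image:") -> list[str]:
    """Build the argument list for: ollama run <model> "<prompt> <image_path>"."""
    # The image path is embedded in the prompt text, exactly as in the CLI examples.
    return ["ollama", "run", model, f"{prompt} {image_path}"]


def describe_image(image_path: str, model: str = "llava:13b",
                   prompt: str = "describe this image:") -> str:
    """Run the CLI once and return the model's text output."""
    path = Path(image_path)
    if not path.is_file():
        raise FileNotFoundError(image_path)
    # Use an absolute path so the command works regardless of the current directory.
    result = subprocess.run(
        describe_cmd(str(path.resolve()), model, prompt),
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()
```

Changing the `prompt` argument, for example `describe_image("./art.jpg", prompt="what mood does this image convey?")`, is an easy way to try the different kinds of questions described next.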

Experiment with Different Commands: Beyond simple descriptions, you can experiment with various prompts to explore other aspects of the image, such as asking about the mood, identifying specific elements, or even generating creative interpretations.

Tips for Effective CLI Usage

- Batch Processing: If you have multiple images to analyze, consider scripting the commands in a batch file or shell script. This approach allows you to automate the analysis of a large set of images efficiently.

- Custom Prompts: Tailor your prompts to fit the specific information you're seeking from the image. The LLaVA models are versatile and can handle a wide range of queries.

- Handling Output: For advanced use cases, you might want to send the output to a file or another program for further processing. Standard shell redirection does this, for example appending `> description.txt` to the command.
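The batch-processing and output-handling tips above can be combined in a short Python script. This sketch, again assuming `ollama` is on your `PATH`, finds the `.jpg`, `.jpeg`, and `.png` files in one folder, runs the CLI once per image, and saves each description next to its image. The folder layout, file naming, and function names are hypothetical choices for illustration.

```python
import subprocess
from pathlib import Path

# Extensions treated as images in this sketch (an assumption; adjust as needed).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def find_images(folder: str) -> list[Path]:
    """Return the image files directly inside `folder`, sorted by path."""
    return sorted(
        p for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def describe_folder(folder: str, model: str = "llava:7b",
                    prompt: str = "describe this image:") -> list[Path]:
    """Describe each image with the Ollama CLI and save the text beside it."""
    written = []
    for image in find_images(folder):
        result = subprocess.run(
            ["ollama", "run", model, f"{prompt} {image.resolve()}"],
            capture_output=True, text=True, check=True,
        )
        # art.jpg -> art.jpg.txt, so art.jpg and art.png never overwrite each other
        out_file = image.with_name(image.name + ".txt")
        out_file.write_text(result.stdout.strip() + "\n", encoding="utf-8")
        written.append(out_file)
    return written
```

The smaller `llava:7b` default keeps large batches fast; switch to a bigger tag when detail matters more than throughput.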

By following these steps and tips, you can harness the power of Ollama Vision and LLaVA models directly from your command line, opening up a world of possibilities for image analysis and interpretation. Whether you're conducting research, developing applications, or creating art, these tools provide a new dimension of interaction with digital imagery.

b. Python Sample Code

The `ollama` Python package (installed with `pip install ollama`) provides an `ollama.chat` function that sends messages, including images, to a model running on your local Ollama server and returns its reply. Here's a sample Python script that sends an image for analysis and prints the description:

```python
import ollama

# Define the path to your image
image_path = './path/to/your/image.jpg'

# Prepare the message to send to the LLaVA model;
# 'images' takes a list of image file paths (base64 strings also work)
message = {
    'role': 'user',
    'content': 'Describe this image:',
    'images': [image_path],
}

# Use ollama.chat to send the image to the local Ollama server and get the reply
response = ollama.chat(
    model="llava:13b",  # Specify the desired LLaVA model size
    messages=[message],
)

# The reply is a single message, so read its content directly
print(response['message']['content'])
```

In this script:

- Replace `./path/to/your/image.jpg` with the actual path to your image file.
- Adjust `llava:13b` to the specific LLaVA model size you intend to use (`7b`, `13b`, or `34b`).
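For longer descriptions you may prefer to print text as it is generated. The `ollama` package supports this with `stream=True`, in which case `ollama.chat` returns an iterator of partial responses, each carrying a text fragment in `chunk['message']['content']`. The sketch below keeps the joining logic separate from the network call so it can be tried without a server; `join_chunks` and `stream_description` are hypothetical helper names.

```python
from collections.abc import Iterable, Mapping


def join_chunks(chunks: Iterable[Mapping], echo: bool = False) -> str:
    """Concatenate streamed chat fragments, optionally printing each as it arrives."""
    parts = []
    for chunk in chunks:
        piece = chunk["message"]["content"]
        if echo:
            print(piece, end="", flush=True)
        parts.append(piece)
    return "".join(parts)


def stream_description(image_path: str, model: str = "llava:13b") -> str:
    """Stream a description of `image_path` from the local Ollama server."""
    import ollama  # imported here so join_chunks works without the package installed

    stream = ollama.chat(
        model=model,
        messages=[{"role": "user", "content": "Describe this image:", "images": [image_path]}],
        stream=True,
    )
    return join_chunks(stream, echo=True)
```

Calling `stream_description('./path/to/your/image.jpg')` prints the description word by word and also returns the full text.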

c. JavaScript Sample Code

In JavaScript, the `ollama` npm package (installed with `npm install ollama`) exposes the same `chat` method. It returns a promise, so you call it with `await` inside an `async` function. The following snippet analyzes an image and logs its description:

```javascript
import ollama from 'ollama';

async function describeImage(imagePath) {
  // Prepare the message to send to the LLaVA model
  const message = {
    role: 'user',
    content: 'Describe this image:',
    images: [imagePath]
  };

  // Use ollama.chat to send the image and retrieve the description
  const response = await ollama.chat({
    model: "llava:13b", // Specify the desired LLaVA model size
    messages: [message]
  });

  // The reply is a single message, so log its content directly
  console.log(response.message.content);
}

// Replace './path/to/your/image.jpg' with the path to your image file
describeImage('./path/to/your/image.jpg').catch(console.error);
```

In this JavaScript example:

- Replace `'./path/to/your/image.jpg'` with the actual path to your image file.
- Modify `"llava:13b"` to select the LLaVA model size you wish to use (`7b`, `13b`, or `34b`).

These examples in Python and JavaScript demonstrate how straightforward it is to integrate LLaVA models into your projects, enabling advanced image analysis capabilities with minimal code. Whether you're developing a web application, conducting research, or creating digital art, these snippets serve as a foundation for exploring the vast potential of Ollama Vision.

Advanced Usage and Examples for LLaVA Models in Ollama Vision

When you venture beyond basic image descriptions with Ollama Vision's LLaVA models, you unlock a realm of advanced capabilities such as object detection and text recognition within images. These functionalities are invaluable for a wide range of applications, from developing interactive AI-driven tools to conducting detailed visual research.

Object Detection

Object detection involves identifying and classifying individual items within an image. This can range from recognizing everyday objects like furniture and vehicles to more specialized items such as wildlife species or technical components.

Sample Command:

```shell
ollama run llava:13b "identify and classify objects in this image: ./cityscape.jpg"
```

Expected Output:

The model might return a detailed list of identified objects, such as "traffic lights, pedestrian crossing signs, cars, bicycles, and trees," along with possible classifications or characteristics, such as "sedan, SUV, deciduous tree."
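Free-form answers are fine for reading, but a script usually needs structure. One approach, sketched below, is to ask for a JSON reply and pass `format="json"` to `ollama.chat`, which constrains the output to valid JSON. The prompt wording and the `{"objects": [...]}` shape are assumptions of this example, and the model may still drift from them, so the parser skips anything malformed. Treat the result as the model's best guess, not as calibrated detections with bounding boxes.

```python
import json

DETECTION_PROMPT = (
    "List the objects you can see in this image. Reply only with JSON of the form "
    '{"objects": [{"name": "...", "category": "..."}]}.'
)


def parse_objects(reply: str) -> list[dict]:
    """Extract name/category pairs from the model's JSON reply, skipping malformed items."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return []
    items = data.get("objects", []) if isinstance(data, dict) else []
    if not isinstance(items, list):
        return []
    return [
        {"name": str(item["name"]), "category": str(item.get("category", ""))}
        for item in items
        if isinstance(item, dict) and "name" in item
    ]


def detect_objects(image_path: str, model: str = "llava:13b") -> list[dict]:
    """Ask a LLaVA model for a JSON object list (requires a running Ollama server)."""
    import ollama

    response = ollama.chat(
        model=model,
        messages=[{"role": "user", "content": DETECTION_PROMPT, "images": [image_path]}],
        format="json",
    )
    return parse_objects(response["message"]["content"])
```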

Text Recognition

Text recognition, or Optical Character Recognition (OCR), allows the model to detect and interpret text embedded in images. This can be particularly useful for digitizing written documents, extracting information from signage in images, or analyzing graphical content that includes text.

Sample Command:

```shell
ollama run llava:34b "extract and interpret text from this image: ./handwritten_notes.jpg"
```

Expected Output:

The model could output the transcribed text, possibly noting its confidence level or highlighting areas of uncertainty. For handwritten notes, it might also provide context or interpretation based on the surrounding content.
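For transcription it can help to ask for verbatim output and to reduce randomness. The sketch below builds keyword arguments for `ollama.chat`, setting the `temperature` option to 0 so repeated runs are more consistent. The prompt wording and the `[?]` marker for unreadable words are assumptions of this example; any uncertainty the model reports this way is part of its generated text, not a measured confidence score.

```python
OCR_PROMPT = (
    "Transcribe all text in this image exactly as written, preserving line breaks. "
    "Write [?] in place of any word you cannot read."
)


def ocr_request(image_path: str, model: str = "llava:34b") -> dict:
    """Build keyword arguments for ollama.chat(**kwargs) to transcribe an image."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": OCR_PROMPT, "images": [image_path]}],
        # A temperature of 0 makes the transcription less random between runs.
        "options": {"temperature": 0},
    }


def unreadable_count(transcript: str) -> int:
    """Count the [?] placeholders the prompt asked the model to use."""
    return transcript.count("[?]")
```

With a running server, `ollama.chat(**ocr_request("./handwritten_notes.jpg"))["message"]["content"]` returns the transcription, and `unreadable_count` gives a rough sense of how much the model could not read.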

Providing Images to Models

The LLaVA models can process images provided in different formats, catering to the flexibility required in various development environments.

CLI: In the command line interface, images are typically provided via file paths directly in the command, as shown in the sample commands above. This method is straightforward and effective for scripts and batch processing.

Python and JavaScript: Besides file paths, the Python and JavaScript libraries also accept images as base64-encoded strings. This approach is particularly useful when dealing with images sourced from the web or generated within the application.

Python Example:

```python
import base64

# Function to encode image file to base64
def encode_image_to_base64(image_path):
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode('utf-8')

image_base64 = encode_image_to_base64('./path/to/your/image.jpg')
```

JavaScript Example:

```javascript
const fs = require('fs');

// Function to encode image file to base64
function encodeImageToBase64(imagePath) {
  return fs.readFileSync(imagePath, { encoding: 'base64' });
}

const imageBase64 = encodeImageToBase64('./path/to/your/image.jpg');
```

In these examples, the image file is read and converted to a base64-encoded string, which can then be included in the message payload sent to the LLaVA model for analysis.
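To see where such a string ends up without any client library, the sketch below posts it to Ollama's REST endpoint `POST /api/generate`, whose request body takes an `images` array of base64-encoded images. It uses only the standard library and assumes the server is at the default `http://localhost:11434`. With `"stream": false`, the server replies with a single JSON object whose `response` field holds the generated text.

```python
import base64
import json
import urllib.request

# Assumption: Ollama's default local address.
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"


def build_payload(image_bytes: bytes, prompt: str = "Describe this image:",
                  model: str = "llava:13b") -> bytes:
    """Base64-encode the image and wrap it in a JSON body for /api/generate."""
    body = {
        "model": model,
        "prompt": prompt,
        "images": [base64.b64encode(image_bytes).decode("utf-8")],
        "stream": False,  # ask for one JSON object instead of a stream of lines
    }
    return json.dumps(body).encode("utf-8")


def describe_bytes(image_bytes: bytes, url: str = OLLAMA_GENERATE_URL) -> str:
    """POST the payload to a running Ollama server and return the generated text."""
    request = urllib.request.Request(
        url,
        data=build_payload(image_bytes),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=300) as resp:
        return json.load(resp)["response"]
```

Because it works on raw bytes, `describe_bytes` suits images downloaded from the web or generated in memory, for example `describe_bytes(Path("art.jpg").read_bytes())` after importing `Path` from `pathlib`.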

These advanced use cases and methods of providing images open up a wide range of possibilities for integrating Ollama Vision into your projects, enabling sophisticated visual analysis and interaction within your applications.

Conclusion

The journey through Ollama Vision doesn't just stop at analyzing images; it's about unlocking a new realm of possibilities where technology meets art, data analysis, and beyond. The ease with which we can now interpret, understand, and interact with visual data is paving the way for groundbreaking applications across various fields, from digital art and design to scientific research and automated content moderation.


As we wrap up this exploration, it's clear that the fusion of large language-and-vision models like LLaVA with intuitive platforms like Ollama is not just enhancing our current capabilities but also inspiring a future where the boundaries of what's possible are continually expanded. Whether you're a seasoned developer, a researcher, or an artist at heart, the tools and examples provided here serve as a foundation for you to build upon, experiment, and create something truly innovative.