LangChain agents let a language model decide which tools to call, and in what order, to complete a task. This guide explains what agents are and shows how to query a SQL database with the LangChain database agent. It then compares classic LangChain agents with LangGraph and walks through how an agent decides which tool to use.

What are LangChain Agents?

LangChain agents let you build assistants that can carry out multi-step tasks. An agent uses a language model as its reasoning engine. Given the user's input and a set of predefined tools, the model decides which action to take next. The agent executes that action and feeds the result back to the model, and this repeats until the model can produce a final answer.

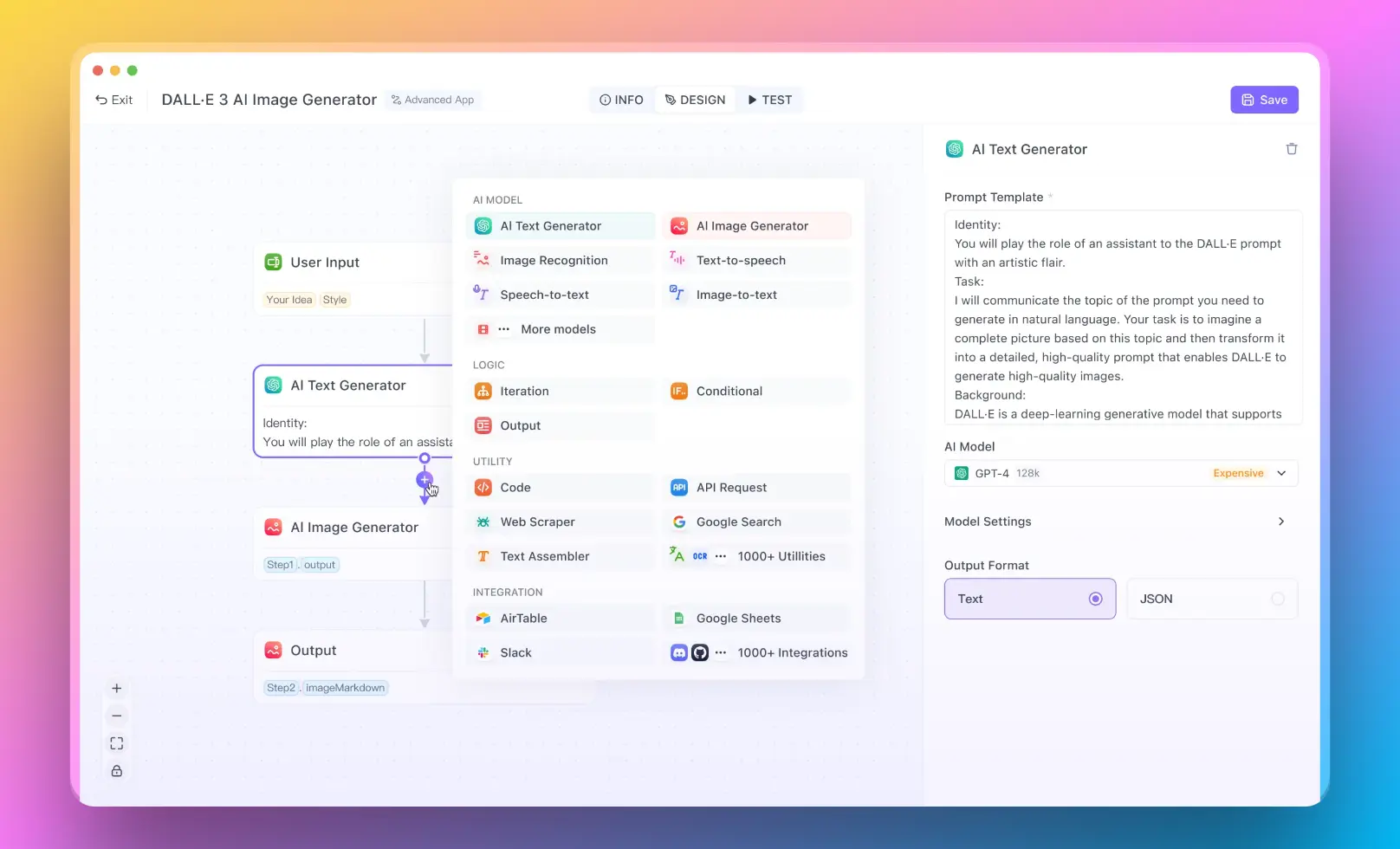


Benefits of LangChain Agents

- Flexibility: LangChain agents can be customized to suit a wide range of use cases, from answering questions to performing multi-step tasks.

- Extensibility: You can easily add new tools and capabilities to your LangChain agents, allowing them to grow and adapt as your needs evolve.

- Efficiency: By automating complex tasks, LangChain agents can save you time and effort, allowing you to focus on higher-level objectives.

The LangChain Database Agent

The LangChain database agent is a specialized agent designed to interact with databases. It can understand natural language queries, translate them into SQL statements, and retrieve the requested information from the database.

How to Use the LangChain Database Agent

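- Install the necessary dependencies. The sqlite3 module ships with Python's standard library, so you do not need to install it with pip:

```shell
pip install langchain langchain-community langchain-openai
```

- Create a new database agent:

```python
from langchain_community.agent_toolkits import SQLDatabaseToolkit, create_sql_agent
from langchain_community.utilities import SQLDatabase
from langchain_openai import ChatOpenAI

# Connect through a SQLAlchemy URI that points at your SQLite file
db = SQLDatabase.from_uri("sqlite:///path/to/your/database.db")

# temperature=0 keeps the generated SQL as consistent as possible;
# ChatOpenAI reads the API key from the OPENAI_API_KEY environment variable
llm = ChatOpenAI(temperature=0)

# The toolkit needs the LLM as well as the database
toolkit = SQLDatabaseToolkit(db=db, llm=llm)

agent_executor = create_sql_agent(llm=llm, toolkit=toolkit, verbose=True)
```

- Use the agent to query the database:

```python
# Assumes the database contains a users table with an age column
result = agent_executor.invoke({"input": "What is the average age of users in the database?"})
print(result["output"])
```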
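It helps to see what the agent does between the question and the answer. The toolkit gives the model a handful of database tools: roughly, one to list the tables, one to read a table's schema, and one to run a query. The model then writes the SQL itself. The sketch below reproduces those steps using only Python's built-in sqlite3 module. The users table and its rows are made-up sample data. translate_to_sql is a hypothetical stand-in for the language model and returns a hard-coded query.

```python
import sqlite3

# Hypothetical sample data standing in for your real database
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, age INTEGER)")
conn.executemany(
    "INSERT INTO users (name, age) VALUES (?, ?)",
    [("Ada", 36), ("Grace", 45), ("Linus", 27)],
)


def list_tables(conn: sqlite3.Connection) -> list[str]:
    # Step 1: discover which tables exist
    rows = conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'").fetchall()
    return [name for (name,) in rows]


def get_schema(conn: sqlite3.Connection, table: str) -> str:
    # Step 2: the CREATE TABLE statement is what a model would read to learn the columns
    (sql,) = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = ?", (table,)
    ).fetchone()
    return sql


def translate_to_sql(question: str, schema: str) -> str:
    """Hypothetical stand-in for the LLM, which would generate SQL from the question and schema."""
    return "SELECT AVG(age) FROM users"


question = "What is the average age of users in the database?"
schema = get_schema(conn, list_tables(conn)[0])
sql = translate_to_sql(question, schema)
# Step 3: run the generated query and return the result
(average_age,) = conn.execute(sql).fetchone()
print(sql, "->", average_age)
```

In the real agent, the model may call these tools several times before it answers. For example, it might rewrite a query that raised an error and run it again.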

LangGraph vs LangChain Agent

While both LangGraph and LangChain agents are powerful tools for building intelligent assistants, there are some key differences between them:

- Cyclical Graphs: LangGraph is specifically designed to support cycles, which agent runtimes usually need (call the model, run a tool, call the model again). In LangChain itself, chains are directed acyclic graphs (DAGs), and the classic agent loop is built into the AgentExecutor rather than defined by you.

- State Management: LangGraph provides a more explicit way to manage state throughout the execution of the graph, allowing for greater control and visibility into the agent's decision-making process.

- Interoperability: LangGraph is built on top of LangChain and is fully interoperable with the LangChain ecosystem, making it easy to integrate with existing LangChain components.


Cyclical Graphs

One of the main advantages of LangGraph is its ability to support cyclical graphs. In many real-world scenarios, agent runtimes require the ability to handle loops and recursive processes. LangGraph is specifically designed to accommodate these needs, making it a more suitable choice for complex, multi-step tasks.

Here's an example of how you can define a cyclical graph in LangGraph:

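```python
# Requires: pip install langgraph
import operator
from typing import Annotated, TypedDict

from langgraph.graph import END, StateGraph


class State(TypedDict):
    count: int
    steps: Annotated[list, operator.add]  # each node's entries are appended


def node1(state: State) -> dict:
    # Node logic goes here; return only the keys to update
    return {"count": state["count"] + 1, "steps": ["node1"]}


def node2(state: State) -> dict:
    return {"steps": ["node2"]}


def should_continue(state: State) -> str:
    # Without a stop condition, the cycle would run until the recursion limit
    return "node1" if state["count"] < 3 else END


graph = StateGraph(State)
graph.add_node("node1", node1)
graph.add_node("node2", node2)
graph.set_entry_point("node1")
graph.add_edge("node1", "node2")
graph.add_conditional_edges("node2", should_continue, {"node1": "node1", END: END})  # the cycle
app = graph.compile()

result = app.invoke({"count": 0, "steps": []})
```

In this example, we register two nodes and connect node1 to node2 with a normal edge. The conditional edge from node2 creates the cycle. It routes back to node1 while count is below 3 and to END otherwise. LangGraph keeps executing the loop until that condition ends it, so result["steps"] records node1 and node2 three times each.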

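Classic LangChain, by contrast, builds chains as directed acyclic graphs (DAGs). The agent loop it does provide is fixed inside the AgentExecutor rather than defined by you. DAGs suit many pipelines, but they cannot route a step back to an earlier one, so custom cyclical processing is a better fit for LangGraph.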
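Python's standard-library graphlib module makes the difference easy to see. This is a generic illustration, not LangGraph or LangChain code. A topological sort gives a DAG a single execution order, and the same sort rejects the graph once a back edge is added. Running a cycle instead needs an explicit stopping condition, which is a hypothetical "good enough" check here.

```python
from graphlib import CycleError, TopologicalSorter

# A DAG: each node maps to the set of nodes it depends on
dag = {"retrieve": set(), "summarize": {"retrieve"}, "answer": {"summarize"}}
order = list(TopologicalSorter(dag).static_order())
print(order)  # ['retrieve', 'summarize', 'answer']

# Making "retrieve" depend on "answer" adds a back edge, so no valid order exists
cyclic = {**dag, "retrieve": {"answer"}}
try:
    list(TopologicalSorter(cyclic).static_order())
except CycleError:
    print("cycle detected: a topological order cannot express the loop")


def run_cycle(rounds_needed: int, max_rounds: int = 10) -> list[str]:
    """Execute the cyclic graph round by round until a stop condition holds."""
    trace: list[str] = []
    for round_number in range(1, max_rounds + 1):
        trace.extend(["retrieve", "summarize", "answer"])
        # Hypothetical stop condition: the answer is "good enough" after rounds_needed rounds
        if round_number >= rounds_needed:
            break
    return trace


print(run_cycle(rounds_needed=2))  # the three steps, executed twice
```

The max_rounds cap plays the same role as a recursion or iteration limit. It guarantees the loop terminates even if the stop condition is never met.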

State Management

Another key difference between LangGraph and LangChain agents is how they handle state management. LangGraph provides a more explicit way to manage state throughout the execution of the graph. This allows for greater control and visibility into the agent's decision-making process.

In LangGraph, you declare the state schema up front, and each node returns updates to it as the graph executes. Here's an example:

```python
from typing import TypedDict

from langgraph.graph import END, StateGraph


class State(TypedDict):
    counter: int


def increment(state: State) -> dict:
    # Read the current state and return only the keys that change
    return {"counter": state["counter"] + 1}


graph = StateGraph(State)
graph.add_node("node1", increment)
graph.add_node("node2", increment)
graph.set_entry_point("node1")
graph.add_edge("node1", "node2")
graph.add_edge("node2", END)
app = graph.compile()

initial_state = {"counter": 0}
final_state = app.invoke(initial_state)
print(final_state["counter"])  # 2
```

In this example, the State schema declares a counter key, which starts at 0. Each node reads the current state and returns only the keys it wants to change. LangGraph merges those updates into the state, and invoke returns the final state once the graph reaches END.
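In LangGraph, each node's return value is a partial update that is merged into the shared state. Keys declared without a reducer are overwritten, so the last write wins. Keys annotated with a reducer, such as operator.add on a list, are combined with the existing value. The stdlib-only sketch below models that merge rule. apply_update is a hypothetical helper written for illustration and is not part of LangGraph's API.

```python
import operator
from typing import Any, Callable

Reducer = Callable[[Any, Any], Any]


def apply_update(state: dict, update: dict, reducers: dict[str, Reducer]) -> dict:
    """Merge a node's partial update into the state (a simplified model, not LangGraph's code)."""
    new_state = dict(state)
    for key, value in update.items():
        if key in reducers:
            new_state[key] = reducers[key](state[key], value)  # combine old and new values
        else:
            new_state[key] = value  # default: last write wins
    return new_state


reducers = {"messages": operator.add}
state = {"counter": 0, "messages": []}
state = apply_update(state, {"counter": 1, "messages": ["node1 ran"]}, reducers)
state = apply_update(state, {"messages": ["node2 ran"]}, reducers)
print(state)  # {'counter': 1, 'messages': ['node1 ran', 'node2 ran']}
```

Keys a node leaves out of its update, like counter in the second call, keep their previous value.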

Classic LangChain agents do not expose state this explicitly. Their state lives implicitly in the inputs and outputs of each tool call, which the AgentExecutor accumulates as intermediate steps and feeds back to the model. Any memory component you attach also holds state.

Interoperability

Despite their differences, LangGraph is built on top of LangChain and is fully interoperable with the LangChain ecosystem. This means you can easily integrate LangGraph with existing LangChain components, such as tools, prompts, and memory.

Here's an example of how you can use a LangChain tool within a LangGraph node:

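```python
from typing import TypedDict

from langchain_core.tools import BaseTool
from langgraph.graph import END, StateGraph


class MyTool(BaseTool):
    name: str = "my_tool"
    description: str = "A sample tool"

    def _run(self, tool_input: str) -> str:
        # Tool logic goes here; this placeholder just upper-cases the input
        return tool_input.upper()


class State(TypedDict):
    input: str
    output: str


tool = MyTool()


def my_node(state: State) -> dict:
    # Call the LangChain tool from inside a LangGraph node
    tool_output = tool.invoke(state["input"])
    # Node logic goes here
    return {"output": tool_output}


graph = StateGraph(State)
graph.add_node("my_node", my_node)
graph.set_entry_point("my_node")
graph.add_edge("my_node", END)
app = graph.compile()

result = app.invoke({"input": "hello"})
print(result["output"])  # HELLO
```

In this example, we define a custom LangChain tool called MyTool and call it inside a LangGraph node with the tool's standard invoke method. The node writes the tool's output into the graph state, so later nodes can build on it.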

How LangChain Agents Decide Which Tool to Use

LangChain agents rely on the language model itself to choose a tool. Each tool has a name and a natural-language description, and these are placed in the prompt next to the user's request. For a ReAct-style agent such as the zero-shot-react-description type, the process typically involves the following steps:

Parsing User Input: The model reads the user's request together with the list of available tools. There is no separate NLP pipeline for tokenization, named entity recognition, or intent classification; the model interprets the request directly.

Matching Tools: The model compares the request with the tool descriptions and writes out its choice as text, for example "Action: weather" followed by "Action Input: Paris". An output parser then extracts the tool name and input. Because the choice depends on the descriptions, clear and specific descriptions lead to better tool selection.

Executing the Tool: The agent executor runs the selected tool with the input the model chose and records the result as an observation. That input may be a rephrasing of the user's request rather than the raw text.

Evaluating the Result: The observation is appended to the prompt (the agent's scratchpad), and the model decides whether it now has enough information to answer.

Iterating: If it does not, the model selects another tool, often using the previous observation as part of the next input. The loop ends when the model produces a final answer, or when a safety limit such as the executor's max_iterations setting is reached.
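The loop is easiest to follow in a framework-free sketch. This is plain Python, not LangChain's implementation. scripted_llm is a hypothetical stand-in that returns canned ReAct-style text, and both tools return fixed strings. The sketch shows the shape of the loop. Tool descriptions go into the prompt, and a regex extracts the Action and Action Input. The tool's result is appended as an Observation. The loop stops at a Final Answer or at an iteration limit.

```python
import re
from typing import Callable

# Hypothetical tools: name -> (description shown to the model, function to run)
TOOLS: dict[str, tuple[str, Callable[[str], str]]] = {
    "wikipedia": ("Look up general facts.", lambda q: "Paris is the capital of France."),
    "weather": ("Get today's weather for a city.", lambda city: f"Sunny in {city}."),
}

ACTION_RE = re.compile(r"Action: (\w+)\s*Action Input: (.+)")


def scripted_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model: replies based on what the scratchpad already holds."""
    if "Observation: Paris" not in prompt:
        return "Thought: I need the capital.\nAction: wikipedia\nAction Input: capital of France"
    if "Observation: Sunny" not in prompt:
        return "Thought: Now the weather.\nAction: weather\nAction Input: Paris"
    return "Thought: I know enough.\nFinal Answer: The capital is Paris, and it is sunny there."


def run_agent(question: str, llm: Callable[[str], str], max_iterations: int = 5) -> str:
    tool_list = "\n".join(f"{name}: {desc}" for name, (desc, _) in TOOLS.items())
    scratchpad = ""
    for _ in range(max_iterations):
        prompt = f"Tools:\n{tool_list}\n\nQuestion: {question}\n{scratchpad}"
        reply = llm(prompt)
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(reply)
        if match is None:
            raise ValueError(f"Could not parse model output: {reply!r}")
        name, tool_input = match.group(1), match.group(2).strip()
        # Unknown tool names become an observation the model can react to
        observation = TOOLS[name][1](tool_input) if name in TOOLS else f"{name} is not a valid tool."
        # Record the step so the model sees it on the next iteration
        scratchpad += f"{reply}\nObservation: {observation}\n"
    return "Stopped: iteration limit reached."


print(run_agent("What is the capital of France, and what is the weather like there today?", scripted_llm))
```

Replacing scripted_llm with a real model call is the main change a working version would need. Real model output is also less tidy, so parsing would have to be more forgiving.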

Here's a more detailed example of how an agent might decide which tool to use:

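```python
from langchain.agents import AgentType, initialize_agent
from langchain_core.tools import BaseTool
from langchain_openai import ChatOpenAI


class WikipediaQueryTool(BaseTool):
    name: str = "wikipedia"
    description: str = "Look up general facts on Wikipedia, such as a country's capital. Input: a search query."

    def _run(self, tool_input: str) -> str:
        # Placeholder: replace with a real Wikipedia lookup
        return "Paris is the capital of France."


class WeatherQueryTool(BaseTool):
    name: str = "weather"
    description: str = "Get today's weather for a city. Input: a city name."

    def _run(self, tool_input: str) -> str:
        # Placeholder: replace with a call to a real weather API
        return f"Weather report for {tool_input} goes here."


llm = ChatOpenAI(temperature=0)  # reads OPENAI_API_KEY from the environment
tools = [WikipediaQueryTool(), WeatherQueryTool()]

# initialize_agent is a legacy helper; newer LangChain releases deprecate it
agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)

user_input = "What is the capital of France, and what is the weather like there today?"
result = agent.invoke({"input": user_input})
print(result["output"])
```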

In this example, we define two custom tools: WikipediaQueryTool and WeatherQueryTool. We then initialize an agent with these tools using the initialize_agent function from LangChain.

When the user submits the query, the language model reads it alongside the two tool descriptions. It recognizes that it needs two pieces of information. The capital of France matches the Wikipedia tool, and the weather in that city matches the weather tool.

In a typical run, the agent first calls WikipediaQueryTool to find the capital, then calls WeatherQueryTool with the city it found. The model decides the order at run time, so the exact sequence of calls can vary between runs and models.

Finally, the agent combines the results from both tools to provide a comprehensive answer to the user's query.

This example demonstrates how LangChain agents can intelligently select and orchestrate multiple tools to handle complex, multi-step tasks based on the user's input.

Conclusion

LangChain agents are a powerful tool for building intelligent assistants that can understand natural language, reason about complex tasks, and execute the necessary steps to achieve the desired outcome. By leveraging the power of language models and a set of predefined tools, LangChain agents can help you create flexible, extensible, and efficient AI-powered applications.

Whether you're working with databases, answering questions, or performing multi-step tasks, LangChain agents provide a robust framework for building intelligent assistants that can adapt to your needs. With the added benefits of LangGraph, such as support for cyclical graphs and explicit state management, you have even more control and flexibility in designing your agent runtimes.

So why not explore the possibilities of LangChain agents today? With the knowledge gained from this guide, you're well-equipped to start building your own intelligent assistants and unlocking the full potential of AI-powered applications.