In the world of data science, language models are the new frontier. These information-processing behemoths can perform tasks that were once thought to be uniquely human. From summarizing lengthy articles and generating human-like text to translating languages and answering complex questions, large language models (LLMs) are pushing the boundaries of what machines can do. However, working with these gargantuan models often requires extensive computational resources that are out of reach for many individuals and small teams.

Enter Google Colab.

Imagine you're a data scientist working on your next big project. You have a powerful LLM at hand that can potentially revolutionize your work. But there's just one problem - your local machine doesn't have the muscle to handle the model. You're faced with two options: either invest in expensive hardware upgrades or rent high-performance cloud computing services, both of which can burn a hole in your pocket.

Or, you could use Google Colab.

Google Colab is a cloud-based platform that lets you write and execute Python code in your browser, with zero configuration required. And the best part? The basic tier is free, although its resources are subject to usage limits and availability. Google Colab democratizes access to powerful computational resources, making it possible to run LLMs without incurring hefty costs.

But wait, it gets better. Google Colab also offers the following:

- Free GPU: Google Colab provides free GPU support, drastically speeding up computations and making it feasible to run large models.

- Easy access: It's integrated with Google Drive, allowing you to easily save and share your work.

- User-friendly interface: All of this comes wrapped in a simple, intuitive interface that makes it a breeze to use.

Sounds enticing, doesn't it? But you might be wondering, "Can I really run large language models on Google Colab?" Let's find out.

Can I Run LLMs on Google Colab?

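Absolutely! Google Colab's free tier typically gives you an NVIDIA T4 GPU with roughly 15 GB of usable GPU memory, although the exact hardware varies between sessions and is not guaranteed (older sessions were often assigned a Tesla K80 with 12 GB). That is not enough to train the largest language models, but it is enough to fine-tune smaller models or run predictions with them.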
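To get a feel for what fits, a back-of-the-envelope estimate helps. The sketch below is an illustration only: the function names, the 80% headroom factor, and the example model sizes are assumptions made for this article, and the estimate counts model weights alone. Training needs considerably more memory for gradients and optimizer state, so treat the result as a lower bound.

```python
def estimate_weight_memory_gib(num_parameters: int, bytes_per_parameter: int = 2) -> float:
    """Approximate memory needed just to hold the model weights, in GiB.

    bytes_per_parameter is 4 for 32-bit floats and 2 for 16-bit floats.
    """
    return num_parameters * bytes_per_parameter / 1024**3


def fits_in_gpu(num_parameters: int, gpu_memory_gib: float,
                bytes_per_parameter: int = 2, headroom: float = 0.8) -> bool:
    """Rough check: do the weights fit in a fraction (headroom) of GPU memory?

    The headroom factor is an assumed safety margin for activations and
    other runtime overhead, not a measured value.
    """
    needed = estimate_weight_memory_gib(num_parameters, bytes_per_parameter)
    return needed <= gpu_memory_gib * headroom


# Illustrative model sizes, not tied to any specific published model.
for label, params in [("125M-parameter model", 125_000_000),
                      ("7B-parameter model", 7_000_000_000)]:
    size = estimate_weight_memory_gib(params)
    print(f"{label}: ~{size:.2f} GiB of weights in 16-bit precision")
```

Pass in whatever GPU memory your own session reports as `gpu_memory_gib`; a model that fails this check may still be usable with techniques such as quantization, which this estimate does not cover.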

In fact, there are numerous large language model providers that offer pre-trained models you can leverage:

- OpenAI: OpenAI's GPT family of models can generate impressive human-like text. These models are served through an API rather than downloaded, so your notebook only sends requests to them.

- Azure OpenAI: Microsoft's Azure platform offers OpenAI's models as a cloud service.

- Hugging Face: Hugging Face provides a number of pre-trained models that you can use for a variety of NLP tasks.

- Fireworks AI: Fireworks AI specializes in large language models for enterprise applications.

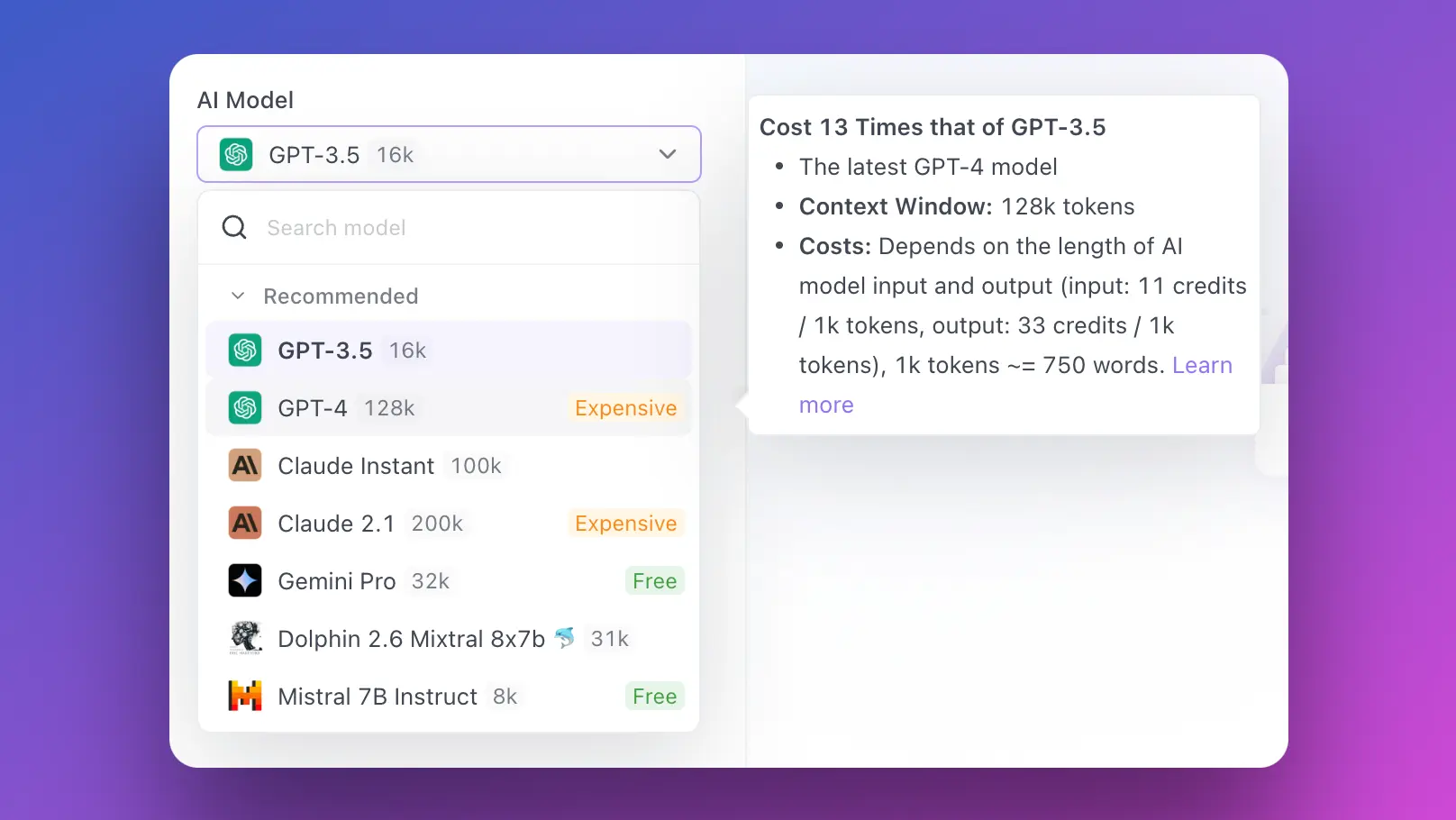

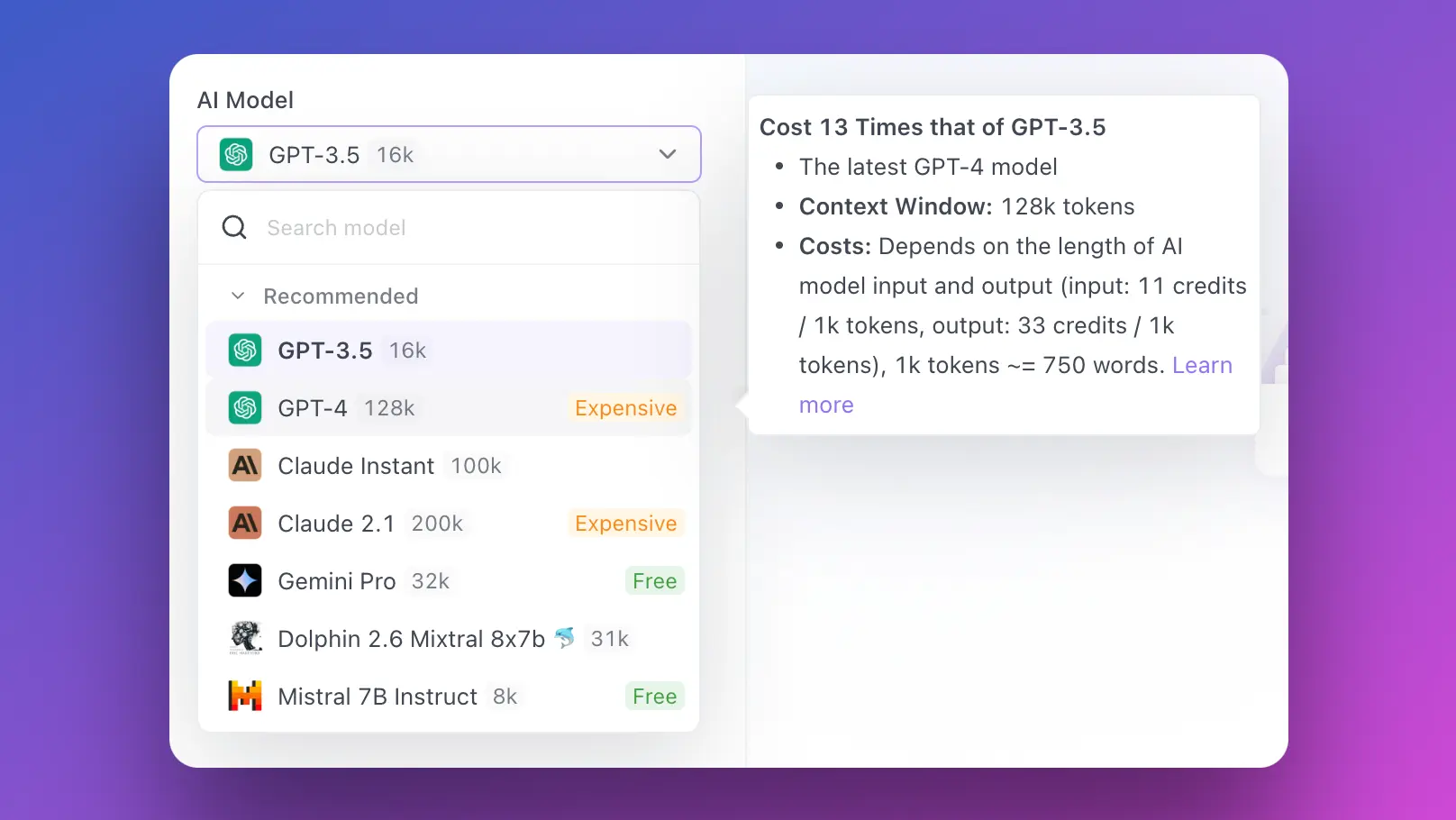

- Anakin AI: A no-code app builder that lets you build AI apps with a wide range of AI models.

But how do you go about setting up your environment in Google Colab to run these models? Let's dive right in.

Setting Up Your Environment in Google Colab

Setting up Google Colab for running LLMs is a simple affair. Here's a step-by-step guide to get you started:

Create a New Notebook: Navigate to Google Colab's website and start a new Python 3 notebook.

Install Required Libraries: Install the necessary Python libraries. For instance, if you're using a Hugging Face model, you'd need to install the transformers library. Run the following code in a new cell:

!pip install transformers

Select the Correct Runtime: Click on Runtime > Change runtime type and select GPU for hardware accelerator.

Voila! Your basic setup is complete. But there's more to it if you want to optimize your workflow.
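A quick way to confirm the installation step worked is to print the installed package versions. This is a minimal sketch using only the Python standard library; the helper name `installed_version` is made up for this example, and `torch` is included on the assumption that you are using the PyTorch backend for transformers.

```python
from importlib import metadata


def installed_version(package: str):
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# Report the libraries this guide relies on.
for package in ("transformers", "torch"):
    version = installed_version(package)
    print(f"{package}: {version or 'not installed'}")
```

If a package shows as not installed, rerun the pip cell above and then restart the runtime if Colab asks you to.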

Mounting Google Drive in Google Colab

Google Drive integration is one of the key features that make Google Colab a powerful tool for data scientists. It allows you to access your data directly from your Google Drive, and save your work back to it.

Here's how you can mount your Google Drive in Google Colab:

Run the following code in a new cell:

from google.colab import drive

drive.mount('/content/drive')

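When prompted, choose your Google account and allow the notebook to access your Google Drive. (Older versions of Colab instead showed a link and asked you to paste an authorization code back into the notebook.)

Once the mount completes, you're done! Your files appear under /content/drive/MyDrive, and you can navigate through them directly from your Colab notebook.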
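Once Drive is mounted, it behaves like an ordinary folder, so you can read and write files with standard Python. The sketch below assumes the mount point used above, where Colab exposes "My Drive" at `/content/drive/MyDrive`. The project folder name and the fallback to a temporary directory are illustrative choices for this example, so the cell still runs when Drive is not mounted.

```python
from pathlib import Path
import tempfile

# Where Colab exposes "My Drive" after drive.mount('/content/drive').
DRIVE_ROOT = Path("/content/drive/MyDrive")


def get_project_dir(name: str = "llm_project") -> Path:
    """Return a project folder on Drive if it is mounted, else a temporary local folder."""
    base = DRIVE_ROOT if DRIVE_ROOT.is_dir() else Path(tempfile.gettempdir())
    project_dir = base / name
    project_dir.mkdir(parents=True, exist_ok=True)
    return project_dir


# Save a small file so it is still there after the Colab session ends
# (only when the Drive folder is actually in use).
project_dir = get_project_dir()
notes_file = project_dir / "notes.txt"
notes_file.write_text("Saved from a Colab notebook.\n", encoding="utf-8")
print(f"Wrote {notes_file}")
```

Files written under the Drive folder persist across sessions, whereas anything stored elsewhere on the Colab machine is lost when the runtime is recycled.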

Next, let's see how to enable GPU acceleration in Google Colab.

Enabling GPU Acceleration in Google Colab

If you're serious about working with LLMs, enabling GPU acceleration is an absolute must. Thankfully, Google Colab makes this easy and free of charge:

Head over to Runtime > Change runtime type.

Under Hardware accelerator, select a GPU option (for example, T4 GPU; the exact labels depend on what Colab currently offers), then save.

And that's it! You've just supercharged your Colab notebook with GPU acceleration.
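It is worth confirming that a GPU is actually attached before loading a model. The following sketch calls the `nvidia-smi` command-line tool, which ships with NVIDIA drivers, and reports the GPU name and total memory. The helper name `describe_gpu` and its messages are invented for this example.

```python
import shutil
import subprocess


def describe_gpu() -> str:
    """Return the name and total memory of the attached NVIDIA GPU, if any."""
    # nvidia-smi is only present when NVIDIA drivers are installed.
    if shutil.which("nvidia-smi") is None:
        return "No NVIDIA GPU detected (is the runtime type set to GPU?)"
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return "nvidia-smi is installed but no GPU is attached"
    return result.stdout.strip()


print(describe_gpu())
```

If the output reports no GPU, revisit the runtime settings above. Free-tier GPUs are not always available, so you may occasionally need to try again later.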

Running Large Language Models in Google Colab

By now, your Google Colab environment should be set up and ready to go. It’s time to bring in the heavy hitters.

Let’s take a look at a sample code snippet using Hugging Face’s transformers library:

from transformers import pipeline

# Initialize the model

nlp_model = pipeline('sentiment-analysis')

# Using the model for prediction

result = nlp_model('We are learning to use Google Colab for running large language models.')

print(result)

This basic code snippet feeds a sentence to the model and returns a sentiment analysis prediction. You can replace 'sentiment-analysis' with other tasks such as 'text-generation' or 'translation_en_to_fr', depending on your requirements.
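As an illustration of swapping the task, here is a hedged sketch of text generation that uses the GPU when one is available. The helper names `pick_device` and `generate_text` are made up for this example, and `gpt2` is chosen only because it is a small, publicly available model on the Hugging Face Hub; any other text-generation model ID could be substituted. The transformers import is deferred until the function is called, so defining the helpers does not download anything.

```python
def pick_device() -> int:
    """Return 0 (the first GPU) if PyTorch can see CUDA, otherwise -1 (CPU)."""
    try:
        import torch
    except ImportError:
        return -1
    return 0 if torch.cuda.is_available() else -1


def generate_text(prompt: str, model_name: str = "gpt2", max_new_tokens: int = 30) -> str:
    """Generate a continuation of the prompt with a Hugging Face pipeline."""
    # Imported lazily so this cell can be defined before the library is installed.
    from transformers import pipeline

    generator = pipeline("text-generation", model=model_name, device=pick_device())
    outputs = generator(prompt, max_new_tokens=max_new_tokens)
    # The pipeline returns a list of dicts, one per generated sequence.
    return outputs[0]["generated_text"]
```

In a Colab cell, calling `print(generate_text("Google Colab is"))` downloads the model on first use and then prints the prompt followed by the generated continuation. The output differs from run to run if the model's generation settings enable sampling.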

Conclusion

In an era of big data and ever-growing language models, the computational resources available to data scientists are crucial. Google Colab levels the playing field by providing significant computational power, including free GPU acceleration, all within a cloud-based, user-friendly environment.

Whether you're an individual working on a personal project, a data scientist in a small team, or even a student getting your hands dirty with LLMs for the first time, running LLMs in Google Colab offers an accessible and cost-effective way to get started.

So, why wait? Set up your Google Colab environment today and embark on the journey of harnessing the power of large language models for free!

And don't forget to try Anakin AI, which can save you hours of coding work by combining all your favourite AI models in one place, with a no-code AI app builder!