Anakin AI is your go-to solution!

Anakin AI is the all-in-one platform where you can access: Llama Models from Meta, Claude 3.5 Sonnet, GPT-4, Google Gemini Flash, Uncensored LLM, DALLE 3, Stable Diffusion, in one place, with API Support for easy integration!

Get Started and Try it Now!👇👇👇

Meta's Llama 3.1 models represent the latest advancement in open-source large language models (LLMs), offering impressive capabilities across various tasks. In this comprehensive guide, we'll explore how to run these models locally, compare their performance, and discuss alternative platforms for utilizing them.

Understanding Llama 3.1 Models

Llama 3.1 is available in three sizes: 8B, 70B, and 405B parameters. Each model size offers different capabilities and resource requirements:

- Llama 3.1 8B: Ideal for environments with limited computational resources; excels at text summarization, classification, sentiment analysis, and low-latency language translation.

- Llama 3.1 70B: Suitable for content creation, conversational AI, language understanding, and enterprise applications.

- Llama 3.1 405B: The largest openly available LLM at the time of its release, well suited to enterprise-level applications, research, and synthetic data generation.

Benchmarks and Performance Comparison

Llama 3.1 models have shown significant improvements over their predecessors across various benchmarks. Here's a comparison table highlighting some key performance metrics:

| Benchmark | Llama 3.1 8B | Llama 3.1 70B | Llama 3.1 405B | GPT-4 | Claude 3.5 Sonnet |
|---|---|---|---|---|---|
| MATH | 35.2 | 68.3 | 73.8 | 76.6 | 71.1 |
| MMLU | 45.3 | 69.7 | 75.1 | 86.4 | 79.3 |
| HumanEval | 18.3 | 42.2 | 48.9 | 67.0 | 65.2 |
| GSM8K | 22.1 | 63.5 | 69.7 | 92.0 | 88.4 |

Although the 405B model scores highest among the Llama 3.1 models in this table, it will not necessarily outperform the 70B model on every task. This variability is why it matters to choose the model size that fits your specific use case.
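To make "choosing the right model size" concrete, the sketch below takes the Llama 3.1 scores from the comparison table and computes how much of the 405B score the 70B model reaches on each benchmark, then finds the smallest size that clears a quality bar. The threshold is a hypothetical requirement you would set for your own use case, and the helper names are illustrative; benchmark scores are only a proxy for real task performance.

```python
# Scores copied from the comparison table above (Llama 3.1 columns only).
SCORES = {
    "MATH": {"8B": 35.2, "70B": 68.3, "405B": 73.8},
    "MMLU": {"8B": 45.3, "70B": 69.7, "405B": 75.1},
    "HumanEval": {"8B": 18.3, "70B": 42.2, "405B": 48.9},
    "GSM8K": {"8B": 22.1, "70B": 63.5, "405B": 69.7},
}

# Model sizes ordered from smallest to largest.
SIZES = ["8B", "70B", "405B"]


def retained_fraction(scores, smaller, larger):
    """Fraction of the larger model's score reached by the smaller model, per benchmark."""
    return {bench: row[smaller] / row[larger] for bench, row in scores.items()}


def smallest_model_meeting(scores, benchmark, threshold):
    """Return the smallest size scoring at least `threshold` on `benchmark`, or None."""
    for size in SIZES:
        if scores[benchmark][size] >= threshold:
            return size
    return None


if __name__ == "__main__":
    for bench, frac in retained_fraction(SCORES, "70B", "405B").items():
        print(f"{bench}: 70B reaches {frac:.0%} of the 405B score")
    # Hypothetical requirement: at least 60 on GSM8K.
    print(smallest_model_meeting(SCORES, "GSM8K", 60.0))
```

Scanning for the smallest qualifying size mirrors the "start small, scale up if needed" advice: you only pay for a larger model when a smaller one demonstrably falls short.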

Running Llama 3.1 Models Locally

1. Using Ollama to Deploy Llama 3.1 Models Locally (8B, 70B, 405B)

Ollama is a lightweight, extensible framework for running Llama models locally. It provides an easy-to-use command-line interface and supports various model sizes.

To get started with Ollama:

- Install Ollama from the official website (https://ollama.ai).

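- Open a terminal and run the following command to download and run Llama 3.1 (the default tag is the 8B model; use `llama3.1:70b` or `llama3.1:405b` for the larger sizes):

```bash
ollama run llama3.1
```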

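- Once the model has downloaded, `ollama run` opens an interactive chat session in the terminal. You can also pass a prompt as an argument to get a single response:

```bash
ollama run llama3.1 "Explain the concept of quantum entanglement"
```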

For more advanced usage, you can create a custom model file (Modelfile) to customize generation parameters:

```
FROM llama3.1:8b
PARAMETER temperature 0.7
PARAMETER top_k 50
PARAMETER top_p 0.95
PARAMETER repeat_penalty 1.1
```

Save this as a file named Modelfile, then create and run your custom model:

```bash
ollama create mymodel -f Modelfile
ollama run mymodel
```
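The temperature, top_k, and top_p parameters in the Modelfile control how the next token is picked from the model's output distribution. The sketch below illustrates the general idea on a toy list of logits: temperature rescales the logits, top-k keeps the k most likely tokens, and top-p (nucleus sampling) keeps the smallest set whose cumulative probability reaches p. It is not Ollama's actual implementation; real runtimes may apply these steps in a different order and also apply repeat_penalty, which is omitted here. The toy logits are made up.

```python
import math
import random


def softmax(logits):
    """Convert raw logits to probabilities (subtracting the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def filter_candidates(logits, temperature=0.7, top_k=50, top_p=0.95):
    """Return (token_index, probability) pairs that survive temperature,
    top-k, and top-p filtering, renormalized so the probabilities sum to 1."""
    if temperature <= 0:
        raise ValueError("this sketch requires temperature > 0")
    # Temperature: values below 1 sharpen the distribution, above 1 flatten it.
    probs = softmax([x / temperature for x in logits])
    # Top-k: keep only the k most likely tokens, highest first.
    ranked = sorted(enumerate(probs), key=lambda pair: pair[1], reverse=True)[:top_k]
    # Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for idx, p in ranked:
        kept.append((idx, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return [(idx, p / total) for idx, p in kept]


def sample(logits, rng, **kwargs):
    """Draw one token index from the filtered distribution."""
    candidates = filter_candidates(logits, **kwargs)
    indices = [idx for idx, _ in candidates]
    weights = [p for _, p in candidates]
    return rng.choices(indices, weights=weights, k=1)[0]


if __name__ == "__main__":
    toy_logits = [2.0, 1.0, 0.5, -1.0, -3.0]  # hypothetical 5-token vocabulary
    print(filter_candidates(toy_logits, temperature=0.7, top_k=3, top_p=0.9))
    print(sample(toy_logits, random.Random(0), temperature=0.7, top_k=3, top_p=0.9))
```

Lowering temperature or top_p shrinks the candidate set and makes output more deterministic; raising them allows more varied, but less predictable, text.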

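Ollama also provides a REST API for integrating Llama 3.1 into your applications. By default the reply is streamed as a series of JSON objects; setting "stream" to false returns a single JSON object instead:

```bash
curl http://localhost:11434/api/generate -d '{
  "model": "llama3.1",
  "prompt": "What is the capital of France?",
  "stream": false
}'
```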
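The same /api/generate endpoint can be called from application code. This minimal sketch uses only Python's standard library and assumes Ollama is listening on its default port 11434 with llama3.1 already pulled. It requests a non-streaming reply, so the generated text arrives in a single JSON object under the "response" key; the optional options dictionary passes per-request parameters such as temperature. The helper function names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_generate_request(prompt, model="llama3.1", options=None):
    """Build the JSON body for /api/generate; stream=False asks for one JSON reply."""
    body = {"model": model, "prompt": prompt, "stream": False}
    if options:
        body["options"] = options  # e.g. {"temperature": 0.7}
    return json.dumps(body).encode("utf-8")


def parse_generate_response(raw):
    """Extract the generated text from a non-streaming /api/generate reply."""
    return json.loads(raw)["response"]


def generate(prompt, model="llama3.1", options=None, url=OLLAMA_URL):
    """POST a prompt to a local Ollama server and return the generated text."""
    req = urllib.request.Request(
        url,
        data=build_generate_request(prompt, model, options),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return parse_generate_response(resp.read())


if __name__ == "__main__":
    # Requires a running Ollama server with llama3.1 pulled.
    print(generate("What is the capital of France?", options={"temperature": 0.2}))
```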

2. Using LM Studio to Deploy Llama 3.1 Models Locally (8B, 70B, 405B)

LM Studio offers a user-friendly graphical interface for running and interacting with Llama 3.1 models locally. Here's how to get started:

- Download and install LM Studio from https://lmstudio.ai.

- Launch LM Studio and navigate to the model search page.

- Search for "Llama 3.1" (for example, the builds published by lmstudio-community) to find available Llama 3.1 models.

- Choose the desired model size (8B or 70B) and variant (Base or Instruct).

- Download the selected model.

- Once downloaded, load the model in LM Studio.

- Use the chat interface to interact with the model or set up a local API server for programmatic access.
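When the local API server is running, LM Studio exposes an OpenAI-compatible endpoint. The sketch below assumes the server is on its default port (1234) and accepts the standard /v1/chat/completions request and response shapes. The model identifier `llama-3.1-8b-instruct` is a placeholder, so substitute the identifier LM Studio shows for the model you loaded; the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: LM Studio's local server runs on its default port (1234)
# and serves the OpenAI-compatible chat completions route.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_request(user_message, model, system_prompt=None, temperature=0.7):
    """Build an OpenAI-style chat completions request body."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_message})
    return {"model": model, "messages": messages, "temperature": temperature}


def extract_reply(response_json):
    """Pull the assistant's text out of an OpenAI-style response."""
    return response_json["choices"][0]["message"]["content"]


def chat(user_message, model, url=LMSTUDIO_URL, **kwargs):
    """Send one user message to the local server and return the reply text."""
    payload = json.dumps(build_chat_request(user_message, model, **kwargs)).encode("utf-8")
    req = urllib.request.Request(url, data=payload, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=300) as resp:
        return extract_reply(json.loads(resp.read()))


if __name__ == "__main__":
    # Placeholder identifier: use the one LM Studio displays for your loaded model.
    print(chat("Explain the concept of quantum entanglement", model="llama-3.1-8b-instruct"))
```

Because the request shape follows the OpenAI convention, code written this way can usually be pointed at other OpenAI-compatible servers by changing only the URL and model name.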

Llama 3.1 models come in two main variants, both available in LM Studio:

- Base: The pretrained model, which relies on prompt engineering techniques such as few-shot prompting and in-context learning.

- Instruct: Fine-tuned to follow instructions and engage in conversations.

For the best experience with the Instruct variant, use the "Llama 3" preset in LM Studio's settings.
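The Instruct variant expects each conversation turn to be wrapped in special tokens, which is what a chat preset takes care of. The sketch below renders a conversation in the Llama 3 Instruct prompt format described in Meta's model card; we assume LM Studio's "Llama 3" preset produces equivalent text, and `format_llama3_chat` is a hypothetical helper name. Llama 3.1 also defines extensions for tool use that this sketch does not cover.

```python
def format_llama3_chat(messages, add_generation_prompt=True):
    """Render a list of {"role": ..., "content": ...} dicts in the Llama 3 Instruct format."""
    parts = ["<|begin_of_text|>"]
    for msg in messages:
        # Each turn: a role header, a blank line, the content, then an end-of-turn token.
        parts.append(f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n")
        parts.append(msg["content"].strip())
        parts.append("<|eot_id|>")
    if add_generation_prompt:
        # Open an assistant turn so the model writes its reply next.
        parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


if __name__ == "__main__":
    print(format_llama3_chat([
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Explain the concept of quantum entanglement"},
    ]))
```

If an Instruct model rambles, repeats the user's turn, or never stops, a mismatched template is a common cause, which is why selecting the matching preset matters.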

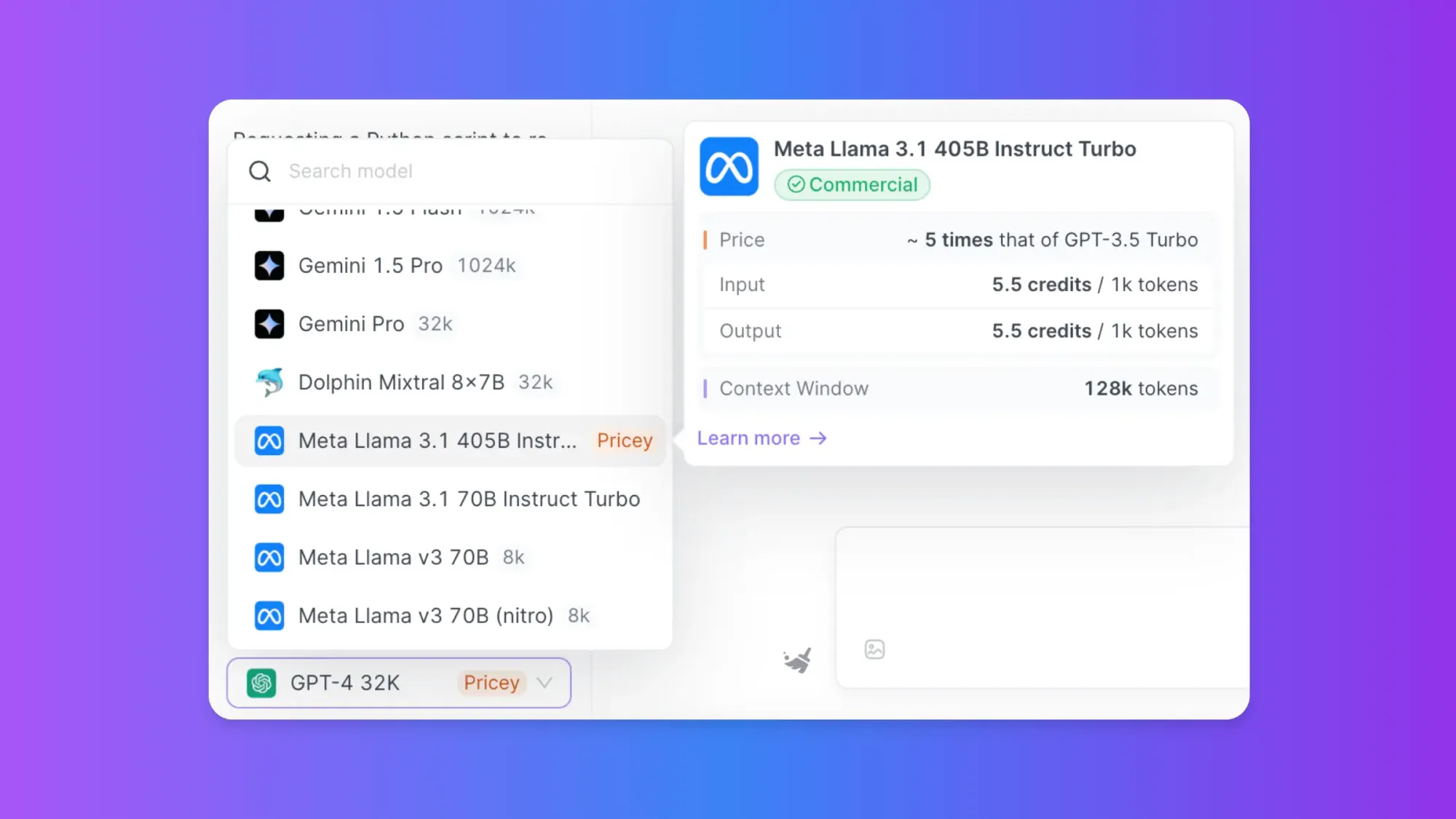

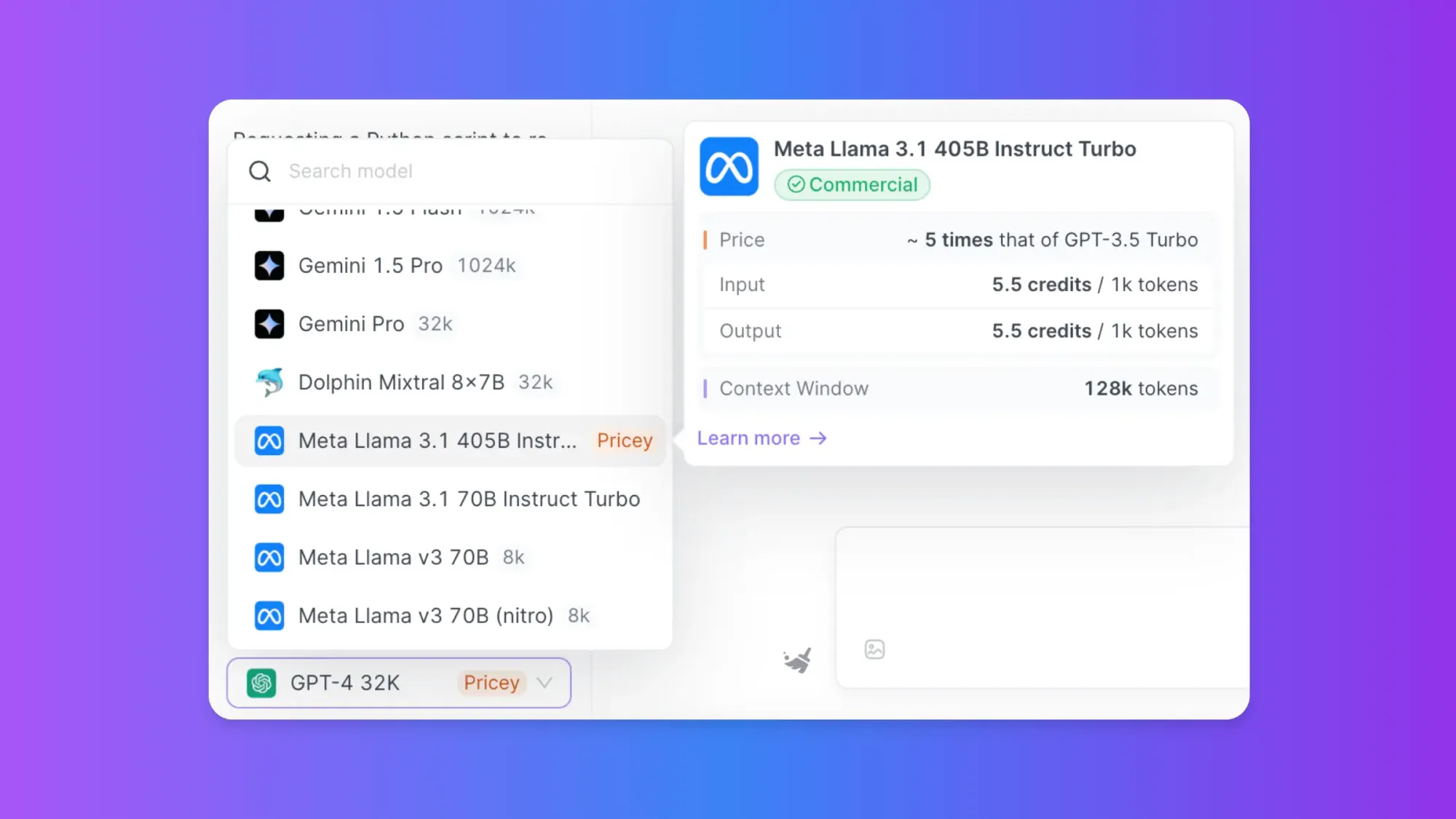

Using Anakin AI for Online Access

While running Llama 3.1 models locally offers great flexibility, it requires significant computational resources, especially for larger models. Anakin AI provides an alternative solution for those who want to leverage these models without the hassle of local setup.

Anakin AI is a versatile platform that integrates various AI models, including the Llama series, allowing users to create and customize AI applications without coding. Here's how you can use Llama 3.1 models through Anakin AI:

- Visit the Anakin AI website and create an account.

- Explore the app store featuring over 1,000 pre-built AI apps.

- Select a Llama 3.1-based app or create a custom one using the platform's tools.

- Customize the app to suit your specific requirements.

- Deploy and use the app directly through Anakin AI's interface.

With Anakin AI, you can create AI workflows without any coding knowledge, combining LLM APIs such as GPT-4, Claude 3.5 Sonnet, and Uncensored Dolphin-Mixtral with Stable Diffusion, DALLE, web scraping, and more in a single workflow!

Forget about complicated coding and automate your mundane work with Anakin AI!

For a limited time, you can also use Google Gemini 1.5 and Stable Diffusion for Free!

Anakin AI offers several advantages for using Llama 3.1 models:

- No local setup required: Access powerful AI models without worrying about hardware requirements or complex installations.

- Integration with other AI models: Combine Llama 3.1 with other models like GPT-3.5, DALL-E, or Stable Diffusion for more comprehensive solutions.

- User-friendly interface: Create and deploy AI applications without coding skills.

- Scalability: Easily scale your AI solutions as your needs grow.

- Diverse use cases: Suitable for content generation, customer support, data analysis, and more.

Choosing the Right Approach

When deciding how to run Llama 3.1 models, consider the following factors:

- Computational resources: Running models locally requires significant CPU/GPU power and memory, especially for larger models.

- Privacy and data security: Running models locally offers more control over your data.

- Customization needs: Local setups provide more flexibility for fine-tuning and customization.

- Ease of use: Platforms like Anakin AI offer a more user-friendly experience for non-technical users.

- Integration requirements: Consider how the model needs to integrate with your existing systems.
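A rough way to gauge the computational-resources factor is to estimate how much memory the model weights alone occupy: parameter count times bytes per parameter. The sketch below assumes 2 bytes per parameter for 16-bit weights, 1 byte for 8-bit, and 0.5 bytes for 4-bit quantization. Real quantized formats add some overhead for scaling factors, and the KV cache and runtime need memory on top of this, so treat the results as lower bounds.

```python
# Approximate bytes per parameter at common precisions (assumed, weights only).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "q4": 0.5}

# Parameter counts for the three Llama 3.1 sizes.
MODEL_PARAMS = {"8B": 8e9, "70B": 70e9, "405B": 405e9}


def weight_memory_gb(params, precision):
    """Memory for the weights alone, in GB (10**9 bytes).
    Excludes KV cache, activations, and runtime overhead."""
    return params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in MODEL_PARAMS.items():
        row = ", ".join(
            f"{prec}: ~{weight_memory_gb(params, prec):.1f} GB" for prec in BYTES_PER_PARAM
        )
        print(f"Llama 3.1 {name} -> {row}")
```

For example, the 8B model needs about 16 GB at 16-bit precision but about 4 GB at 4-bit, while the 405B model still needs roughly 200 GB at 4-bit, which puts it out of reach for a typical single machine.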

Best Practices for Using Llama 3.1 Models

Regardless of how you choose to run Llama 3.1 models, keep these best practices in mind:

- Start with the smallest suitable model: Begin with the 8B model and scale up if needed.

- Fine-tune for specific tasks: Adapt the model to your specific use case for better performance.

- Monitor resource usage: Keep an eye on CPU, GPU, and memory utilization, especially when running locally.

- Implement proper prompting techniques: Use clear and specific prompts to get the best results from the model.

- Stay updated: Keep your local installations or platform subscriptions up to date to benefit from the latest improvements.
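Clear, specific prompts matter most with Base models, which respond best to few-shot prompting. The sketch below assembles a plain-text few-shot prompt for sentiment analysis, one of the tasks listed for the 8B model. `build_few_shot_prompt` and the "Text:"/"Sentiment:" labels are illustrative choices, not a format the model requires.

```python
def build_few_shot_prompt(instruction, examples, query, input_label="Text", output_label="Sentiment"):
    """Assemble a plain-text few-shot prompt.

    instruction: a clear, specific task description
    examples:    list of (input, output) pairs demonstrating the task
    query:       the new input; its output is left blank for the model to complete
    """
    lines = [instruction.strip(), ""]
    for text, label in examples:
        lines.append(f"{input_label}: {text}")
        lines.append(f"{output_label}: {label}")
        lines.append("")  # blank line separates examples
    lines.append(f"{input_label}: {query}")
    lines.append(f"{output_label}:")
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Classify the sentiment of each text as Positive or Negative.",
        [
            ("The setup took five minutes and everything worked.", "Positive"),
            ("The model kept crashing on my laptop.", "Negative"),
        ],
        "Responses are fast and accurate.",
    )
    print(prompt)
```

Ending the prompt with the empty output label nudges a Base model to answer with a single label in the same style as the examples.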

Conclusion

Llama 3.1 models offer impressive capabilities for various natural language processing tasks. Whether you choose to run them locally using tools like Ollama and LM Studio or leverage cloud platforms like Anakin AI, these models provide a powerful foundation for building advanced AI applications.

Local setups offer greater control and customization options but require more technical expertise and computational resources. On the other hand, platforms like Anakin AI provide an accessible and scalable solution for those who want to harness the power of Llama 3.1 without the complexities of local deployment.

As the field of AI continues to evolve rapidly, staying informed about the latest developments and best practices will be crucial for making the most of these powerful language models. Experiment with different approaches, model sizes, and use cases to find the optimal solution for your specific needs.
