Integrating language models into applications has become central to work in natural language processing (NLP). LangChain, a framework for building applications with language models, simplifies this integration through wrappers such as CTransformers. CTransformers runs models implemented in C/C++ on top of the GGML library while exposing them through a Python interface, which makes it easier for developers to run language models efficiently on their own machines. This article covers how CTransformers works within the LangChain framework, with installation steps, practical examples, and a look at the LangChain Community.

TL;DR: Quick Overview

- Install LangChain and CTransformers: Easily add these tools to your Python environment.

- Generate Text with CTransformers: Use pre-trained models to create text.

- Explore Advanced Features: Implement streaming for dynamic text generation.

- Join the LangChain Community: Engage with a network of NLP enthusiasts and experts.

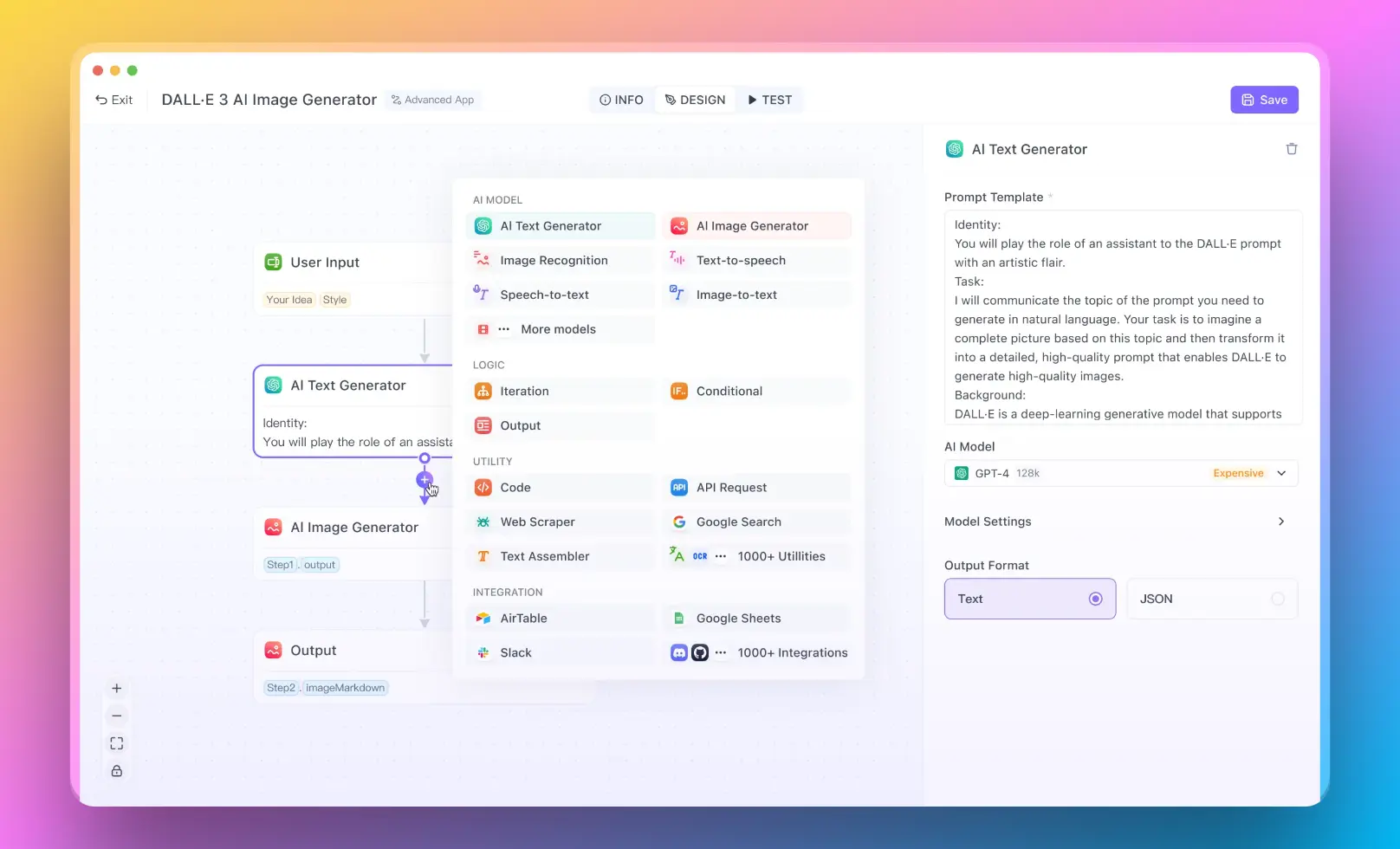


What is CTransformers in LangChain?

CTransformers in LangChain is a wrapper around the `ctransformers` library, which provides Python bindings for Transformer models implemented in C/C++ with the GGML library. It offers a unified interface across several model families (for example GPT-2, GPT-J, and LLaMA), and it can load models either from local files or directly from the Hugging Face Hub by repository name.
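The wrapper's main constructor arguments are `model` (a Hub repository name or a local path), `model_file` (which file in the repository to load), `model_type` (for example `"gpt2"`), and `config`, a dictionary of generation settings such as `max_new_tokens` or `temperature` that is passed through to ctransformers. The sketch below gathers these arguments in one place; the helper name `ctransformers_kwargs` and the example values are illustrative assumptions, not part of LangChain.

```python
from typing import Any, Optional


def ctransformers_kwargs(
    model: str,
    model_type: Optional[str] = None,
    model_file: Optional[str] = None,
    **config: Any,
) -> dict:
    """Hypothetical helper: collect keyword arguments for CTransformers.

    Any extra keyword arguments (e.g. max_new_tokens, temperature) are
    grouped into the `config` dictionary used by ctransformers.
    """
    kwargs: dict = {"model": model}
    if model_type is not None:
        kwargs["model_type"] = model_type
    if model_file is not None:
        kwargs["model_file"] = model_file
    if config:
        kwargs["config"] = dict(config)
    return kwargs


if __name__ == "__main__":
    # Only builds the arguments; no model is downloaded here
    print(
        ctransformers_kwargs(
            "marella/gpt-2-ggml", model_type="gpt2", max_new_tokens=64, temperature=0.7
        )
    )
```

With LangChain installed, `CTransformers(**kwargs)` would then load the model with those settings.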

How to Install LangChain in Python?

Installing LangChain in Python takes a single command:

```shell
pip install langchain
```

This command installs LangChain and its core dependencies. Note that the CTransformers wrapper itself lives in the separate `langchain-community` package (`pip install langchain-community`), and it also requires the `ctransformers` library.

What is LangChain Community?

The LangChain Community is an ecosystem of developers, researchers, and enthusiasts who explore and extend language models and their applications. Community members share insights and resources and contribute documentation, examples, support, and third-party integrations to the LangChain library. Many of these integrations, including the CTransformers wrapper, are published in the `langchain-community` package, which is why the examples in this article import from `langchain_community`. This shared work plays a crucial role in making current NLP technology widely accessible.

Steps to Use CTransformers with LangChain

Installing LangChain and CTransformers

Before diving into examples, set up your environment. Install LangChain, the `langchain-community` package that contains the wrapper, and CTransformers:

```shell
pip install langchain
pip install langchain-community
pip install ctransformers
```

With these packages installed, you have the tools needed to start experimenting with CTransformers within the LangChain framework.
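To confirm the installation worked, you can check that each package is importable. This is a small sketch using only the standard library; note that pip package names and import names differ (`langchain-community` is imported as `langchain_community`), and the helper name `missing_modules` is hypothetical.

```python
import importlib.util

# Import names (not pip names) of the packages used in this article
REQUIRED_MODULES = ("langchain", "langchain_community", "ctransformers")


def missing_modules(names=REQUIRED_MODULES) -> list:
    """Return the module names from `names` that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All packages are installed.")
```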

Basic Usage of CTransformers in LangChain

To begin, let's explore how to load and use a model with CTransformers in LangChain:

```python
from langchain_community.llms import CTransformers

# Initialize the CTransformers model (a GGML build of GPT-2 on the Hugging Face Hub)
llm = CTransformers(model="marella/gpt-2-ggml")

# Generate text that continues the prompt
response = llm.invoke("AI is going to")
print(response)
```

This snippet downloads the model from the Hugging Face Hub on first use, loads it through CTransformers, and returns the generated continuation as a string, all through the standard LangChain `invoke` interface.
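Because `invoke` takes a prompt string and returns a string, code that uses the model can be written against that small interface and exercised with a stand-in, for example in unit tests where downloading a model is impractical. In the sketch below, `CannedLLM` and `complete_all` are hypothetical names, not part of LangChain; a real `CTransformers` instance could be passed to `complete_all` in place of the stub.

```python
from typing import List, Protocol


class SupportsInvoke(Protocol):
    """Anything with an invoke(prompt) -> str method, such as a LangChain LLM."""

    def invoke(self, prompt: str) -> str: ...


class CannedLLM:
    """Hypothetical stand-in model that returns a fixed continuation."""

    def __init__(self, continuation: str) -> None:
        self.continuation = continuation
        self.prompts_seen: List[str] = []  # records prompts for inspection in tests

    def invoke(self, prompt: str) -> str:
        self.prompts_seen.append(prompt)
        return self.continuation


def complete_all(llm: SupportsInvoke, prompts: List[str]) -> List[str]:
    """Run each prompt through the model and return the outputs in order."""
    return [llm.invoke(p) for p in prompts]
```

LangChain models also provide a built-in `batch` method for running several prompts; the explicit loop here simply keeps the stub minimal.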

Advanced Text Generation with Streaming

CTransformers also supports streaming, which emits tokens as they are generated instead of waiting for the full response. To enable it, pass a streaming callback handler when creating the model:

```python
from langchain_community.llms import CTransformers
from langchain_core.callbacks import StreamingStdOutCallbackHandler

# Configure CTransformers with a callback that prints each new token to stdout
llm = CTransformers(
    model="marella/gpt-2-ggml",
    callbacks=[StreamingStdOutCallbackHandler()],
)

# Tokens are printed while generation runs; the full text is also returned
response = llm.invoke("AI is going to")
```

This example illustrates how to enhance the text generation process with streaming, enabling real-time output for dynamic applications.
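If you want to capture streamed tokens rather than print them, a custom callback handler can implement `on_llm_new_token`, the hook LangChain calls for each new token. The `TokenCollector` class below is a hypothetical sketch; it inherits from `langchain_core.callbacks.BaseCallbackHandler` when LangChain is installed and falls back to a plain class otherwise so the logic can be tried in isolation.

```python
from typing import Any, List

try:
    # Base class that LangChain callback handlers inherit from
    from langchain_core.callbacks import BaseCallbackHandler
except ImportError:  # lets the sketch run without LangChain installed
    BaseCallbackHandler = object  # type: ignore[misc,assignment]


class TokenCollector(BaseCallbackHandler):
    """Hypothetical handler that stores streamed tokens instead of printing them."""

    def __init__(self) -> None:
        super().__init__()
        self.tokens: List[str] = []

    def on_llm_new_token(self, token: str, **kwargs: Any) -> None:
        # Invoked once per generated token while the model streams
        self.tokens.append(token)

    @property
    def text(self) -> str:
        """All tokens received so far, joined into one string."""
        return "".join(self.tokens)
```

To use it, create `collector = TokenCollector()`, pass `callbacks=[collector]` to `CTransformers(...)`, and read `collector.text` after `invoke` returns.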

Performing Sentiment Analysis with CTransformers in LangChain

In this scenario, we assume that a GGML sentiment model is available on the Hugging Face Hub and can be loaded through CTransformers. The model name "sentiment-analysis-ggml" is fictional and used only for demonstration; replace it with the repository name of the model you intend to use. Keep in mind that ctransformers runs causal text-generation models, so "classifying" sentiment here means the model generates text that names the sentiment, rather than returning a label from a dedicated classification head.
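Because models loaded through ctransformers generate text, a practical way to ask for a sentiment label is to wrap the input in an instruction prompt that constrains the answer. The template wording and the `build_sentiment_prompt` helper below are illustrative assumptions, not part of LangChain or ctransformers, and how closely a given model follows such a prompt depends on the model.

```python
SENTIMENT_LABELS = ("positive", "negative", "neutral")

# Hypothetical instruction template; adjust the wording for your model
PROMPT_TEMPLATE = (
    "Classify the sentiment of the following text as one of: {labels}.\n"
    "Text: {text}\n"
    "Sentiment:"
)


def build_sentiment_prompt(text: str) -> str:
    """Wrap `text` in a prompt asking the model for a single sentiment label."""
    return PROMPT_TEMPLATE.format(labels=", ".join(SENTIMENT_LABELS), text=text.strip())
```

The resulting string can be passed to the model's `invoke` method in place of the raw text. LangChain's `PromptTemplate` can hold the same template if you prefer to build chains.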

Step 1: Install LangChain and CTransformers

First, ensure that LangChain, `langchain-community`, and CTransformers are installed in your Python environment. If you haven't installed them yet, run:

```shell
pip install langchain
pip install langchain-community
pip install ctransformers
```

Step 2: Import Necessary Libraries

Next, import the CTransformers wrapper from the `langchain_community` package:

```python
from langchain_community.llms import CTransformers
```

Step 3: Initialize the Sentiment Analysis Model

Now, let's initialize the sentiment analysis model using CTransformers. Replace "sentiment-analysis-ggml" with the actual model name you're using.

```python
# Initialize the sentiment analysis model ("sentiment-analysis-ggml" is a placeholder name)
sentiment_model = CTransformers(model="sentiment-analysis-ggml")
```

Step 4: Perform Sentiment Analysis

With the model initialized, you can now perform sentiment analysis on any text. Let's analyze the sentiment of a sample sentence:

```python
# Sample text
text = "LangChain and CTransformers make NLP tasks incredibly easy and efficient."

# Perform sentiment analysis
sentiment_result = sentiment_model.invoke(text)
print(sentiment_result)
```

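This code prints whatever text the model generates. Because CTransformers wraps text-generation models, `invoke` returns a plain string rather than a structured result: whether that string contains a label such as "positive" or "negative" depends on the model and on how the prompt is phrased, and a confidence score is only available if the model itself writes one into its output.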
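Since the output is free text, you usually need to extract the label yourself. The `parse_sentiment` function below is a hypothetical sketch that returns the first known label appearing in the generated text, or `None` if none is found.

```python
import re
from typing import Optional

SENTIMENT_LABELS = ("positive", "negative", "neutral")
# Whole-word match on any of the labels, e.g. "positive" but not "positively"
_LABEL_PATTERN = re.compile(r"\b(" + "|".join(SENTIMENT_LABELS) + r")\b")


def parse_sentiment(generated: str) -> Optional[str]:
    """Return the first sentiment label found in the model's output, or None."""
    match = _LABEL_PATTERN.search(generated.lower())
    return match.group(1) if match else None
```

Matching the first label is a deliberately simple heuristic: an output such as "not positive" would be read as "positive", so stricter prompts or parsing may be needed in practice.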

Conclusion

CTransformers in LangChain combines the efficiency of C/C++ model implementations with the convenience of Python. This article covered installation, basic text generation, streaming, and a sentiment analysis walkthrough, giving developers a starting point for using CTransformers in their own projects. The LangChain Community, through the integrations and resources it contributes, continues to drive collaboration in NLP.

Try CTransformers in LangChain to bring efficient, locally run language models into your applications.