ControlNet | Use ControlNet for Stable Diffusion Online | Free AI tool

Unlock the future of creative AI with ControlNet Stable Diffusion - harness the power to craft stunning images from text like never before!

Introduction

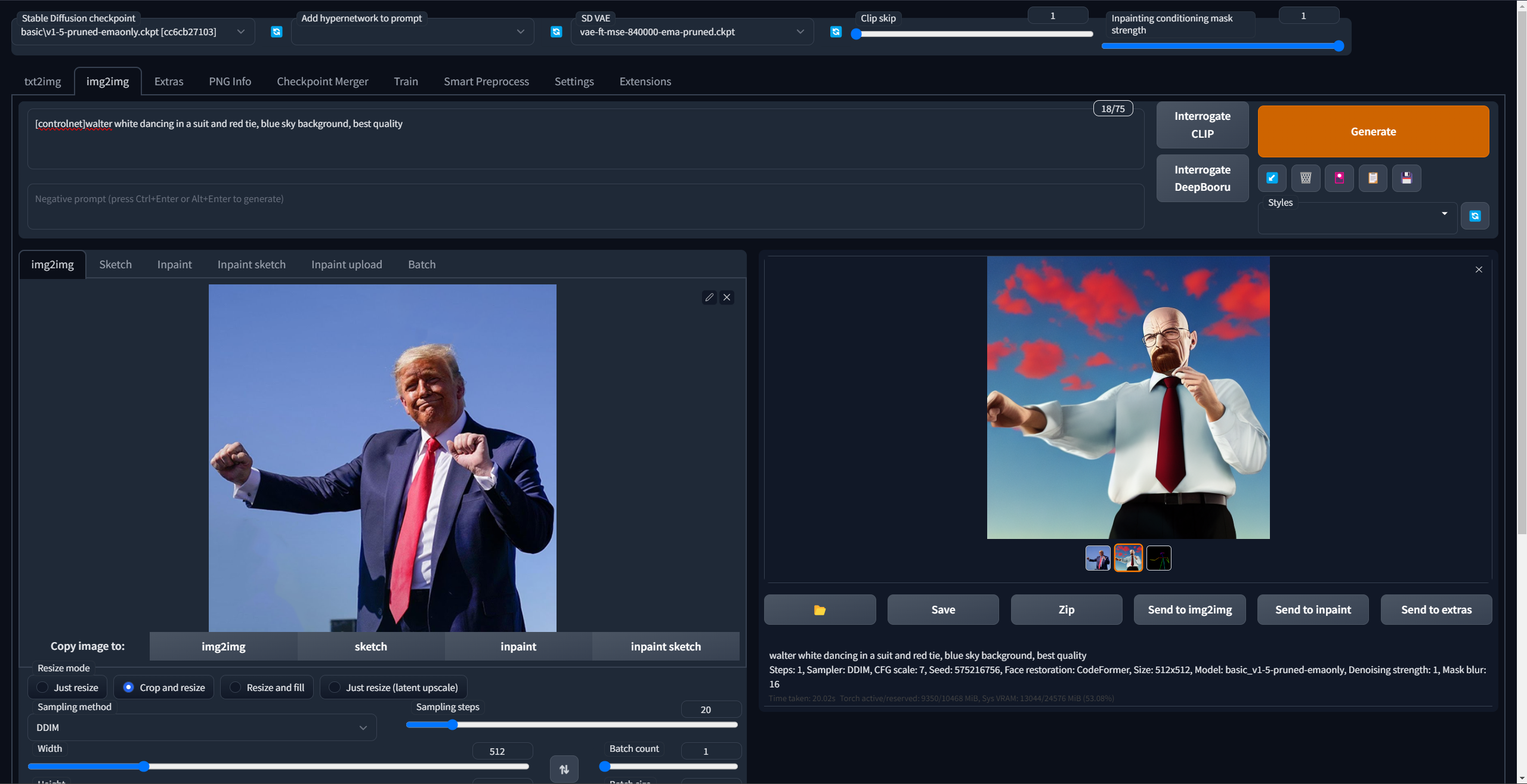

ControlNet Stable Diffusion: Enhancing Text-to-Image Generation

Are you ready to take your text-to-image generation to the next level? Look no further than ControlNet Stable Diffusion. In this comprehensive guide, we will delve into what ControlNet Stable Diffusion is, how it can elevate your text-to-image generation capabilities, and answer some burning questions about this innovative tool.

What is ControlNet Stable Diffusion?

ControlNet is a neural network architecture that adds conditional control to pretrained text-to-image diffusion models such as Stable Diffusion. The combination, referred to in this guide as ControlNet Stable Diffusion, gives you greater control and precision over the generation process than a text prompt alone.

The technique builds on diffusion models, which have become popular for their ability to produce high-quality images from textual descriptions. ControlNet takes this a step further by introducing conditional control: alongside the text prompt, users supply a control signal, typically an image such as an edge map, a depth map, or a human pose skeleton, that constrains the layout of the result.

The core idea behind ControlNet Stable Diffusion is to let users steer the composition and structure of the generated image while the text prompt continues to describe its content and style. This is achieved by keeping the pretrained Stable Diffusion weights frozen and training a copy of its encoder blocks on the control signal. The copy is connected to the frozen model through convolution layers initialized to zero, so training starts from the behavior of the unmodified model.

Is ControlNet Stable Diffusion Free?

One of the first questions that often comes to mind when exploring a new AI tool is its accessibility. ControlNet Stable Diffusion is built on the foundations of open-source machine learning, and that means it is freely available to the research and developer community. It can be accessed through popular platforms like Hugging Face, making it accessible to a wide range of users.

This open-source nature allows developers and researchers to experiment with ControlNet Stable Diffusion, integrate it into their projects, and contribute to its ongoing development. So, whether you are a seasoned AI researcher or a curious developer looking to explore new horizons, ControlNet Stable Diffusion is within your reach without any financial barriers.

ControlNet vs. U-Net: Understanding the Difference

Before diving into how ControlNet Stable Diffusion works, it's important to understand how it relates to U-Net. ControlNet is not a successor to U-Net; it is an add-on to the U-Net that sits at the core of Stable Diffusion.

U-Net is named for the U-shaped layout of its encoder and decoder, which are linked by skip connections. It was originally proposed for biomedical image segmentation. In Stable Diffusion, a U-Net serves as the denoising network: at each step it predicts the noise to remove from a latent image, guided by the encoded text prompt.

ControlNet builds on that U-Net rather than replacing it, and introduces a significant enhancement: conditional control. On its own, the U-Net is guided only by the text prompt. With ControlNet attached, a trainable copy of the U-Net's encoder blocks processes the control signal and feeds its output back into the frozen U-Net, enabling users to guide the generation process more precisely.

In essence, ControlNet Stable Diffusion gives users more say over the composition of the generated images, such as outlines, poses, and depth, than the text-conditioned U-Net provides alone. This additional level of control makes it a powerful tool for creating images that match the intended artistic vision.
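The relationship between the frozen U-Net and the ControlNet branch can be illustrated with a toy sketch. The code below is not the real architecture: plain Python lists stand in for feature maps, the functions `frozen_block`, `trainable_copy`, and `zero_conv` are hypothetical stand-ins invented for this illustration, and each convolution is reduced to a scalar scale-and-shift. It only shows the wiring, in which zero-initialized connections make the combined model start out identical to the original one.

```python
def frozen_block(x):
    # Stand-in for a pretrained U-Net block whose weights stay frozen.
    return [2.0 * v + 1.0 for v in x]


def trainable_copy(x):
    # Stand-in for the trainable copy, initialized with the same weights.
    return [2.0 * v + 1.0 for v in x]


def zero_conv(x, weight=0.0, bias=0.0):
    # A convolution reduced to scale-and-shift; weight and bias start at zero.
    return [weight * v + bias for v in x]


def controlled_block(x, condition, w_in=0.0, w_out=0.0):
    # Output of the frozen path, unchanged by ControlNet.
    base = frozen_block(x)
    # The control signal enters the trainable copy through a zero convolution.
    injected = [a + b for a, b in zip(x, zero_conv(condition, w_in))]
    # The copy's output is added back through a second zero convolution.
    residual = zero_conv(trainable_copy(injected), w_out)
    return [a + b for a, b in zip(base, residual)]


x = [1.0, 2.0]
condition = [5.0, -3.0]
print(controlled_block(x, condition))            # identical to frozen_block(x)
print(controlled_block(x, condition, 1.0, 0.5))  # the control signal now shifts the output
```

With both weights at zero the output matches the frozen block exactly. Once training moves the weights away from zero, the control signal begins to influence the result.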

Adding ControlNet to Stable Diffusion

If you are eager to harness the capabilities of ControlNet Stable Diffusion for your text-to-image generation tasks, you'll want to know how to integrate it into your projects. Here's a basic guide to adding ControlNet to Stable Diffusion:

- Choose a Framework: First, decide on the deep learning framework you want to work with. The reference ControlNet implementation is written in PyTorch, and the Hugging Face Diffusers library offers ControlNet pipelines as well. Ensure you have the necessary environment set up.

- Install Dependencies: Install the required dependencies, including the library that provides your ControlNet implementation, as well as any other libraries you plan to use for text processing and image generation.

- Prepare Your Data: Organize your textual descriptions and any control signals or conditioning information you wish to use. Ensure that your dataset is appropriately formatted for training and testing.

- Model Configuration: Define the architecture and configuration of your ControlNet Stable Diffusion model. You can customize various parameters, such as the depth of the network, the dimensionality of the control space, and more, to suit your specific needs.

- Training: Train your ControlNet Stable Diffusion model using your prepared dataset. Monitor the training process, and fine-tune the model as needed to achieve the desired results. If a pretrained ControlNet model already covers your type of control signal, you can skip this step.

- Inference: Once your model is trained, you can use it to generate images from text prompts while providing control signals to guide the output. Experiment with different combinations of text and control inputs to achieve the desired level of control over the generated images.

- Evaluation and Iteration: Continuously evaluate the quality of generated images and iterate on your model and training process to improve performance and alignment with your creative goals.

This basic guide should help you get started with ControlNet Stable Diffusion. However, keep in mind that the implementation details may vary depending on your specific use case and the deep learning framework you choose.
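A control signal is typically an image derived from a reference picture. As a minimal sketch of the data-preparation step, the function below turns a small grayscale image into a binary edge map using central differences and a threshold. This is a simplified stand-in chosen so the example needs no third-party libraries. The function name `edge_map` and the threshold value are assumptions made for illustration, and in practice a dedicated detector such as Canny from an image-processing library would normally be used.

```python
import math


def edge_map(gray, threshold=100.0):
    """Return a binary edge map (0 or 255) for a 2D list of grayscale values."""
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    # Border pixels stay at 0 because they lack a full neighborhood.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gray[y][x + 1] - gray[y][x - 1]  # horizontal intensity change
            gy = gray[y + 1][x] - gray[y - 1][x]  # vertical intensity change
            if math.hypot(gx, gy) >= threshold:
                edges[y][x] = 255
    return edges


# A 5x5 test image: dark on the left, bright on the right.
image = [[0, 0, 0, 255, 255] for _ in range(5)]
for row in edge_map(image):
    print(row)
```

The resulting map marks the columns where the image jumps from dark to bright, which is the kind of outline an edge-conditioned ControlNet model takes as its control input.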

ControlNet on Hugging Face

Hugging Face, a prominent platform in the AI and machine learning community, plays a pivotal role in making cutting-edge AI models and techniques accessible to a wide audience. ControlNet Stable Diffusion is no exception, as it is readily available on the Hugging Face model hub.

Hugging Face provides a user-friendly interface for exploring, using, and fine-tuning ControlNet models. You can easily access pre-trained ControlNet models, experiment with different text prompts and control signals, and even fine-tune these models on your own datasets, tailoring them to your specific needs.
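As a rough sketch of what using a pre-trained ControlNet model can look like, the example below relies on the Hugging Face Diffusers library with PyTorch. Several details are assumptions rather than anything prescribed by this guide: the two Hub repository ids, the `ControlNetJob` and `call_kwargs` helper names, the default step count, and the input file name are all illustrative, and the libraries must be installed separately. Treat it as a starting point to adapt, not a definitive recipe.

```python
from dataclasses import dataclass


@dataclass
class ControlNetJob:
    prompt: str
    control_image_path: str
    # Assumed Hub repository ids; swap in the checkpoints you actually use.
    controlnet_id: str = "lllyasviel/sd-controlnet-canny"
    base_model_id: str = "stable-diffusion-v1-5/stable-diffusion-v1-5"
    steps: int = 30
    conditioning_scale: float = 1.0  # how strongly the control image is applied


def call_kwargs(job, control_image):
    """Collect the arguments passed to the pipeline call."""
    return {
        "prompt": job.prompt,
        "image": control_image,
        "num_inference_steps": job.steps,
        "controlnet_conditioning_scale": job.conditioning_scale,
    }


def generate(job):
    """Load the models and generate one image (downloads weights on first use)."""
    # Imported here so the module loads even without the heavy dependencies.
    import torch
    from diffusers import ControlNetModel, StableDiffusionControlNetPipeline
    from diffusers.utils import load_image

    use_gpu = torch.cuda.is_available()
    dtype = torch.float16 if use_gpu else torch.float32
    controlnet = ControlNetModel.from_pretrained(job.controlnet_id, torch_dtype=dtype)
    pipe = StableDiffusionControlNetPipeline.from_pretrained(
        job.base_model_id, controlnet=controlnet, torch_dtype=dtype
    ).to("cuda" if use_gpu else "cpu")
    control_image = load_image(job.control_image_path)
    return pipe(**call_kwargs(job, control_image)).images[0]
```

A call such as `generate(ControlNetJob(prompt="a red brick house", control_image_path="edges.png"))` would return a PIL image, where `edges.png` is a hypothetical edge map prepared beforehand. Lowering `conditioning_scale` below 1.0 loosens the influence of the control image, which is one way to experiment with different combinations of text and control inputs.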

The integration of ControlNet on Hugging Face simplifies the adoption of this technology for researchers, developers, and enthusiasts. It offers a collaborative and community-driven environment for sharing knowledge, model architectures, and expertise related to ControlNet Stable Diffusion.

In conclusion, ControlNet Stable Diffusion is a game-changing innovation in the field of text-to-image generation. With its ability to provide conditional control over image generation, it empowers users to create visually stunning and highly customized images from textual descriptions. Its open-source nature ensures accessibility, and platforms like Hugging Face make it even more approachable for researchers and developers. By adding ControlNet to Stable Diffusion and harnessing its power, you can unlock new creative possibilities in the world of AI-generated imagery.