Alibaba Cloud has raised the bar for open large language models with its Qwen series. The release of Qwen-72B and Qwen-1.8B is more than an incremental update. The two models cover both ends of the size spectrum, from a 72-billion-parameter flagship to a compact model that runs on modest hardware, and both could influence how industries adopt AI. Let's look at what each model can do and what is new about them.

The Qwen series by Alibaba Cloud is a suite of large language models (LLMs) designed to understand and generate human-like text. What sets Qwen-72B and Qwen-1.8B apart is how they balance scale and efficiency: the larger model targets complex language tasks that challenge even human experts, while the smaller one aims for strong results at a fraction of the size.

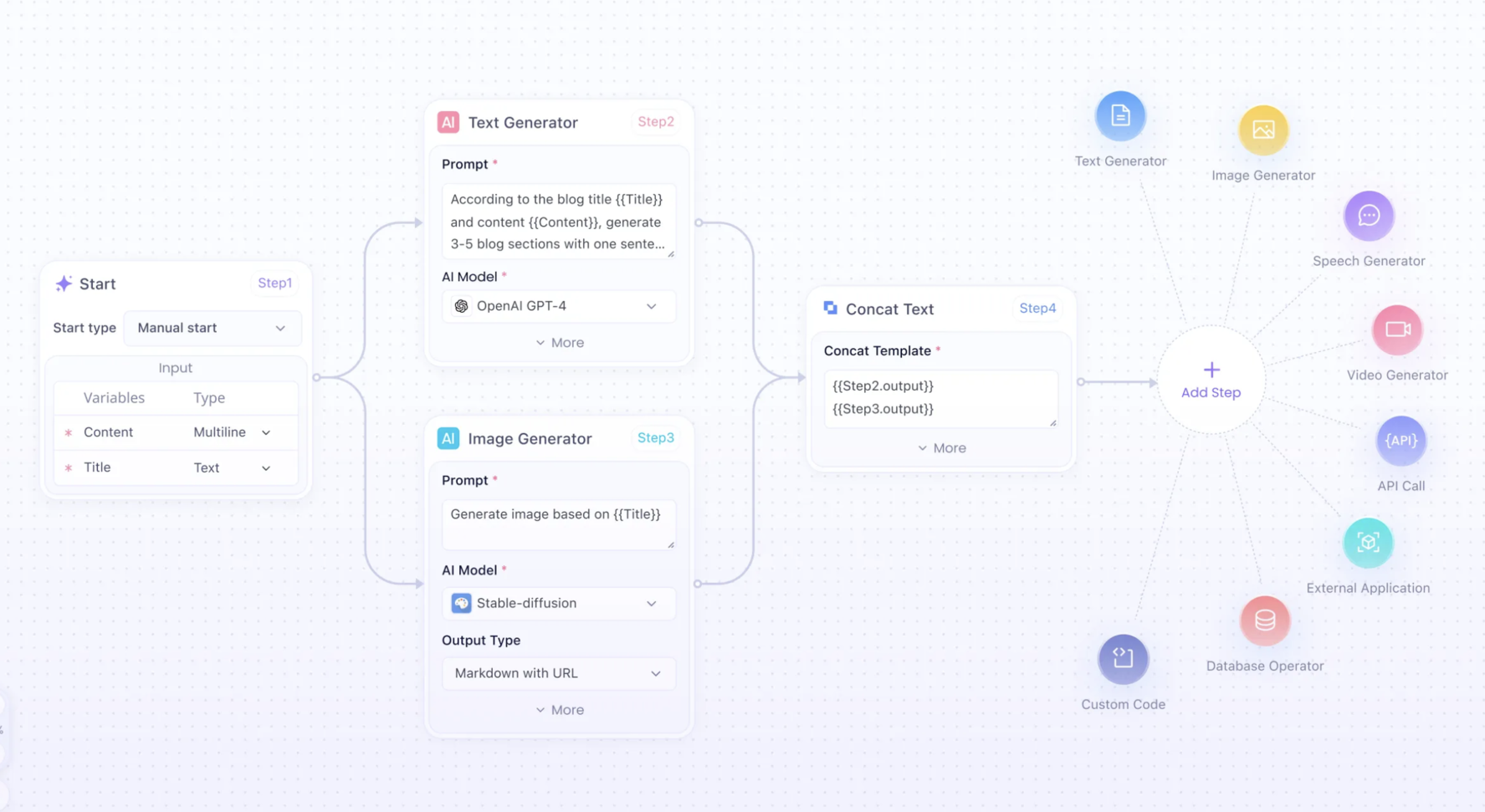

What is Qwen-72B?

Qwen-72B is a heavyweight in the AI world with 72 billion parameters. Parameters are the learned weights of the neural network. Broadly, more parameters give a model more capacity to capture patterns in language, although size alone does not guarantee quality. The model was pretrained on a dataset of 3 trillion tokens, the small units of text (words, subwords or characters) from which it learns the nuances of language.

- Multilingual Abilities: It's not just about the size but also about reach. Qwen-72B supports multiple languages, making it a versatile tool for global communication.

- Performance Metrics: In Alibaba's published benchmark radar chart, Qwen-72B outperforms the other models shown on benchmarks such as MATH (mathematical problem-solving) and HumanEval (code generation), pointing to strong problem-solving skills.

What is Qwen-1.8B?

Then there's Qwen-1.8B, much smaller in parameter count but still capable. It challenges the notion that bigger is always better: on some tasks it is competitive with, and possibly better than, larger models. This suggests that Alibaba has optimized Qwen-1.8B to punch above its weight.

- Specialized Performance: In Alibaba's benchmark results, Qwen-1.8B performs strongly on BBH (BIG-Bench Hard, a set of challenging reasoning tasks) and AGIEval (questions drawn from standardized human exams), indicating that it makes efficient use of its limited size.

- Compact and Powerful: Its potential to surpass larger models in some scenarios points to careful model design and training methods.

How Good is Qwen-72B?

Alibaba's Qwen-72B is not just another large language model; it is built for tasks that require a deep understanding of language. Its 72 billion parameters were trained on 3 trillion tokens, and that breadth of training data lets it handle complex language tasks at a level that, on some benchmarks, approaches human expert performance.

Qwen-72B's 32K Context Window

A remarkable feature of Qwen-72B is its support for a context length of 32K tokens (32,768). This is significant because the model can read and generate long texts in a single pass instead of splitting them into separate requests.

To put this into perspective: at a common rule of thumb of about 0.75 English words per token, 32K tokens is roughly 24,000 words, or about a third of a novel like "Harry Potter and the Sorcerer's Stone" (around 77,000 words). This capability matters for understanding and generating long-form content, which makes the model especially useful for marketers and content creators.
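To make the token-to-word relationship concrete, here is a minimal Python sketch that estimates whether an English text fits in a 32,768-token window and splits it into window-sized pieces if it does not. It assumes about 0.75 English words per token, a rule of thumb rather than a property of Qwen's tokenizer; the function names are hypothetical.

```python
# Rough check of whether a text fits in Qwen-72B's 32K-token context window.
# ASSUMPTION: ~0.75 English words per token (a rule of thumb, not Qwen's tokenizer).

CONTEXT_TOKENS = 32_768      # 32K context length
WORDS_PER_TOKEN = 0.75       # heuristic ratio for English prose


def estimate_tokens(text: str) -> int:
    """Estimate the token count from the whitespace-separated word count."""
    words = len(text.split())
    return round(words / WORDS_PER_TOKEN)


def fits_in_context(text: str, reserve_for_output: int = 0) -> bool:
    """True if the estimated prompt plus reserved output tokens fit in the window."""
    return estimate_tokens(text) + reserve_for_output <= CONTEXT_TOKENS


def chunk_words(text: str, max_tokens: int = CONTEXT_TOKENS) -> list[str]:
    """Split text into pieces whose estimated token count stays within max_tokens."""
    words = text.split()
    words_per_chunk = max(1, int(max_tokens * WORDS_PER_TOKEN))
    return [" ".join(words[i:i + words_per_chunk])
            for i in range(0, len(words), words_per_chunk)]


if __name__ == "__main__":
    novel_words = 77_000  # approximate length of the novel mentioned above
    print(int(CONTEXT_TOKENS * WORDS_PER_TOKEN))                      # 24576
    print(round(CONTEXT_TOKENS * WORDS_PER_TOKEN / novel_words, 2))   # 0.32
```

A real deployment should count tokens with the model's own tokenizer, since the words-per-token ratio varies by language. Chinese text, for example, is not separated by spaces at all, so this word-based estimate does not apply to it.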

Multilingual Support with Qwen-72B

Qwen-72B's tokenizer has a large vocabulary of over 150,000 tokens. A larger vocabulary lets the model encode text in many languages with fewer tokens, which helps it handle a wide array of languages and can improve content generation in non-English languages, where other models such as GPT-4 may fall short.

With its multilingual capabilities, Qwen-72B can produce high-quality localized content.
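Why does vocabulary size matter for non-English text? A larger vocabulary can store whole words or frequent character sequences as single tokens, so the same sentence splits into fewer pieces. The toy Python sketch below uses greedy longest-match tokenization over two made-up vocabularies to show the effect. It is an illustration only, not Qwen's actual byte-level BPE tokenizer, and both vocabularies are hypothetical.

```python
# Toy illustration: a larger vocabulary usually yields fewer tokens per text.
# ASSUMPTION: greedy longest-match over hand-written vocabularies; real LLM
# tokenizers (including Qwen's) use byte-level BPE, which this does not implement.


def greedy_tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split text by repeatedly taking the longest vocabulary entry at the cursor.

    Characters not covered by any entry fall back to single-character tokens.
    """
    max_len = max((len(v) for v in vocab), default=1)
    tokens, i = [], 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab or size == 1:
                tokens.append(piece)
                i += size
                break
    return tokens


# Hypothetical vocabularies: the larger one also contains whole Chinese words.
small_vocab = {"人", "工", "智", "能", "模", "型"}
large_vocab = small_vocab | {"人工智能", "模型"}

sentence = "人工智能模型"  # "artificial intelligence model"
print(greedy_tokenize(sentence, small_vocab))  # six single-character tokens
print(greedy_tokenize(sentence, large_vocab))  # ['人工智能', '模型']
```

The same sentence costs six tokens with the small vocabulary and two with the larger one. Fewer tokens per sentence means more text fits in the context window and generation needs fewer steps.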

Qwen-72B Surpassing GPT-4 on C-Eval

C-Eval is a Chinese-language evaluation suite of multiple-choice exam questions spanning 52 disciplines, from middle-school subjects to professional fields. Qwen-72B scores higher on C-Eval than GPT-4's reported result, which suggests that Qwen-72B:

- Has Broad Knowledge: It can grasp and apply knowledge across a wide range of academic and professional subjects, which makes its answers more useful and its interactions more natural.

- Excels in Problem-Solving: Outscoring GPT-4 on this benchmark suggests that Qwen-72B can give accurate, relevant answers to knowledge-intensive questions, particularly in Chinese.
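Benchmarks such as C-Eval are scored as multiple-choice accuracy: the model picks one option (A to D) per question, and the score is the share of correct picks, which can be reported overall or per subject and then averaged. The Python sketch below shows both calculations on a few invented answers. The records and subject names are hypothetical, and real evaluation harnesses also handle prompting and answer extraction, which are omitted here.

```python
# Sketch of multiple-choice benchmark scoring (C-Eval-style questions, A-D).
# ASSUMPTION: predictions are already extracted as single letters; the sample
# data below is invented for illustration and is not real benchmark output.
from collections import defaultdict


def accuracy(records: list[tuple[str, str, str]]) -> tuple[float, float]:
    """Return (overall accuracy, mean of per-subject accuracies).

    Each record is (subject, predicted_letter, correct_letter).
    """
    per_subject = defaultdict(lambda: [0, 0])  # subject -> [correct, total]
    for subject, predicted, answer in records:
        stats = per_subject[subject]
        stats[0] += int(predicted.strip().upper() == answer.strip().upper())
        stats[1] += 1
    correct = sum(c for c, _ in per_subject.values())
    total = sum(t for _, t in per_subject.values())
    overall = correct / total if total else 0.0
    macro = (sum(c / t for c, t in per_subject.values()) / len(per_subject)
             if per_subject else 0.0)
    return overall, macro


sample = [
    ("physics", "A", "A"),
    ("physics", "C", "B"),
    ("history", "D", "D"),
    ("history", "B", "B"),
    ("history", "A", "C"),
]
overall, macro = accuracy(sample)
print(f"overall={overall:.3f}, per-subject mean={macro:.3f}")
# prints "overall=0.600, per-subject mean=0.583"
```

Reporting both numbers matters because subjects have different question counts: the overall figure weights large subjects more heavily, while the per-subject mean treats every subject equally.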

Qwen-1.8B: Surprisingly Efficient

Qwen-1.8B may have fewer parameters than its larger sibling, Qwen-72B, but it stands out for its efficiency and effectiveness, especially in specific language tasks. Alibaba's Qwen-1.8B is a testament to the idea that in the realm of AI, clever design can sometimes trump sheer size. Here's what makes Qwen-1.8B notable:

- Resourceful and Powerful: According to Alibaba, the quantized versions of Qwen-1.8B can generate 2,048 tokens using only about 3GB of GPU memory. This efficiency makes it an ideal choice for users with hardware constraints, enabling broader access to AI technology.

- Specialized Task Handling: In tasks where nuanced understanding and contextual awareness are key, Qwen-1.8B performs remarkably well for its size, suggesting that it has been carefully trained for areas where precision is paramount.

- Accessible AI: The model's lower hardware requirements mean that more developers and small businesses can experiment with and deploy cutting-edge AI without the need for expensive infrastructure.
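The hardware figures above come down to simple arithmetic: weight memory is roughly the parameter count times the bytes stored per parameter, and generation also needs a key/value (KV) cache that grows with the number of tokens. The Python sketch below estimates both. The Qwen-1.8B shape it uses (24 layers, hidden size 2,048, standard multi-head attention) is an assumption to check against the model's configuration, the parameter counts are nominal, and activations, framework overhead and quantization metadata are ignored, so real usage is higher.

```python
# Back-of-the-envelope GPU memory estimate for LLM inference.
# ASSUMPTIONS: weights dominate; KV cache stored in fp16; the Qwen-1.8B shape
# (24 layers, hidden size 2048, no grouped-query attention) is assumed, not
# read from the official config. Activations, CUDA context and quantization
# scales/zero-points are ignored.

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}
GIB = 1024 ** 3


def weight_gb(num_params: float, precision: str) -> float:
    """Memory for the model weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[precision] / GIB


def kv_cache_gb(num_layers: int, hidden_size: int, num_tokens: int,
                bytes_per_value: float = 2.0) -> float:
    """KV cache: one key and one value vector per layer per token, in GiB."""
    return 2 * num_layers * hidden_size * num_tokens * bytes_per_value / GIB


if __name__ == "__main__":
    for name, params in [("Qwen-1.8B", 1.8e9), ("Qwen-72B", 72e9)]:
        for precision in BYTES_PER_PARAM:
            print(f"{name} {precision}: {weight_gb(params, precision):.1f} GiB of weights")
    # KV cache for generating 2,048 tokens with the assumed Qwen-1.8B shape
    print(f"KV cache: {kv_cache_gb(24, 2048, 2048):.2f} GiB")
```

Under these assumptions, the int4 weights of Qwen-1.8B come to under 1 GiB and the fp16 KV cache for 2,048 tokens to roughly 0.4 GiB, which fits comfortably in a budget of a few gigabytes. Qwen-72B, by contrast, needs well over 100 GiB for its weights in fp16.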

Qwen-1.8B's blend of efficiency and capability represents an important direction for AI development: powerful technology within reach of a wider audience. The goal is not just to build the biggest model but to make AI work smarter and be more accessible, democratizing the power of machine learning.

Qwen-1.8B is available on Hugging Face: https://huggingface.co/Qwen/Qwen-1_8B

Concluding Thoughts on Qwen-72B and Qwen-1.8B

The Qwen series, with its Qwen-72B and Qwen-1.8B models, is a significant achievement in artificial intelligence. These models show that AI can not only approach a human-like understanding of language but also do so efficiently enough to be widely accessible. The implications are considerable: advanced AI tools are coming within reach of a wider community of users and developers.

Qwen-72B, with its large vocabulary and extended context length, is well placed to advance long-form content creation and multilingual communication. Meanwhile, Qwen-1.8B shows that power can come in smaller packages: an optimized model can deliver high-quality output on limited computational resources.

The impact of these models extends beyond the technical sphere into practical applications across many areas of life and work. They could transform customer experiences, streamline operations, and open new avenues for creative expression.

The decision to open-source these models fosters a collaborative environment where innovation is fueled by shared knowledge and diverse contributions. If the Qwen series becomes a cornerstone for new AI-driven solutions across industries, the future looks bright and intelligent. The journey toward an AI-integrated world continues, and the Qwen series is at the forefront, charting the course for a smarter tomorrow.