Llama 3, the latest and most powerful language model from Meta, has taken the AI world by storm. With its impressive capabilities and open-source nature, it has become a highly sought-after tool for developers, researchers, and AI enthusiasts alike. However, running such a large and complex model can be a daunting task, especially for those new to the field. That's where Ollama comes in – a user-friendly platform designed to simplify the process of running and interacting with large language models like Llama 3.

In this comprehensive guide, we'll take you through the step-by-step process of running Llama 3 with Ollama, covering everything from installation to advanced features. We'll also explore the integration of Anakin AI's API, which can further enhance your experience with Llama 3 and open up new possibilities for your AI projects.

Understanding Llama 3

Before we dive into the nitty-gritty of running Llama 3 with Ollama, let's take a moment to understand what Llama 3 is and why it's such a game-changer in the AI world.

Llama 3 is a large language model developed by Meta, the company behind social media platforms like Facebook and Instagram. It is a successor to the highly successful Llama 2 model and boasts several improvements, including:

Larger Training Dataset: Llama 3 was trained on a dataset seven times larger than its predecessor, allowing it to capture a broader range of knowledge and language patterns.

Increased Context Length: With a context length of 8K tokens, Llama 3 can process and understand longer sequences of text, making it more suitable for tasks that require extensive context, such as document summarization or question-answering.

Efficient Language Encoding: Llama 3 uses a larger token vocabulary of 128K tokens, enabling it to encode language more efficiently and accurately.

Reduced Refusals: Compared to Llama 2, Llama 3 exhibits less than one-third of the false "refusals" when presented with prompts, making it more reliable and responsive.

With these impressive improvements, Llama 3 has become a powerful tool for a wide range of natural language processing tasks, including text generation, translation, summarization, and question-answering.

Introducing Ollama

Ollama is an open-source platform designed to make it easy for users to run and interact with large language models like Llama 3. It provides a user-friendly interface and a set of tools that simplify the process of downloading, configuring, and running these models.

One of the key features of Ollama is its ability to run models locally on your machine, eliminating the need for expensive cloud computing resources. This not only saves you money but also ensures that your data remains private and secure.

Ollama supports a variety of models, including Llama 3, and offers them in different sizes and variants, such as instruction-tuned models for chat and question-answering and base (pre-trained) models for plain text completion. It also provides a command-line interface (CLI) and an API, allowing you to integrate Llama 3 into your own applications and workflows.

Installing Ollama

The first step in running Llama 3 with Ollama is to install the platform on your machine. Ollama supports all major operating systems, including Windows, macOS, and Linux.

Windows Installation

For Windows users, Ollama provides a simple installer that guides you through the installation process. Here's how you can install Ollama on Windows:

- Visit the Ollama website (https://ollama.com) and navigate to the "Download" section.

- Click on the "Windows" button to download the installer.

- Once the download is complete, run the installer and follow the on-screen instructions.

- During the installation process, you may be prompted to install additional dependencies or components. Follow the prompts and complete the installation.

macOS Installation

Installing Ollama on macOS is equally straightforward. Here are the steps:

- Visit the Ollama website (https://ollama.com) and navigate to the "Download" section.

- Click on the "macOS" button to download the installer.

- Once the download is complete, double-click on the installer package to begin the installation process.

- Follow the on-screen instructions to complete the installation.

Linux Installation

For Linux users, Ollama provides an official install script, along with manual installation instructions for those who prefer not to pipe a script into a shell. Here's how to install Ollama on a Linux distribution such as Ubuntu:

- Open a terminal window.

- Download and run the install script with the following command:

curl -fsSL https://ollama.com/install.sh | sh

- Follow any prompts. The script may ask for your password so that it can install system components.

After the installation is complete, you can verify the installation by running the following command:

ollama --version

This should display the version of Ollama installed on your system.
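If you would rather run the same check from a script, for example as the first step of a setup routine, the following is a minimal Python sketch. It only assumes that the `ollama` binary is on your PATH; the function name `ollama_version` is our own and not part of Ollama.

```python
import shutil
import subprocess
from typing import Optional


def ollama_version(binary: str = "ollama") -> Optional[str]:
    """Return the text printed by `<binary> --version`, or None if not installed."""
    path = shutil.which(binary)  # look the executable up on PATH
    if path is None:
        return None
    result = subprocess.run(
        [path, "--version"],
        capture_output=True,
        text=True,
        check=False,
        timeout=30,
    )
    # Prefer stdout; fall back to stderr in case the text was written there.
    return (result.stdout or result.stderr).strip() or None
```

Calling `ollama_version()` returns `None` when Ollama is not installed, which makes it easy to show a friendly message instead of a stack trace.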

With Ollama installed on your machine, you're now ready to start running Llama 3.

Running Llama 3 with Ollama

Running Llama 3 with Ollama is a straightforward process, thanks to Ollama's user-friendly interface and command-line tools.

Downloading Llama 3

Before you can run Llama 3, you need to download the model files. Ollama provides a convenient command-line tool for downloading and managing models.

Open a terminal or command prompt on your machine.

Run the following command to download Llama 3:

ollama pull llama3

This command will download the Llama 3 model files to your machine. Depending on your internet connection speed and the size of the model, this process may take some time.
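To confirm that the download finished, you can ask the local Ollama server which models it has. The sketch below assumes the server is running at its default address, http://localhost:11434, and uses the `/api/tags` endpoint, which lists local models. The helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default address of the local server


def model_names(tags: dict) -> list[str]:
    """Extract model names from the JSON returned by GET /api/tags."""
    return [m["name"] for m in tags.get("models", [])]


def has_model(tags: dict, name: str) -> bool:
    """True if `name` is in the local list; a bare name is treated as `name:latest`."""
    wanted = name if ":" in name else f"{name}:latest"
    return wanted in model_names(tags)


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Ask the local Ollama server which models it has downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return json.load(resp)
```

With the server running, `has_model(fetch_tags(), "llama3")` should be true once `ollama pull llama3` has completed.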

Running Llama 3 in the Terminal

Once the model files are downloaded, you can run Llama 3 directly from the terminal or command prompt. Here's how:

Open a terminal or command prompt on your machine.

Run the following command to start Llama 3:

ollama run llama3

This command will load the Llama 3 model into memory and start an interactive session.

You can now interact with Llama 3 by typing your prompts or queries into the terminal. For example, you can ask Llama 3 to generate text on a specific topic, summarize a document, or answer questions.

Human: What is the capital of France?

Llama 3: The capital of France is Paris.

To exit the interactive session, type /bye or press Ctrl+D. (Ctrl+C interrupts the response being generated rather than ending the session.)
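The same kind of exchange can be driven from code instead of the terminal. The following is a minimal sketch using Ollama's `/api/chat` endpoint with only the Python standard library. It assumes the server is listening at the default http://localhost:11434 and that `llama3` has already been pulled; the function names are ours.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default address of the local server


def build_chat_payload(history: list[dict], user_message: str, model: str = "llama3") -> dict:
    """Build the JSON body for /api/chat without modifying `history`."""
    messages = history + [{"role": "user", "content": user_message}]
    return {"model": model, "messages": messages, "stream": False}


def chat(history: list[dict], user_message: str, model: str = "llama3",
         base_url: str = OLLAMA_URL) -> list[dict]:
    """Send one user turn and return the history extended with the model's reply."""
    payload = build_chat_payload(history, user_message, model)
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        reply = json.load(resp)["message"]  # {"role": "assistant", "content": "..."}
    return payload["messages"] + [reply]
```

Passing the returned list back in as `history` on the next call keeps the conversation context, which is what the interactive session does for you automatically.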

Running Llama 3 with a Web Interface

In addition to the command-line interface, you can interact with Llama 3 through a web-based interface. The Ollama server itself exposes an API (by default at http://localhost:11434) rather than a browser chat page, so the chat interface comes from a separate front end, such as Open WebUI, that connects to your local Ollama server. The steps below assume such a front end is already installed and listening on port 8080.

- Open a web browser and navigate to http://localhost:8080.

- You should see the web interface. If prompted, create a new account or log in with your existing credentials.

- Once logged in, you should see a list of available models. Select "Llama 3" from the list.

- You can now start interacting with Llama 3 by typing your prompts or queries into the text box and clicking the "Send" button.

- Llama 3's responses will be displayed in the chat window, allowing you to have a natural conversation with the model.

Depending on the front end you choose, the web interface may also provide additional features, such as the ability to save and load conversations, adjust model settings, and access documentation and examples.

Build an AI App with Anakin AI's API

While Ollama provides a powerful and user-friendly platform for running Llama 3, integrating Anakin AI's API can further enhance your experience and open up new possibilities for your AI projects.

If you want to build AI apps without writing much code, Anakin AI is worth a look.

Anakin AI is an all-in-one platform for workflow automation. It lets you create powerful AI apps with an easy-to-use no-code app builder, using Llama 3, Claude, GPT-4, uncensored LLMs, Stable Diffusion, and more.

Build your dream AI app within minutes, not weeks, with Anakin AI!

Anakin AI offers a comprehensive API service that empowers developers and organizations to seamlessly integrate and enhance their projects using Anakin AI's APIs. By leveraging these APIs, users gain the flexibility to easily access Anakin AI's robust product features within their own applications.

Advantages of API Integration with Anakin AI

Integrating Anakin AI's API with Llama 3 and Ollama offers several advantages:

Rapid Development: You can rapidly develop AI applications tailored to your business needs using Anakin AI's intuitive visual interface, with real-time implementation across all clients.

Model Flexibility: Anakin AI supports multiple AI model providers, allowing you the flexibility to switch providers as needed.

Pre-packaged Functionality: You gain pre-packaged access to the essential functionalities of the AI model, streamlining the development process.

Future-proofing: Stay ahead of the curve with upcoming advanced features available through the API.

How to Use Anakin AI's API

To use Anakin AI's API with Llama 3 and Ollama, follow these steps:

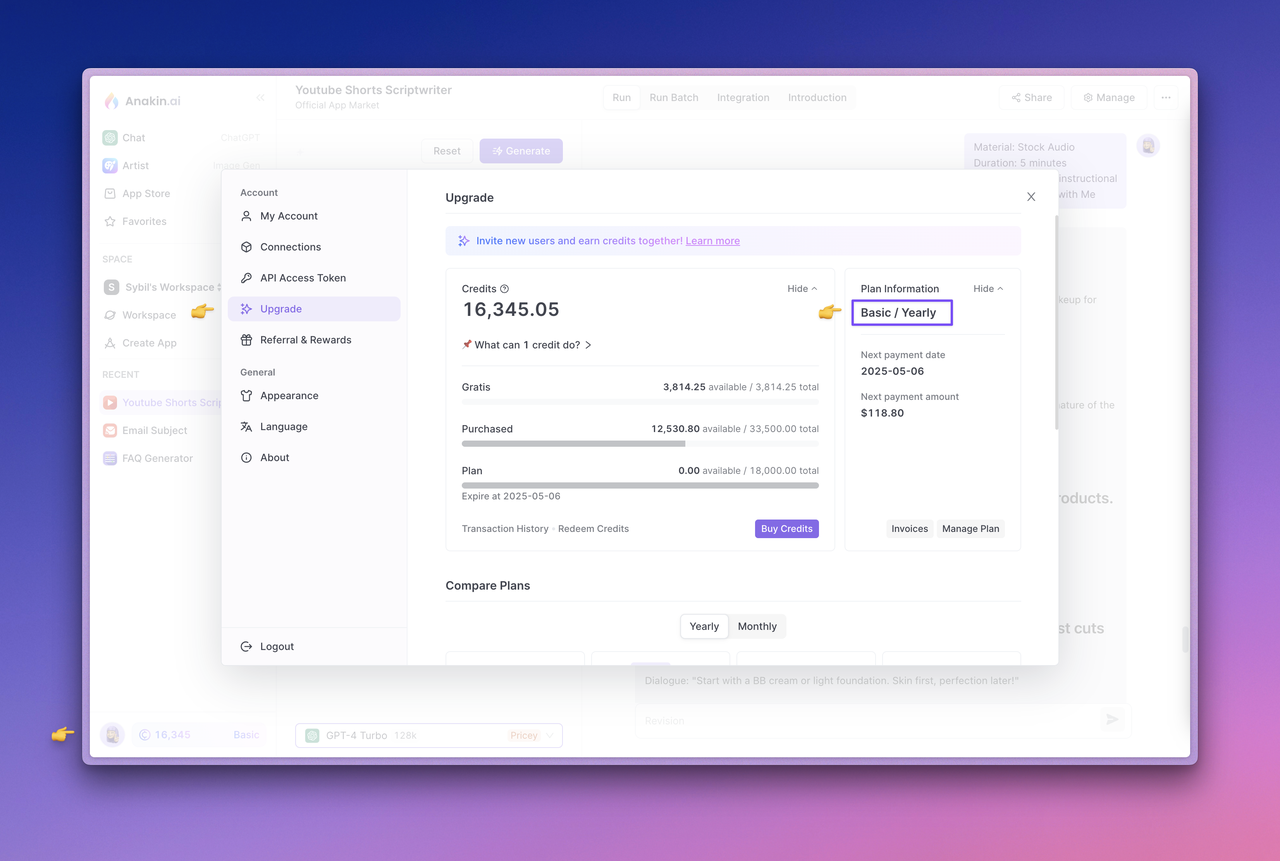

Upgrade Your Plan and Check Your Account Credits: Anakin AI's API service is currently exclusively available to subscribers. While using the AI model through API calls, credits from your account balance will be consumed. To check your subscription status or upgrade your plan, navigate to the Anakin AI Web App, click on the avatar located in the lower left corner, and access the Upgrade page. Ensure that your current account has sufficient credits.

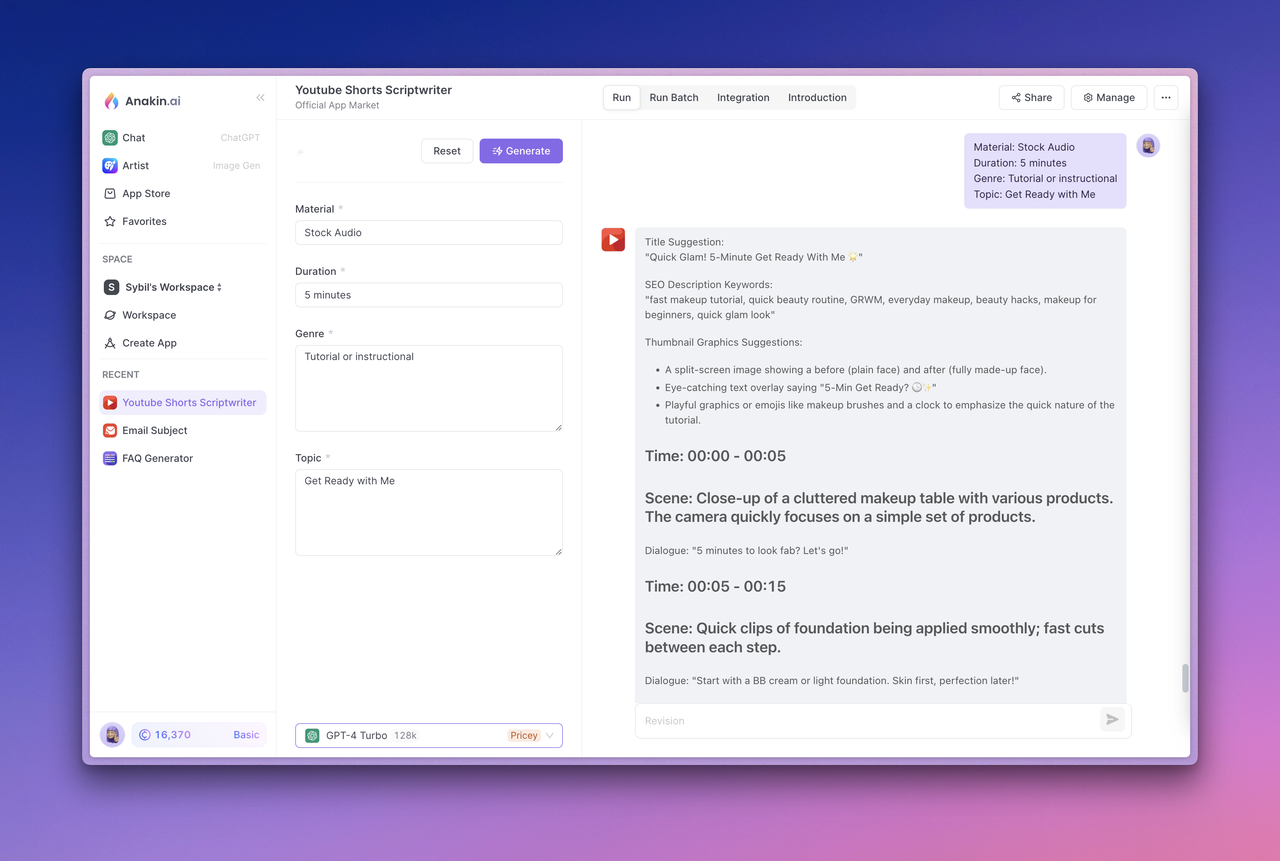

Test Your App: Select the app you want to use and click the "Generate" button. Confirm that it runs properly and produces the expected output before proceeding.

View API Documentation and Manage API Access Tokens: Next, visit the app Integration section at the top. In this section, you can click "View Details" to view the API documentation provided by Anakin AI, manage access tokens, and view the App ID.

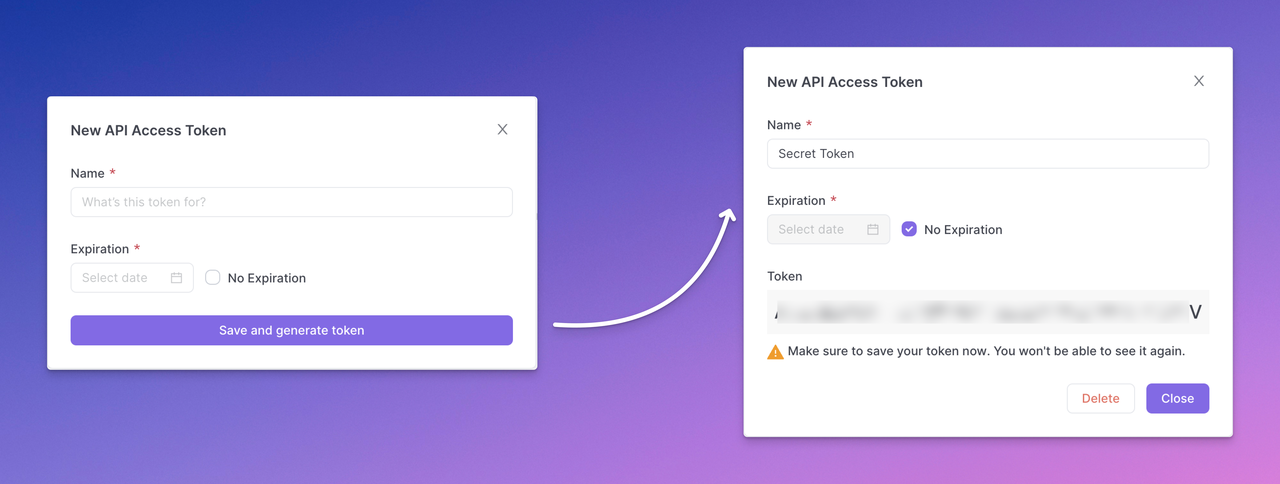

Generate Your API Access Token: Click the "Manage Token" button to manage your API Access token and select "New Token" to generate your API access token. Complete the token configuration, then click "Save and generate token," and finally copy and save the API Access Token securely.

Note: The generated API access token will only be displayed once. Make sure to copy and save it securely right away. It's a best practice to keep API keys on your backend and make API calls from there, rather than exposing them in plaintext in frontend code or requests, to prevent abuse or attacks on your app.
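One common way to follow this advice on the backend is to read the token from an environment variable instead of hard-coding it. The sketch below is an illustration only: the variable name `ANAKINAI_API_ACCESS_TOKEN` is borrowed from the placeholder used in this guide and is our assumption, not something Anakin AI requires.

```python
import os
from typing import Mapping


def load_api_token(env: Mapping[str, str] = os.environ,
                   var: str = "ANAKINAI_API_ACCESS_TOKEN") -> str:
    """Read the API token from the server's environment; fail loudly if it is missing."""
    token = env.get(var, "").strip()
    if not token:
        raise RuntimeError(f"Set the {var} environment variable on the server")
    return token
```

Because the token never appears in source code or in anything sent to the browser, rotating it only requires changing the server's environment.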

Use the API: With your API Access Token in hand, you can now integrate Anakin AI's API into your Ollama workflow or application. Anakin AI provides detailed API documentation and sample requests for various use cases, such as text generation (Quick App) and chatbot interactions (Chatbot App).

For example, to generate text using the Quick App API, you can make a POST request like this:

curl --location --request POST 'https://api.anakin.ai/v1/quickapps/{{appId}}/runs' \
--header 'Authorization: Bearer ANAKINAI_API_ACCESS_TOKEN' \
--header 'X-Anakin-Api-Version: 2024-05-06' \
--header 'Content-Type: application/json' \
--data-raw '{
  "inputs": {
    "Product/Service": "Cloud Service",
    "Features": "Reliability and performance.",
    "Advantages": "Efficiency",
    "Framework": "Attention-Interest-Desire-Action"
  },
  "stream": true
}'

Replace {{appId}} with the appropriate App ID and ANAKINAI_API_ACCESS_TOKEN with your generated API Access Token.
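If your backend is written in Python, the same request can be built with the standard library. This is a sketch that mirrors the curl example above field for field. The URL, headers, and body keys come from that example; the function name is hypothetical, and the response format is not covered in this guide, so the usage note simply prints whatever comes back.

```python
import json
import urllib.request

API_VERSION = "2024-05-06"  # value taken from the curl example in this guide


def build_quickapp_request(app_id: str, token: str, inputs: dict,
                           stream: bool = True) -> urllib.request.Request:
    """Build (but do not send) the POST request for a Quick App run."""
    url = f"https://api.anakin.ai/v1/quickapps/{app_id}/runs"
    body = json.dumps({"inputs": inputs, "stream": stream}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "X-Anakin-Api-Version": API_VERSION,
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To send it, pass the request to `urllib.request.urlopen(req)` and iterate over the response, printing each line with `line.decode("utf-8")`. Consult Anakin AI's API documentation for the exact shape of the streamed output.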

By integrating Anakin AI's API with Llama 3 and Ollama, you can unlock a world of possibilities for your AI projects, from building custom applications to enhancing existing workflows with advanced AI capabilities.

Advanced Features and Use Cases

While running Llama 3 with Ollama and integrating Anakin AI's API can provide a powerful foundation for your AI projects, there are several advanced features and use cases that you can explore to further enhance your experience.

Fine-tuning Llama 3

One of the most powerful features of Llama 3 is its ability to be fine-tuned on specific datasets or tasks. Fine-tuning involves taking the pre-trained Llama 3 model and further training it on a smaller, task-specific dataset. This process can significantly improve the model's performance on that specific task, making it more accurate and reliable.

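Ollama does not train models itself, but it can run a fine-tuned model once you have one: fine-tune Llama 3 with a separate training tool, then import the resulting weights or adapter into Ollama through a Modelfile. This can be particularly useful for tasks such as: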

Domain-specific language generation: Fine-tune Llama 3 on a dataset specific to your industry or domain, such as legal documents, medical reports, or technical manuals, to generate more accurate and relevant text.

Sentiment analysis: Train Llama 3 on a dataset of labeled reviews or social media posts to improve its ability to detect and classify sentiment accurately.

Named entity recognition: Fine-tune Llama 3 to better identify and classify named entities, such as people, organizations, and locations, in text.

Question-answering: Improve Llama 3's performance on question-answering tasks by fine-tuning it on a dataset of questions and answers specific to your domain or use case.
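One way to bring a fine-tuned model into Ollama, assuming the training was done with a separate tool that produced a LoRA adapter for the same Llama 3 base model, is a Modelfile that combines the `FROM` and `ADAPTER` instructions. The sketch below only generates the Modelfile text; the adapter path and the function name are hypothetical placeholders.

```python
def render_modelfile(base_model: str, adapter_path: str, system_prompt: str = "") -> str:
    """Return Modelfile text that layers a LoRA adapter on top of a base model."""
    lines = [
        f"FROM {base_model}",        # base model the adapter was trained against
        f"ADAPTER {adapter_path}",   # path to the fine-tuned adapter (hypothetical)
    ]
    if system_prompt:
        # Triple quotes let the system prompt span several lines.
        lines.append(f'SYSTEM """{system_prompt}"""')
    return "\n".join(lines) + "\n"
```

Write the returned text to a file named `Modelfile`, then build and run the model with `ollama create my-llama3 -f Modelfile` followed by `ollama run my-llama3`, where `my-llama3` is whatever name you choose.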

Integrating Llama 3 into Applications

While Ollama provides a convenient interface for interacting with Llama 3, you may want to integrate the model directly into your own applications or workflows. Ollama offers an API that allows you to do just that.

Using the Ollama API, you can programmatically interact with Llama 3, sending prompts or queries and receiving the model's responses. This can be particularly useful for building applications such as:

Chatbots and virtual assistants: Integrate Llama 3 into your chatbot or virtual assistant application to provide natural language understanding and generation capabilities.

Content generation tools: Build tools that leverage Llama 3's text generation capabilities to assist with tasks such as writing, ideation, and content creation.

Question-answering systems: Develop systems that can answer questions accurately and provide relevant information by leveraging Llama 3's knowledge and understanding.

Summarization and analysis tools: Create tools that can summarize long documents or analyze text for key insights and information using Llama 3's language understanding capabilities.
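As a starting point for any of these applications, here is a minimal sketch of calling Ollama's `/api/generate` endpoint and assembling its streamed output. It assumes the server is at the default http://localhost:11434 and that `llama3` is already downloaded. By default the endpoint streams newline-delimited JSON objects, each carrying a `response` fragment and a `done` flag. The function names are ours.

```python
import json
import urllib.request
from typing import Iterable, Iterator

OLLAMA_URL = "http://localhost:11434"  # assumed default address of the local server


def iter_tokens(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from a stream of newline-delimited JSON objects."""
    for raw in lines:
        if not raw.strip():
            continue  # skip blank keep-alive lines
        chunk = json.loads(raw)
        yield chunk.get("response", "")
        if chunk.get("done"):
            break  # the final object marks the end of the answer


def generate(prompt: str, model: str = "llama3", base_url: str = OLLAMA_URL) -> str:
    """Send a prompt to the local server and return the full generated text."""
    body = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return "".join(iter_tokens(resp))  # the response object iterates line by line
```

A summarization tool, for instance, could call `generate("Summarize the following text:\n" + document)`. Keeping `iter_tokens` separate also lets a chatbot print fragments as they arrive instead of waiting for the whole answer.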

Exploring Other Llama Models

While Llama 3 is the latest and most powerful model in the Llama family, Ollama also supports earlier Llama models, such as Llama 2. These models may be more suitable for certain tasks or use cases, depending on your specific requirements.

For example, a smaller model or a more heavily quantized variant may be a better choice if you have limited computational resources or need a smaller memory footprint. An earlier model such as Llama 2 can also be useful for comparison, or for tasks that don't require the full power of Llama 3, such as simple text generation or classification.

Ollama makes it easy to switch between different Llama models, allowing you to experiment and find the best fit for your needs.
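In API terms, switching models only means changing the `model` field of the request. The sketch below sends the same prompt to several models so you can compare their answers side by side. It assumes each model named has already been pulled and that the server is at the default http://localhost:11434; `ask` and `compare` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Callable

OLLAMA_URL = "http://localhost:11434"  # assumed default address of the local server


def ask(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send one non-streaming /api/generate request and return the answer text."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)["response"]


def compare(prompt: str, models: list[str],
            ask_fn: Callable[[str, str], str] = ask) -> dict[str, str]:
    """Return each model's answer to the same prompt, keyed by model name."""
    return {model: ask_fn(model, prompt) for model in models}
```

For example, `compare("Explain recursion in one sentence.", ["llama3", "llama2"])` returns a dictionary with one answer per model. The `ask_fn` parameter exists so the comparison logic can be exercised without a running server.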

Conclusion

Running Llama 3 with Ollama and integrating Anakin AI's API can unlock a world of possibilities for your AI projects. From natural language processing tasks to content generation and beyond, this powerful combination provides a robust and flexible platform for exploring the capabilities of large language models.

By following the steps outlined in this comprehensive guide, you'll be able to install Ollama, download and run Llama 3, and integrate Anakin AI's API to enhance your workflow. Additionally, you'll learn about advanced features such as fine-tuning, application integration, and exploring other Llama models.

As the field of AI continues to evolve rapidly, staying up-to-date with the latest tools and technologies is crucial. Ollama and Anakin AI offer a powerful and user-friendly solution for harnessing the power of Llama 3 and other large language models, empowering you to push the boundaries of what's possible with AI.

So, what are you waiting for? Start your journey with Llama 3 and Ollama today, and unlock a world of possibilities for your AI projects!
