The Gemini 1.5 Pro API lets developers integrate Google's Gemini 1.5 Pro model into their applications. It gives you access to Google's latest generative models for a wide range of tasks, such as text generation, question answering, and image captioning. In this guide, we'll walk you through using the Gemini 1.5 Pro API, from setting up your development environment to building your first application.

Don't miss out on Anakin AI!

Anakin AI is an all-in-one platform for workflow automation. Create powerful AI apps with its easy-to-use no-code app builder, using Llama 3, Claude, GPT-4, uncensored LLMs, Stable Diffusion, and more.

Build your dream AI app within minutes, not weeks, with Anakin AI!

Step 1: Generate an API Key

Before you can start using the Gemini 1.5 Pro API, you'll need to obtain an API key from the Google AI for Developers page. This key will authenticate your requests and grant you access to the API.

- Visit the Google AI for Developers page and log in with your Google account.

- Click on the "Get an API key" button.

- Follow the prompts to create a new project or select an existing one.

- Once your project is set up, you'll be provided with an API key. Make sure to keep this key secure, as it will be required for all API requests.

Step 2: Install the API Library

To simplify the process of interacting with the Gemini 1.5 Pro API, Google provides official client libraries for various programming languages. In this guide, we'll be using the Python library, but the process is similar for other languages.

- Open your terminal or command prompt.

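- Install the Gemini Python SDK with pip. We also install Pillow, which provides the PIL module used to load images later in this guide:

```shell
pip install google-generativeai pillow
```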

- Once the installation is complete, you can import the necessary libraries into your Python script:

```python
import google.generativeai as genai
from PIL import Image  # provided by the Pillow package
```

Step 3: Configure the API Key

Before making any API calls, you'll need to configure your API key in your Python script:

```python
GOOGLE_API_KEY = 'your-api-key-goes-here'
genai.configure(api_key=GOOGLE_API_KEY)
```

Replace 'your-api-key-goes-here' with the API key you obtained in Step 1.
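Hard-coding the key works for a quick test, but it is easy to leak it by committing the script. A common alternative is to keep the key in an environment variable. The sketch below assumes a variable named GOOGLE_API_KEY (an assumption; use whatever name your setup defines) and fails early with a clear message if it is missing. load_api_key is a hypothetical helper, not part of the SDK.

```python
import os


def load_api_key(env_var: str = "GOOGLE_API_KEY") -> str:
    """Read the Gemini API key from an environment variable.

    Hypothetical helper: the variable name is an assumption for this sketch.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Gemini API key."
        )
    return key
```

You would then configure the SDK with genai.configure(api_key=load_api_key()), keeping the key itself out of your source code.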

Step 4: Access the Gemini 1.5 Pro Model

The Gemini API provides access to several models, each with its own capabilities and specializations. In this example, we'll use gemini-1.5-pro-latest, an alias that points to the most recent version of the Gemini 1.5 Pro model.

```python
model = genai.GenerativeModel('gemini-1.5-pro-latest')
```
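If you're unsure which model names your key can use, the SDK's genai.list_models() yields model descriptions that include a name and a supported_generation_methods list. The helper below (generation_model_names is a hypothetical name, not part of the SDK) keeps only the models that support generateContent. It accepts any iterable of such objects, so the filtering logic can be tried without a network call.

```python
from typing import Iterable, List


def generation_model_names(models: Iterable) -> List[str]:
    """Return the names of models that advertise 'generateContent' support.

    Hypothetical helper: each item is expected to expose `.name` and
    `.supported_generation_methods`, like the objects from genai.list_models().
    """
    return [
        model.name
        for model in models
        if "generateContent" in getattr(model, "supported_generation_methods", [])
    ]
```

With a configured key, print(generation_model_names(genai.list_models())) lists the model names you can pass to GenerativeModel.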

Step 5: Generate Content

With the model initialized, you can now start generating content using the Gemini 1.5 Pro API. The API supports both text-only and multimodal prompts, allowing you to incorporate images, videos, and other media into your requests.

Text-only Prompt

To generate text from a text-only prompt, you can use the generate_content method:

```python
prompt = "Write a story about a magic backpack."
response = model.generate_content(prompt)
print(response.text)
```

This will generate a story based on the provided prompt and print the result to the console.
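One practical detail: in the google-generativeai SDK, the response.text shortcut raises a ValueError when the response contains no usable text, for example when the prompt or the output was blocked by safety filters. The small wrapper below (safe_text is a hypothetical helper name) returns a fallback string instead, so a blocked response doesn't crash your script.

```python
def safe_text(response, fallback: str = "") -> str:
    """Return response.text, or `fallback` if the SDK reports no valid text.

    Hypothetical helper: google-generativeai raises ValueError from the
    .text accessor when the response has no usable parts (e.g. it was blocked).
    """
    try:
        return response.text
    except ValueError:
        return fallback
```

For example, print(safe_text(model.generate_content(prompt), fallback="[no text returned]")) prints the story, or the fallback if nothing usable came back.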

Multimodal Prompt

The Gemini 1.5 Pro API also supports multimodal prompts, which combine text and images. Here's an example of how to generate a caption for an image:

```python
text_prompt = "Describe the image in detail."
image = Image.open('example_image.jpg')

prompt = [text_prompt, image]

response = model.generate_content(prompt)
print(response.text)
```

In this example, we first define a text prompt and open an image file using the PIL library. We then combine the text prompt and the image into a list, which serves as the prompt for the generate_content method. The API will generate a detailed description of the image based on the provided prompt.
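Instead of a PIL image, the SDK also accepts raw image bytes passed as a dict with mime_type and data keys. The sketch below builds such a part from a file path, guessing the MIME type from the file extension with Python's standard mimetypes module. image_part and multimodal_prompt are hypothetical helper names used only for illustration.

```python
import mimetypes
from pathlib import Path


def image_part(path: str) -> dict:
    """Read an image file and wrap it as an inline-data part (hypothetical helper)."""
    mime_type, _ = mimetypes.guess_type(path)
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"Cannot determine an image MIME type for {path!r}")
    return {"mime_type": mime_type, "data": Path(path).read_bytes()}


def multimodal_prompt(text: str, image_path: str) -> list:
    """Combine a text instruction and an image into one prompt list."""
    return [text, image_part(image_path)]
```

The result can be passed straight to the model, e.g. model.generate_content(multimodal_prompt("Describe the image in detail.", "example_image.jpg")). This avoids the Pillow dependency when you only need to forward the file as-is.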

Step 6: Explore Advanced Features

The Gemini 1.5 Pro API offers a wide range of advanced features, such as multi-turn conversations (chat), streamed responses, and embeddings. Let's explore some of these features in more detail.

Multi-turn Conversations (Chat)

The Gemini API allows you to build interactive chat experiences for your users. Using the chat feature, you can collect multiple rounds of questions and responses, enabling users to incrementally work towards answers or get help with multi-part problems.

```python
# This example uses the lighter gemini-1.5-flash model;
# the gemini-1.5-pro-latest model from Step 4 supports chat the same way.
model = genai.GenerativeModel('gemini-1.5-flash')
chat = model.start_chat(history=[])

response = chat.send_message("Pretend you're a snowman and stay in character for each response.")
print(response.text)

response = chat.send_message("What's your favorite season of the year?")
print(response.text)
```

In this example, we start a new chat session with an empty history. We then send two messages to the chat, with the model responding in character as a snowman. The send_message method allows you to continue the conversation by sending additional messages.
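The history argument doesn't have to start empty. start_chat accepts a list of earlier messages, each a dict with a role ("user" or "model") and a list of parts, which lets you resume a previous conversation. build_history below is a hypothetical helper that turns (user, model) message pairs into that shape.

```python
from typing import Dict, List, Tuple


def build_history(turns: List[Tuple[str, str]]) -> List[Dict[str, object]]:
    """Convert (user_message, model_reply) pairs into role/parts dicts.

    Hypothetical helper; the output matches the history format start_chat accepts.
    """
    history: List[Dict[str, object]] = []
    for user_text, model_text in turns:
        history.append({"role": "user", "parts": [user_text]})
        history.append({"role": "model", "parts": [model_text]})
    return history
```

If you saved the pairs from an earlier session as previous_turns, model.start_chat(history=build_history(previous_turns)) continues from where that conversation left off.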

Streamed Responses

The Gemini API can also return responses as a stream. Instead of waiting for the full result, your application receives the response in incremental pieces as the model generates it, creating a more interactive experience for your users.

```python
response = model.generate_content(prompt, stream=True)

# Iterating over a streamed response yields chunks as they arrive.
for chunk in response:
    print(chunk.text, end='')
```

In this example, we set the stream parameter to True when calling the generate_content method. The returned response can then be iterated over directly, and we print each chunk's text as soon as it becomes available.
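If you also need the complete text once streaming finishes (for logging or storage, say), you can accumulate the chunks while printing them. print_stream is a hypothetical helper; it relies only on each chunk having a .text attribute, as the chunks of a streamed generate_content response do, so the logic can be exercised with stand-in objects.

```python
import sys
from typing import Iterable, TextIO


def print_stream(chunks: Iterable, out: TextIO = sys.stdout) -> str:
    """Write each chunk's text as it arrives and return the full text.

    Hypothetical helper: any iterable of objects with a `.text` string works.
    """
    pieces = []
    for chunk in chunks:
        piece = chunk.text
        out.write(piece)
        out.flush()  # show partial output immediately
        pieces.append(piece)
    out.write("\n")
    return "".join(pieces)
```

With the SDK, full_text = print_stream(model.generate_content(prompt, stream=True)) prints the story incrementally and keeps a copy of the whole response.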

Embeddings

The Gemini API also provides an embedding service that converts words, phrases, and sentences into numerical vectors (embeddings). These embeddings can be used for natural language processing tasks such as semantic search, text classification, and clustering.

```python
import google.generativeai as genai

text = "This is an example sentence."

# embed_content returns a dict whose "embedding" key holds a list of floats.
result = genai.embed_content(model="models/text-embedding-004", content=text)

print(result["embedding"])
```

In this example, we call genai.embed_content with an embedding model and the input text. The returned vector can then be used in downstream tasks or applications such as search and clustering.
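To see how embeddings support semantic search: texts with similar meaning tend to have vectors pointing in similar directions, which is commonly measured with cosine similarity. The sketch below ranks documents against a query in plain Python. The vectors are assumed to come from genai.embed_content, and cosine_similarity and rank_by_similarity are hypothetical helper names.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat zero vectors as unrelated
    return dot / (norm_a * norm_b)


def rank_by_similarity(
    query: Sequence[float], docs: Sequence[Tuple[str, Sequence[float]]]
) -> List[Tuple[str, float]]:
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in docs]
    return sorted(scored, key=lambda item: item[1], reverse=True)
```

In practice you would embed the query and each document with genai.embed_content(...)["embedding"] and pass the resulting lists in. For large collections, a vector database or a library such as NumPy would be faster than this pure-Python loop.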

API Integration with Anakin.ai

Anakin.ai offers a comprehensive API service for its applications, enabling developers and organizations to integrate Anakin.ai's product features directly into their own projects and applications.

This lets you meet specific customization requirements without managing complex backend architecture and deployment processes, which reduces development cost and workload.

Advantages of API Integration

- Rapidly develop AI applications tailored to your business needs using Anakin.ai's intuitive visual interface, with real-time implementation across all clients.

- Support for multiple AI model providers, allowing you the flexibility to switch providers as needed.

- Pre-packaged access to the essential functionalities of the AI model.

- Stay ahead of the curve with upcoming advanced features available through the API.

How to Use the Gemini API with Anakin AI

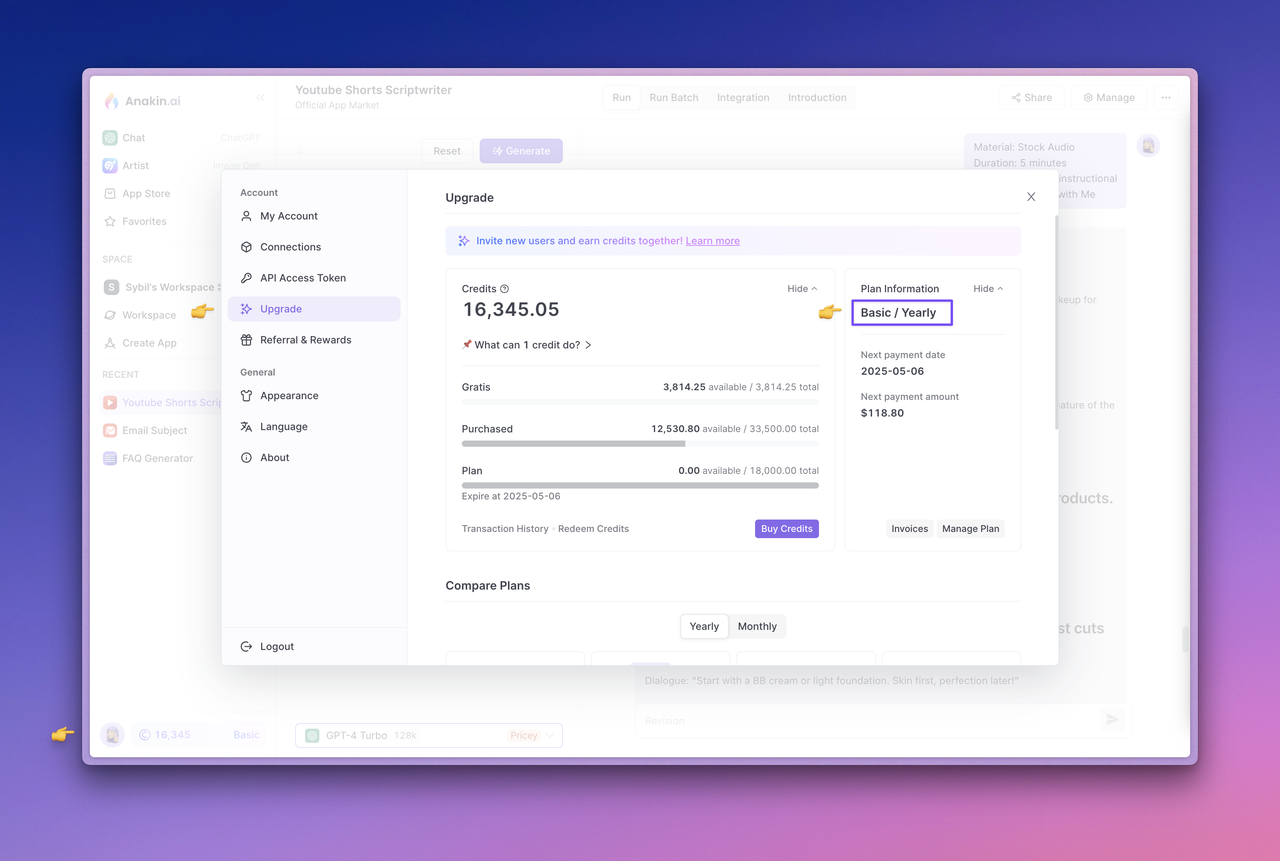

Step 1: Upgrade Your Plan and Check Your Account Credits

The API service is currently available only to subscribers, and calling AI models through the API consumes credits from your account balance. To check your subscription status or upgrade your plan, open the Anakin.ai Web App and click the avatar in the lower-left corner to reach the Upgrade page. Make sure your account has sufficient credits.

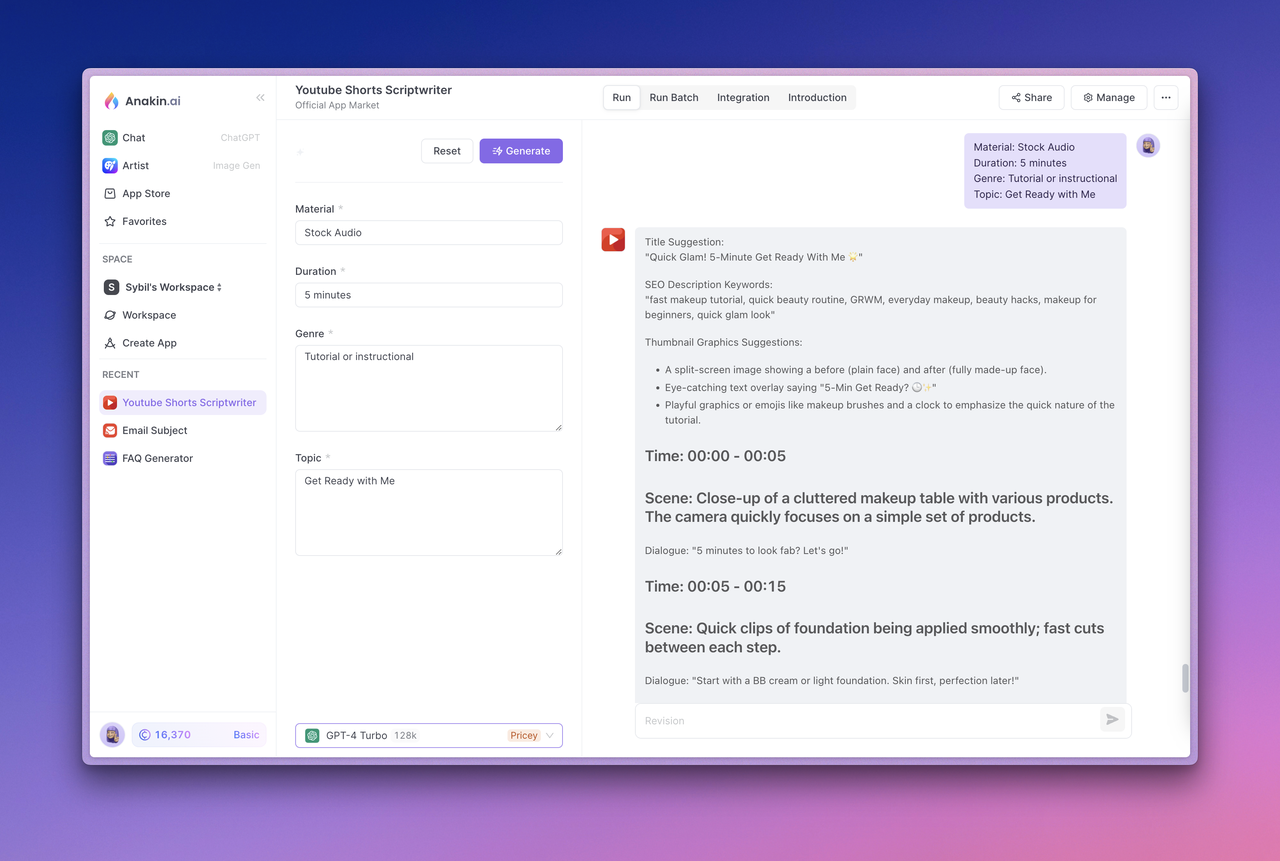

Step 2: Test Your App

To test the app, select it and click the Generate button. Confirm that it runs properly and produces the expected output before proceeding.

Step 3: View API Documentation and Manage API Access Tokens

Next, visit the app Integration section at the top. In this section, you can click View Details to view the API documentation provided by Anakin.ai, manage access tokens, and view the App ID.

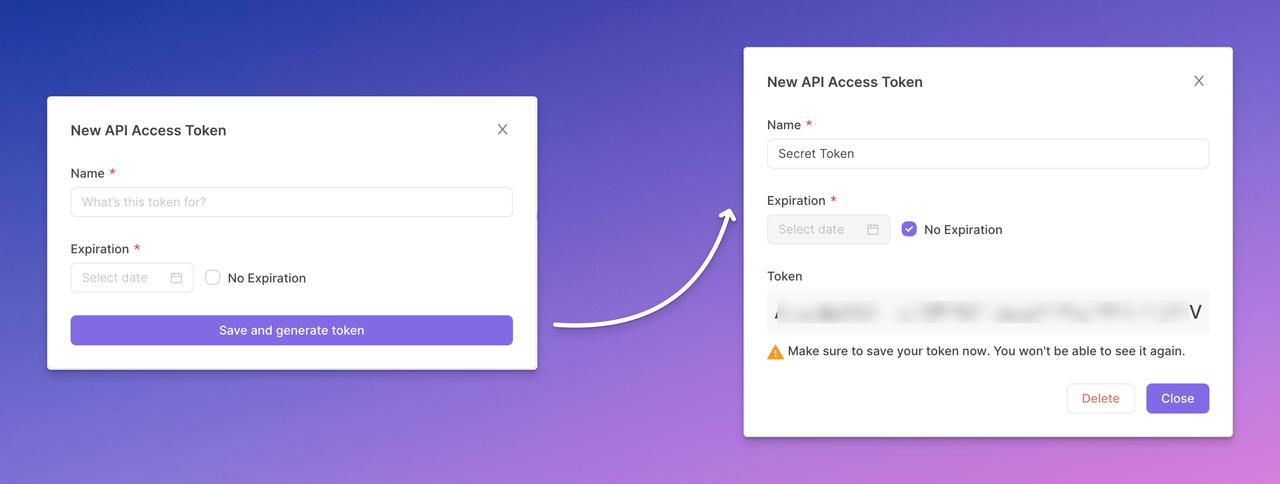

Step 4: Generate Your API Access Token

Click the Manage Token button, then select New Token to generate an API access token. Complete the token configuration, click Save and generate token, and finally copy the API access token.

Note: The generated API access token is displayed only once, so copy it and store it securely right away. As a best practice, never expose API keys in plaintext in frontend code or requests; keep them on your backend and make API calls from there. This helps prevent abuse of, or attacks on, your app.

You can create multiple API access tokens for an app and distribute them among different users or developers. API users can then access the AI capabilities the app developer provides, while the underlying prompt engineering and tool configuration stay encapsulated within the app.

Build a Quick App with Anakin AI

A quick app generates high-quality text content such as blog posts, translations, and other creative content. Calling the Run a Quick App API sends the user's input to the app and returns the generated text.

The model parameters and prompt template used to generate the text are determined by the input settings on the Anakin.ai App -> Manage -> Design page.

You can find the API documentation and request examples for the app under App -> Integration -> API List -> View Details.

Here's an example API call that generates a text completion:

```shell
curl --location --request POST 'https://api.anakin.ai/v1/quickapps/{{appId}}/runs' \
  --header 'Authorization: Bearer ANAKINAI_API_ACCESS_TOKEN' \
  --header 'X-Anakin-Api-Version: 2024-05-06' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "inputs": {
      "Product/Service": "Cloud Service",
      "Features": "Reliability and performance.",
      "Advantages": "Efficiency",
      "Framework": "Attention-Interest-Desire-Action"
    },
    "stream": true
  }'
```

Tip: Remember to replace the variable {{appId}} with the appId you want to request, and replace the ANAKINAI_API_ACCESS_TOKEN with the API Access Token you generated in Step 4.
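If you'd rather call the Quick App endpoint from Python than from curl, the standard library is enough. The sketch below mirrors the URL, headers, and JSON body of the curl example and only builds the request, so you can inspect it before sending. quick_app_request is a hypothetical helper name, and how you read the reply depends on whether you request streaming; check the API Reference for the response format.

```python
import json
import urllib.request

API_BASE = "https://api.anakin.ai/v1"
API_VERSION = "2024-05-06"  # value taken from the curl example


def quick_app_request(
    app_id: str, inputs: dict, token: str, stream: bool = True
) -> urllib.request.Request:
    """Build (but do not send) a POST request that runs a Quick App.

    Hypothetical helper mirroring the curl example's URL, headers, and body.
    """
    body = json.dumps({"inputs": inputs, "stream": stream}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/quickapps/{app_id}/runs",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "X-Anakin-Api-Version": API_VERSION,
            "Content-Type": "application/json",
        },
    )
```

To send it, pass the request to urllib.request.urlopen from your backend, reading the token from an environment variable (for example ANAKINAI_API_ACCESS_TOKEN, a name chosen for this sketch) rather than hard-coding it.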

Check more details about the Quick App API in the API Reference.

Build Your Own AI Chatbot App with Anakin AI

A Chatbot app lets you create chatbots that interact with users in a natural question-and-answer format. To start a conversation, call the Conversation with Chatbot API; to continue it, pass the parameter returned by that call in each subsequent request.

You can find the API documentation and sample requests for the app under App -> Integration -> API List -> View Details.

Here's an example API call that sends a message to the chatbot:

```shell
curl --location --request POST 'https://api.anakin.ai/v1/chatbots/{{appId}}/messages' \
  --header 'Authorization: Bearer ANAKINAI_API_ACCESS_TOKEN' \
  --header 'X-Anakin-Api-Version: 2024-05-06' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "content": "What'"'"'s your name? Are you the clever one?",
    "stream": true
  }'
```

Tip: Remember to replace the variable {{appId}} with the appId you want to request, and replace the ANAKINAI_API_ACCESS_TOKEN with the API Access Token you generated in Step 4.
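The same chatbot request can be assembled in Python with only the standard library. This sketch reproduces the curl example's endpoint, headers, and body and builds the request without sending it; chatbot_message_request is a hypothetical name. Continuing a conversation requires passing back the value the API returns, whose exact field name should be taken from the API Reference, so it is deliberately left out here.

```python
import json
import urllib.request

API_BASE = "https://api.anakin.ai/v1"
API_VERSION = "2024-05-06"  # value taken from the curl example


def chatbot_message_request(
    app_id: str, content: str, token: str, stream: bool = True
) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying one chatbot message.

    Hypothetical helper mirroring the curl example's URL, headers, and body.
    """
    payload = {"content": content, "stream": stream}
    return urllib.request.Request(
        url=f"{API_BASE}/chatbots/{app_id}/messages",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "X-Anakin-Api-Version": API_VERSION,
            "Content-Type": "application/json",
        },
    )
```

Building the request separately from sending it makes the payload easy to log or unit-test before any network call is made.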

Check more details about the Chatbot API in the API Reference.

Conclusion

The Gemini 1.5 Pro API is a powerful tool that enables developers to integrate Google's cutting-edge language model into their applications. By following the steps outlined in this guide, you can set up your development environment, generate API keys, and start building applications that leverage the capabilities of the Gemini 1.5 Pro model.

Whether you're working on text generation, question answering, image captioning, or another generative AI task, the Gemini 1.5 Pro API offers a flexible and powerful solution. Integrating with Anakin.ai's API service can further streamline development by bringing Anakin.ai's app features into your own applications.

As you continue to explore the Gemini 1.5 Pro API and Anakin.ai's API integration, be sure to consult the official documentation and stay up-to-date with the latest updates and features. The world of AI is rapidly evolving, and these tools will continue to unlock new possibilities for developers and organizations alike.
