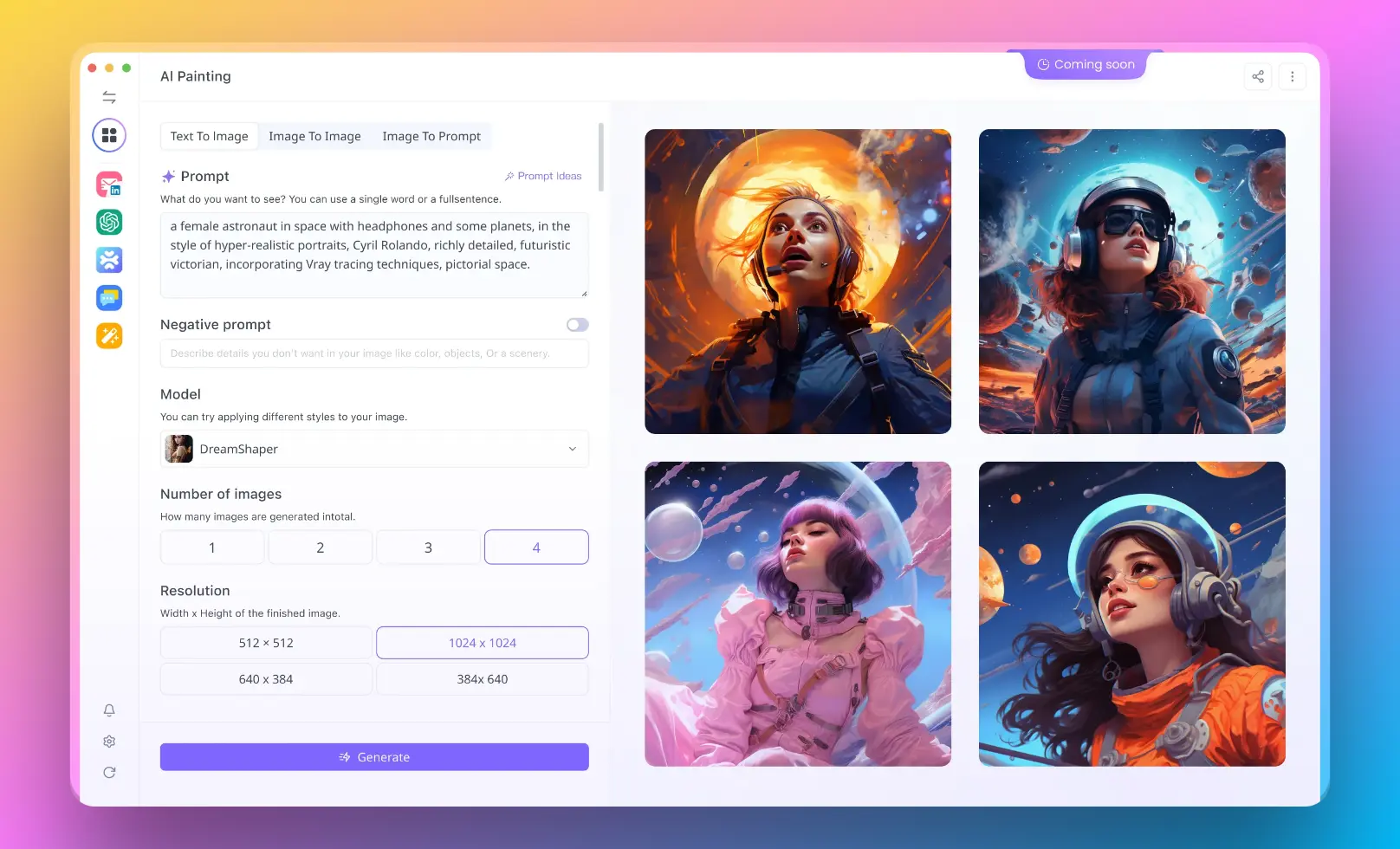

Introduction

Dolphin-MCP is an open-source implementation that extends Anthropic's Model Context Protocol (MCP) to work with various language models beyond Claude. This technical guide will walk you through setting up and utilizing Dolphin-MCP specifically with OpenAI's API, allowing you to leverage GPT models through the MCP architecture.

Technical Overview

Dolphin-MCP acts as a translation layer between the MCP specification and various LLM APIs. For OpenAI integration, it:

- Converts MCP protocol messages to OpenAI API formats

- Handles token mapping differences between models

- Manages conversation state and history

- Provides tool execution capabilities in a model-agnostic way
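To make the first bullet concrete, here is a minimal sketch of the kind of translation such a layer performs. It assumes the MCP tool listing shape (`name`, `description`, `inputSchema`) and the OpenAI Chat Completions `tools` format. The function name `mcp_tool_to_openai` is hypothetical and is not taken from Dolphin-MCP's code.

```python
from typing import Any, Dict


def mcp_tool_to_openai(tool: Dict[str, Any]) -> Dict[str, Any]:
    """Map an MCP tool description onto an OpenAI function-tool entry.

    Hypothetical helper for illustration; not part of Dolphin-MCP.
    """
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # MCP names the JSON Schema "inputSchema"; OpenAI names it "parameters".
            "parameters": tool.get(
                "inputSchema", {"type": "object", "properties": {}}
            ),
        },
    }


if __name__ == "__main__":
    example = {
        "name": "web_search",
        "description": "Search the internet for current information",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    }
    print(mcp_tool_to_openai(example))
```

The mapping is mostly a rename, which is why a thin adapter can expose the same tools to different providers.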

Prerequisites

Before beginning, ensure you have:

- Python 3.8+

- pip (package manager)

- OpenAI API key

- Git installed

- Basic understanding of LLMs and API concepts

Installation Steps

    # Clone the Dolphin-MCP repository
    git clone https://github.com/cognitivecomputations/dolphin-mcp.git
    cd dolphin-mcp

    # Create a virtual environment
    python -m venv venv
    source venv/bin/activate  # On Windows: venv\Scripts\activate

    # Install dependencies
    pip install -r requirements.txt

    # Install the package in development mode
    pip install -e .

Configuration

1. Setting Up Environment Variables

Create a .env file in your project root:

    OPENAI_API_KEY=sk-your-openai-api-key-here
    MCP_MODEL=gpt-4-0125-preview
    MCP_PROVIDER=openai
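If you want to check these variables before starting the client, a small standard-library sketch like the one below can help. It is not how Dolphin-MCP itself reads the file. The helper names `parse_env_text` and `load_settings` are hypothetical, and the fallback values simply mirror the example above.

```python
import os
from typing import Dict, Mapping, Optional


def parse_env_text(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def load_settings(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Collect the three variables from the example, failing fast on a missing key."""
    env = os.environ if env is None else env
    api_key = env.get("OPENAI_API_KEY", "")
    if not api_key:
        raise RuntimeError("OPENAI_API_KEY is not set")
    return {
        "api_key": api_key,
        # Defaults below are assumptions copied from the example .env file.
        "model": env.get("MCP_MODEL", "gpt-4-0125-preview"),
        "provider": env.get("MCP_PROVIDER", "openai"),
    }
```

Failing early on a missing key tends to give a clearer message than an authentication error from the API later on.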

2. Creating a Configuration File

Create a config.json file:

    {
      "provider": "openai",
      "model_settings": {
        "model": "gpt-4-0125-preview",
        "temperature": 0.7,
        "max_tokens": 2048
      },
      "api_config": {
        "api_key": "sk-your-openai-api-key-here",
        "base_url": "https://api.openai.com/v1",
        "timeout": 120
      }
    }
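A quick validation pass over this file can catch typos before any request is sent. The sketch below assumes the key layout shown in the example and treats an `OPENAI_API_KEY` environment variable as taking precedence over a key stored in the file. Both the `load_config` helper and that precedence rule are illustrative assumptions, not documented Dolphin-MCP behavior.

```python
import json
import os
from typing import Any, Dict, Mapping, Optional

# Sections and keys this sketch insists on (an assumption based on the example).
REQUIRED = {
    "model_settings": ("model",),
    "api_config": ("base_url",),
}


def load_config(text: str, env: Optional[Mapping[str, str]] = None) -> Dict[str, Any]:
    """Parse config JSON, check required keys, and apply an env override for the key."""
    env = os.environ if env is None else env
    cfg = json.loads(text)
    if "provider" not in cfg:
        raise ValueError("missing top-level key: provider")
    for section, keys in REQUIRED.items():
        if section not in cfg:
            raise ValueError(f"missing section: {section}")
        for key in keys:
            if key not in cfg[section]:
                raise ValueError(f"missing key: {section}.{key}")
    # The OpenAI API accepts temperatures from 0 to 2.
    temperature = cfg["model_settings"].get("temperature", 1.0)
    if not 0 <= temperature <= 2:
        raise ValueError("temperature must be between 0 and 2")
    # Prefer the environment so the secret does not have to live in the file.
    if env.get("OPENAI_API_KEY"):
        cfg["api_config"]["api_key"] = env["OPENAI_API_KEY"]
    return cfg
```

Keeping the secret in the environment also makes it easier to commit the config file without leaking credentials.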

Basic Usage Examples

Example 1: Simple Conversation

    from dolphin_mcp import DolphinMCP

    # Initialize the client
    mcp_client = DolphinMCP.from_config("./config.json")

    # Create a conversation
    conversation = mcp_client.create_conversation()

    # Add a system message
    conversation.add_system_message("You are a helpful AI assistant specialized in Python programming.")

    # Send a user message and get a response
    response = conversation.add_user_message("How do I implement a binary search tree in Python?")

    # Print the response
    print(response.content)

Example 2: Using Tools with OpenAI

    from dolphin_mcp import DolphinMCP
    from dolphin_mcp.tools import WebSearchTool, CodeExecutionTool

    # Initialize client with tools
    mcp_client = DolphinMCP.from_config("./config.json")

    # Register tools
    mcp_client.register_tool(WebSearchTool(api_key="your-search-api-key"))
    mcp_client.register_tool(CodeExecutionTool())

    # Create conversation with tools enabled
    conversation = mcp_client.create_conversation()

    # Add system instructions for tools
    conversation.add_system_message("""
    You are an AI assistant with access to the following tools:
    - web_search: Search the internet for current information
    - code_execution: Execute Python code safely in a sandbox

    Use these tools when appropriate to provide accurate and helpful responses.
    """)

    # User query requiring tools
    response = conversation.add_user_message(
        "What is the current weather in New York? Also, can you show me how to calculate the factorial of a number in Python?"
    )

    # The response will include tool usage automatically
    print(response.content)
    print("\nTool executions:")
    for tool_name, tool_result in response.tool_results.items():
        print(f"{tool_name}: {tool_result}")

Advanced Configuration

Using OpenAI-Compatible Endpoints

To use an alternative OpenAI-compatible endpoint (such as Azure OpenAI or a self-hosted model), point base_url at it in your config. The api_version and api_type fields apply to Azure only; omit them for other endpoints. JSON does not allow comments, so keep such notes out of the file itself:

    {
      "provider": "openai",
      "model_settings": {
        "model": "your-custom-model-deployment",
        "temperature": 0.7,
        "max_tokens": 2048
      },
      "api_config": {
        "api_key": "your-api-key",
        "base_url": "https://your-custom-endpoint.com/v1",
        "api_version": "2023-07-01-preview",
        "api_type": "azure"
      }
    }

Implementation of Custom Tools

Dolphin-MCP allows you to create custom tools for OpenAI models. Here's how to implement a custom calculator tool:

    import math

    from dolphin_mcp import DolphinMCP
    from dolphin_mcp.tools import BaseTool


    class CalculatorTool(BaseTool):
        name = "calculator"
        description = "Performs mathematical calculations"

        async def execute(self, expression: str):
            try:
                # Expose only a small set of math functions and constants
                safe_env = {
                    "sqrt": math.sqrt,
                    "sin": math.sin,
                    "cos": math.cos,
                    "tan": math.tan,
                    "pi": math.pi,
                    "e": math.e,
                }
                # Evaluate with builtins disabled. This limits what eval can
                # reach but is not a full sandbox, so avoid untrusted input.
                result = eval(expression, {"__builtins__": {}}, safe_env)
                return str(result)
            except Exception as e:
                return f"Error in calculation: {e}"

        @property
        def parameters(self):
            # JSON Schema describing the tool's single argument
            return {
                "type": "object",
                "properties": {
                    "expression": {
                        "type": "string",
                        "description": "The mathematical expression to evaluate",
                    }
                },
                "required": ["expression"],
            }


    # Usage example
    mcp_client = DolphinMCP.from_config("./config.json")
    mcp_client.register_tool(CalculatorTool())
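Disabling builtins narrows what eval can reach, but it is widely considered insufficient against hostile input. One alternative is to parse the expression and walk its syntax tree, allowing only whitelisted nodes. The sketch below shows that swapped-in technique using the standard `ast` module. `safe_eval` is a hypothetical helper and is not part of Dolphin-MCP. It does not bound exponent size, so very large powers can still be slow.

```python
import ast
import math
import operator

# Whitelisted operators, functions, and constants.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Mod: operator.mod,
    ast.Pow: operator.pow,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}
_FUNCS = {"sqrt": math.sqrt, "sin": math.sin, "cos": math.cos, "tan": math.tan}
_CONSTS = {"pi": math.pi, "e": math.e}


def safe_eval(expression: str) -> float:
    """Evaluate arithmetic by walking the AST; anything not whitelisted is rejected."""

    def visit(node):
        if isinstance(node, ast.Expression):
            return visit(node.body)
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](visit(node.left), visit(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](visit(node.operand))
        if isinstance(node, ast.Name) and node.id in _CONSTS:
            return _CONSTS[node.id]
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in _FUNCS
            and not node.keywords
        ):
            return _FUNCS[node.func.id](*(visit(arg) for arg in node.args))
        raise ValueError(f"unsupported expression element: {type(node).__name__}")

    return visit(ast.parse(expression, mode="eval"))
```

Inside a tool's execute method, a call such as `safe_eval(expression)` could replace the eval line, with ValueError and SyntaxError reported back as calculation errors.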

Handling Streaming Responses

For applications requiring real-time responses:

    import asyncio

    from dolphin_mcp import DolphinMCP


    async def stream_response():
        mcp_client = DolphinMCP.from_config("./config.json")
        conversation = mcp_client.create_conversation()

        # Set up the conversation
        conversation.add_system_message("You are a helpful AI assistant.")

        # Stream the response and handle each chunk type as it arrives
        async for chunk in conversation.add_user_message_streaming(
            "Explain quantum computing in simple terms."
        ):
            if chunk.type == "content":
                print(chunk.content, end="", flush=True)
            elif chunk.type == "tool_start":
                print(f"\n[Starting to use tool: {chunk.tool_name}]")
            elif chunk.type == "tool_result":
                print(f"\n[Tool result from {chunk.tool_name}]: {chunk.result}")
            elif chunk.type == "error":
                print(f"\nError: {chunk.error}")

        print("\nResponse complete.")


    # Run the async function
    asyncio.run(stream_response())

Error Handling

Implement robust error handling to manage API issues:

    from dolphin_mcp import DolphinMCP
    from dolphin_mcp.exceptions import MCPAPIError, MCPConfigError, MCPTimeoutError

    try:
        mcp_client = DolphinMCP.from_config("./config.json")
        conversation = mcp_client.create_conversation()
        response = conversation.add_user_message("Generate a complex response")
    except MCPTimeoutError:
        print("The request timed out. Check your network connection or increase the timeout value.")
    except MCPAPIError as e:
        print(f"API Error: {e.status_code} - {e.message}")
        if e.status_code == 429:
            print("Rate limit exceeded. Implement exponential backoff.")
    except MCPConfigError as e:
        print(f"Configuration Error: {e}")
    except Exception as e:
        # Catch-all for anything the specific handlers above did not cover
        print(f"Unexpected error: {e}")
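The rate-limit branch above suggests exponential backoff. A minimal, library-independent sketch of that idea follows, using the "full jitter" variant (sleep a random time up to a capped exponential delay). The helper `retry_with_backoff` and its parameters are hypothetical. In practice you would pass the exception types you consider retryable, such as a timeout or a 429 error.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    call: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `call` until it succeeds, sleeping with jittered exponential delays."""
    for attempt in range(max_attempts):
        try:
            return call()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # Out of attempts: surface the last error to the caller.
            # Delay cap doubles each attempt: base, 2*base, 4*base, ... up to max_delay.
            delay = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, delay))
    raise RuntimeError("max_attempts must be at least 1")
```

The `sleep` parameter exists mainly so the delays can be replaced in tests. The random jitter helps avoid many clients retrying at the same instant.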

Performance Optimization

For production environments, consider these optimizations:

    # Session reuse for connection pooling
    import asyncio

    import aiohttp
    from dolphin_mcp import DolphinMCP


    async def optimized_mcp_usage():
        # Create a shared session for connection pooling
        async with aiohttp.ClientSession() as session:
            mcp_client = DolphinMCP.from_config(
                "./config.json",
                session=session,
                request_timeout=60,
                connection_pool_size=10,
            )

            # Process multiple conversations efficiently
            tasks = []
            for i in range(5):
                conversation = mcp_client.create_conversation()
                conversation.add_system_message("You are a helpful assistant.")
                tasks.append(conversation.add_user_message_async(f"Question {i}: What is machine learning?"))

            # Gather all responses while the session is still open
            responses = await asyncio.gather(*tasks)
            for i, response in enumerate(responses):
                print(f"Response {i}: {response.content[:100]}...")


    # Run the async function
    asyncio.run(optimized_mcp_usage())
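Gathering every request at once can trip rate limits when the batch grows. One common way to cap in-flight requests is an `asyncio.Semaphore`, sketched below under stated assumptions. `gather_bounded` is a hypothetical helper, and `fake_ask` is only a stand-in for a real model call.

```python
import asyncio
from typing import Awaitable, Callable, List, Sequence


async def gather_bounded(
    prompts: Sequence[str],
    ask: Callable[[str], Awaitable[str]],
    limit: int = 3,
) -> List[str]:
    """Run `ask` for every prompt with at most `limit` calls in flight."""
    semaphore = asyncio.Semaphore(limit)

    async def worker(prompt: str) -> str:
        async with semaphore:  # Wait here once `limit` calls are active.
            return await ask(prompt)

    # gather preserves input order in its result list.
    return await asyncio.gather(*(worker(p) for p in prompts))


async def fake_ask(prompt: str) -> str:
    """Stand-in for a real model call (assumption for the demo)."""
    await asyncio.sleep(0.01)
    return f"answer to: {prompt}"


if __name__ == "__main__":
    questions = [f"Question {i}" for i in range(5)]
    print(asyncio.run(gather_bounded(questions, fake_ask, limit=2)))
```

A limit close to your account's concurrent request allowance is a reasonable starting point, tuned from there.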

Integration with Web Applications

Example Flask integration:

    from flask import Flask, request, jsonify
    from dolphin_mcp import DolphinMCP

    app = Flask(__name__)
    mcp_client = DolphinMCP.from_config("./config.json")

    # Defined at module level so it also exists when the app is started
    # by a WSGI server or "flask run" rather than as a script
    active_conversations = {}


    @app.route("/chat", methods=["POST"])
    def chat():
        data = request.get_json(silent=True) or {}
        conversation_id = data.get("conversation_id")
        message = data.get("message")
        if not message:
            return jsonify({"error": "message is required"}), 400

        # Retrieve or create conversation
        if conversation_id and conversation_id in active_conversations:
            conversation = active_conversations[conversation_id]
        else:
            conversation = mcp_client.create_conversation()
            conversation_id = conversation.id
            active_conversations[conversation_id] = conversation
            conversation.add_system_message("You are a helpful AI assistant.")

        # Process the message
        response = conversation.add_user_message(message)

        return jsonify({
            "conversation_id": conversation_id,
            "response": response.content,
            "tool_results": response.tool_results,
        })


    if __name__ == "__main__":
        app.run(debug=True)  # Debug mode is for local development only
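The active_conversations dictionary in the example grows without bound and is shared across request threads. A small bounded store with a lock is one way to address both points. The sketch below is an illustrative assumption, not part of Dolphin-MCP or Flask: `ConversationStore` is a hypothetical class that evicts the least recently used entry once `max_size` is exceeded.

```python
import threading
import uuid
from collections import OrderedDict
from typing import Callable, Generic, Optional, Tuple, TypeVar

C = TypeVar("C")


class ConversationStore(Generic[C]):
    """Thread-safe, size-bounded map from conversation id to conversation."""

    def __init__(self, factory: Callable[[], C], max_size: int = 1000) -> None:
        self._factory = factory  # Called to build a new conversation object.
        self._max_size = max_size
        self._items: "OrderedDict[str, C]" = OrderedDict()
        self._lock = threading.Lock()

    def get_or_create(self, conversation_id: Optional[str] = None) -> Tuple[str, C]:
        with self._lock:
            if conversation_id is not None and conversation_id in self._items:
                # Mark as most recently used.
                self._items.move_to_end(conversation_id)
                return conversation_id, self._items[conversation_id]
            new_id = uuid.uuid4().hex
            conversation = self._factory()
            self._items[new_id] = conversation
            if len(self._items) > self._max_size:
                # Evict the least recently used conversation.
                self._items.popitem(last=False)
            return new_id, conversation

    def __len__(self) -> int:
        with self._lock:
            return len(self._items)
```

In the route handler, a call like `store.get_or_create(data.get("conversation_id"))` could stand in for the dictionary lookup. Note that this only covers a single process; multiple workers would need shared storage.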

Troubleshooting Common Issues

| Issue | Solution |
|---|---|
| "Invalid API key" error | Verify your OpenAI API key is correct and has sufficient permissions |
| Rate limiting | Implement exponential backoff and request throttling |
| Timeout errors | Increase timeout in configuration or check network connection |
| Model not found | Verify model name exists in OpenAI's available models |
| Token limit exceeded | Break down requests into smaller chunks or use streaming |
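For the last row, breaking a long input into smaller pieces can be sketched as follows. This version budgets by characters rather than tokens, which is only a rough proxy; an exact count would need a tokenizer. `split_into_chunks` and the default `max_chars` value are hypothetical choices for illustration.

```python
from typing import List


def split_into_chunks(text: str, max_chars: int = 8000) -> List[str]:
    """Greedily pack paragraphs into chunks of at most max_chars characters."""
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    chunks: List[str] = []
    current = ""
    for paragraph in text.split("\n\n"):
        if not paragraph.strip():
            continue  # Skip empty paragraphs.
        # Hard-split any single paragraph that is itself over the budget.
        pieces = [
            paragraph[i:i + max_chars] for i in range(0, len(paragraph), max_chars)
        ]
        for piece in pieces:
            candidate = f"{current}\n\n{piece}" if current else piece
            if len(candidate) <= max_chars:
                current = candidate
            else:
                chunks.append(current)
                current = piece
    if current:
        chunks.append(current)
    return chunks
```

Each chunk can then be sent as its own request, with the partial answers combined afterwards.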

Conclusion

Dolphin-MCP provides a flexible, open-source way to use OpenAI models with MCP. It reduces vendor lock-in while keeping a consistent interface across different LLM providers. By following the steps in this guide, you can use GPT models through the standardized MCP architecture.

For similar integrations with other LLMs, see philschmid.de. Tools such as mcpadapt can extend Dolphin-MCP's capabilities further.