Introduction to Cursor and Local LLMs

Cursor is an AI-powered code editor built on Visual Studio Code. Although it is proprietary and its built-in AI features use cloud-hosted models by default, it can be configured to work with a large language model (LLM) running locally on your machine. This means you can leverage the capabilities of modern language models while relying less on cloud services and third-party servers.

One of the key advantages of using Cursor with a local LLM is privacy and data security. By keeping your data and computations on your local machine, you can ensure that sensitive information never leaves your controlled environment. This is particularly important for applications that deal with confidential or proprietary data.

Additionally, running LLMs locally can be more cost-effective in the long run, as you don't have to pay for cloud computing resources or API usage fees. Once you have the necessary hardware and software set up, you can run the LLM as many times as you need without incurring additional costs.

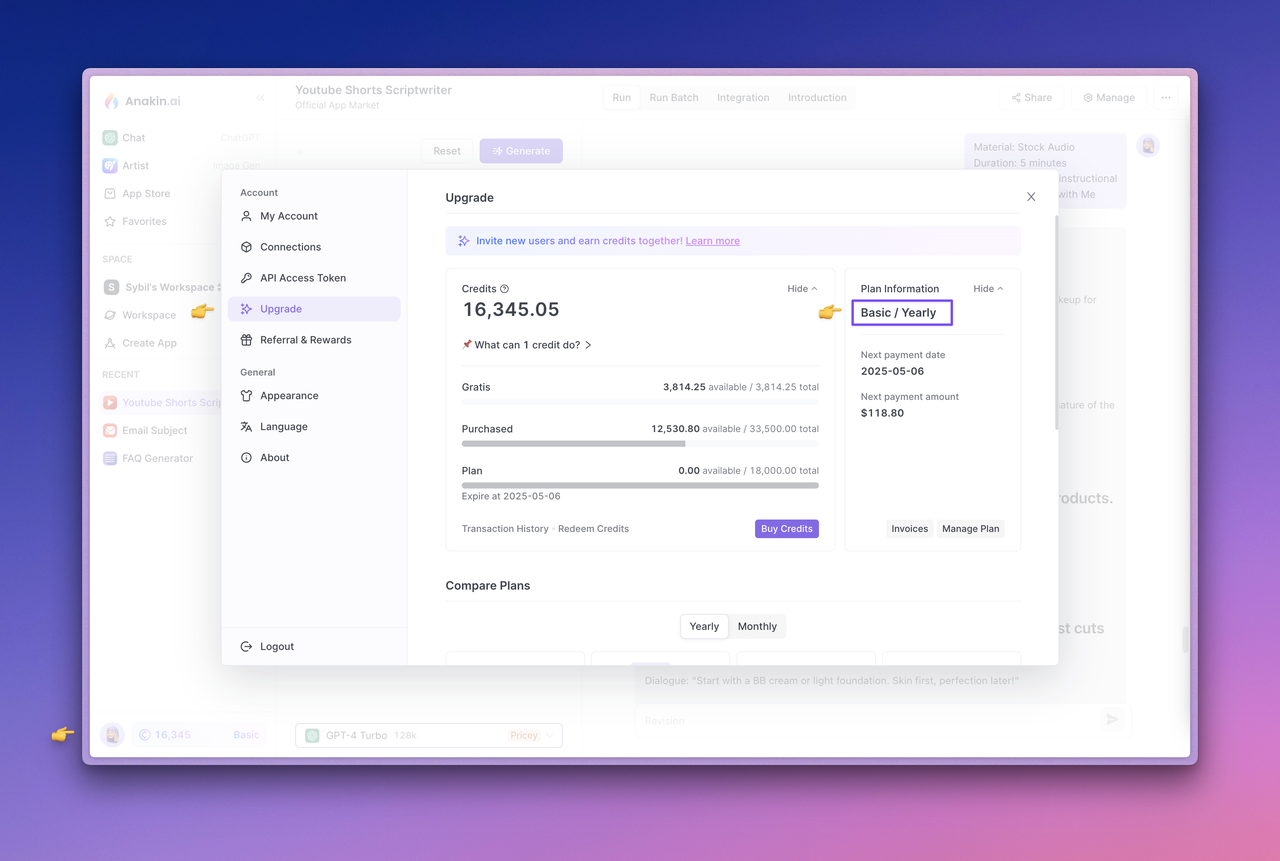

No worries! Anakin AI is your All-in-one AI aggregator platform where you can easily access all LLM and Image Generation Models in One Place!

Get started with Anakin AI's API Integration Now!

Why Use Cursor IDE with a Local LLM?

As AI-powered coding assistants gain traction, concerns around data privacy and intellectual property protection have become increasingly prevalent. Many organizations and developers are hesitant to share their codebase with cloud-based services due to the potential risk of exposing sensitive information or proprietary algorithms. Local LLMs address this concern by allowing users to run the language model on their local machines, ensuring that their code never leaves the controlled environment.

Moreover, local LLMs provide the flexibility to fine-tune the model on specific codebases or domains. This tailored approach can lead to more accurate and relevant code suggestions, as the model becomes attuned to the unique coding styles, patterns, and terminologies used within a particular project or organization.

How to Integrate Local LLMs into Cursor

Because Cursor is built on Visual Studio Code, it supports VS Code extensions, and its model settings accept a custom OpenAI-compatible endpoint. Integrating a local LLM therefore comes down to putting the model behind an interface Cursor can talk to: most commonly a local server that speaks the OpenAI API format, or alternatively a custom extension or module of your own that calls the model directly.
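As a rough illustration of what talking to a local model involves, here is a minimal, hypothetical Python client. It assumes the model is served behind an OpenAI-compatible chat completions endpoint (Ollama, for example, serves one at `http://localhost:11434/v1`). The class name `LocalLLMClient`, the default model name `codellama`, and the timeout are illustrative assumptions, not part of Cursor.

```python
import json
import urllib.request


class LocalLLMClient:
    """Hypothetical thin client for a local OpenAI-compatible chat completions server."""

    def __init__(self, base_url="http://localhost:11434/v1", model="codellama", timeout=60.0):
        # Assumed defaults: Ollama's OpenAI-compatible base URL and a model
        # name you have already downloaded locally.
        self.base_url = base_url.rstrip("/")
        self.model = model
        self.timeout = timeout

    def build_request(self, prompt):
        """Build (but do not send) a POST request to the /chat/completions route."""
        payload = {
            "model": self.model,
            "messages": [{"role": "user", "content": prompt}],
        }
        return urllib.request.Request(
            f"{self.base_url}/chat/completions",
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )

    @staticmethod
    def parse_response(body):
        """Extract the assistant's reply text from a chat completions response body."""
        data = json.loads(body)
        return data["choices"][0]["message"]["content"]

    def complete(self, prompt):
        """Send the prompt to the local server and return the reply text."""
        with urllib.request.urlopen(self.build_request(prompt), timeout=self.timeout) as resp:
            return self.parse_response(resp.read().decode("utf-8"))
```

With the server running and the model downloaded, `LocalLLMClient().complete("Explain Python's match statement")` would return the model's reply. Keeping request building and response parsing separate from the network call makes both easy to check without a running server.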

Supporting local LLMs in Cursor would unlock a wide range of use cases and benefits for developers and organizations:

Enhanced Privacy and Security: By keeping the codebase and language model local, organizations can ensure that sensitive information never leaves their controlled environment, mitigating the risk of data breaches or unauthorized access.

Customized Code Assistance: Fine-tuning local LLMs on specific codebases or domains can lead to more accurate and relevant code suggestions, tailored to the unique coding styles and patterns of a project or organization.

Offline Capabilities: Local LLMs enable developers to leverage AI-powered coding assistance even in offline or disconnected environments, ensuring uninterrupted productivity.

Regulatory Compliance: Certain industries or organizations may be subject to strict data privacy regulations, making local LLMs a necessity for adopting AI-powered coding tools.

Cost Optimization: While cloud-based LLMs can be expensive, especially for large-scale usage, local LLMs may offer a more cost-effective solution, particularly for organizations with significant computational resources.

How to Use Cursor with a Local LLM

Setting Up Cursor with a Local LLM

To get started with Cursor and a local LLM, follow these steps:

Install Cursor: Visit the Cursor website (https://cursor.com) and download the latest version of the Cursor IDE for your operating system.

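Obtain an LLM: You'll need an open-weight model that you can run locally, served behind an API that Cursor can talk to, typically one compatible with OpenAI's API format. Options include open-source models like GPT-J and GPT-NeoX as well as newer code-focused models such as Code Llama; tools like Ollama or llama.cpp's built-in server can host many such models behind an OpenAI-compatible endpoint. Proprietary models from providers like Anthropic or OpenAI are available only through their cloud APIs and cannot be run locally.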

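Configure Cursor: Once the model is being served, open Cursor's settings and find the section for models (menu names vary between versions). There you can add the model name your server exposes, enable the option to override the OpenAI base URL, and enter the address of your local server (for example, `http://localhost:11434/v1` for Ollama), along with an API key if your server requires one. Depending on the Cursor version, requests to a custom base URL may be relayed through Cursor's own servers, in which case a `localhost` address is not reachable and the endpoint has to be exposed, for example through a tunnel; check Cursor's documentation for your version.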
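Before entering the base URL and model name in Cursor, it can help to confirm that the server answers and actually serves the model you plan to name. The sketch below assumes your server implements the OpenAI-style `GET /models` listing under its base URL (recent Ollama and llama.cpp server versions do, as far as we know); the function names are our own. Note that some servers list names with a tag (Ollama, for instance, may show `codellama:latest`), so use the exact ID the listing returns.

```python
import json
import urllib.request


def parse_model_ids(body):
    """Return the model IDs listed in an OpenAI-style /models response body."""
    data = json.loads(body)
    return [entry["id"] for entry in data.get("data", [])]


def check_local_model(base_url, model, timeout=3.0):
    """Return (ok, message) describing whether `model` is served at `base_url`."""
    url = f"{base_url.rstrip('/')}/models"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            ids = parse_model_ids(resp.read().decode("utf-8"))
    except OSError as exc:
        # urllib.error.URLError and HTTPError are OSError subclasses, so this
        # covers refused connections, timeouts, and HTTP error statuses.
        return False, f"could not reach {url}: {exc}"
    if model not in ids:
        return False, f"{model!r} is not served; available: {ids}"
    return True, f"{model!r} is available at {base_url}"
```

For example, `check_local_model("http://localhost:11434/v1", "codellama:latest")` returns `(True, ...)` only if that exact ID appears in the server's listing.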

Using Cursor with a Local LLM

With Cursor and your local LLM set up, you can start leveraging AI capabilities within your coding workflow. Here are some of the key features and use cases:

Code Completion and Suggestions

One of the primary benefits of using an AI-powered code editor like Cursor is its ability to provide intelligent code completion and suggestions. As you type, Cursor will analyze your code and offer relevant suggestions based on the context, helping you write code faster and more accurately.

```python
# Example: Cursor providing code suggestions
import math


def calculate_area(shape, dimensions):
    if shape == "rectangle":
        length, width = dimensions
        area = length * width
        return area
    elif shape == "circle":
        radius = dimensions[0]
        area = math.pi * radius ** 2
        return area
    # Cursor can suggest adding an "else" clause or handling other shapes
```

Natural Language Code Generation

Cursor's "Cmd-K" feature allows you to write code using natural language instructions. This powerful capability enables you to update entire classes or functions by simply providing a prompt describing the desired changes.

```python
# Example prompt: "Refactor the calculate_area function to use a match statement instead of if-elif"
# Cursor generates the refactored code:
# (Note: match statements require Python 3.10 or later.)
import math


def calculate_area(shape, dimensions):
    match shape:
        case "rectangle":
            length, width = dimensions
            area = length * width
        case "circle":
            radius = dimensions[0]
            area = math.pi * radius ** 2
        case _:
            raise ValueError(f"Invalid shape: {shape}")
    return area
```

Code Understanding and Exploration

With a local LLM, Cursor can help you better understand and explore your codebase. You can ask questions about specific files, functions, or classes, and Cursor will provide relevant information based on the code analysis.

Example query: "What does the calculate_area function do?"

Cursor's response:

The `calculate_area` function takes two arguments: `shape` (a string representing the shape) and `dimensions` (a list or tuple containing the dimensions of the shape). It calculates the area based on the provided shape and dimensions.

For a rectangle, it expects `dimensions` to be a list or tuple with two elements (length and width), and it calculates the area by multiplying them.

For a circle, it expects `dimensions` to be a list or tuple with one element (radius), and it calculates the area using the formula `pi * radius^2`.

If an invalid shape is provided, it raises a `ValueError` with an appropriate error message.
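Cursor assembles the context it sends to the model on its own. If you want to ask your local model a similar question directly, outside Cursor, one simple approach is to paste the code into the prompt. The sketch below builds an OpenAI-style chat request body for that; the function names and the system prompt are hypothetical choices of ours, and you would POST the result to your server's `/chat/completions` route.

```python
from pathlib import Path

FENCE = "`" * 3  # built at runtime so the prompt can wrap the code in a fence


def build_explain_payload(question, source, model, language="python"):
    """Build an OpenAI-style chat request body asking `question` about `source`."""
    return {
        "model": model,
        "messages": [
            {"role": "system",
             "content": "You are a coding assistant. Answer questions about the code provided."},
            {"role": "user",
             "content": f"{question}\n\n{FENCE}{language}\n{source}\n{FENCE}"},
        ],
    }


def build_explain_payload_for_file(path, question, model):
    """Read a source file and build the request body for a question about it."""
    source = Path(path).read_text(encoding="utf-8")
    return build_explain_payload(question, source, model)
```

For instance, `build_explain_payload_for_file("shapes.py", "What does the calculate_area function do?", "codellama")` would produce a request asking about a (hypothetical) `shapes.py` file. Sending whole files keeps the sketch simple, but large files can exceed a local model's context window.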

Integration with Anakin AI's API

While Cursor's local LLM capabilities are powerful, you can further enhance your AI-powered coding experience by integrating with Anakin AI's API. Anakin AI offers a comprehensive API service that allows you to seamlessly integrate AI capabilities into your applications.

Here's how you can leverage Anakin AI's API with Cursor:

Sign up for Anakin AI: Visit the Anakin AI website (https://anakin.ai) and create an account.

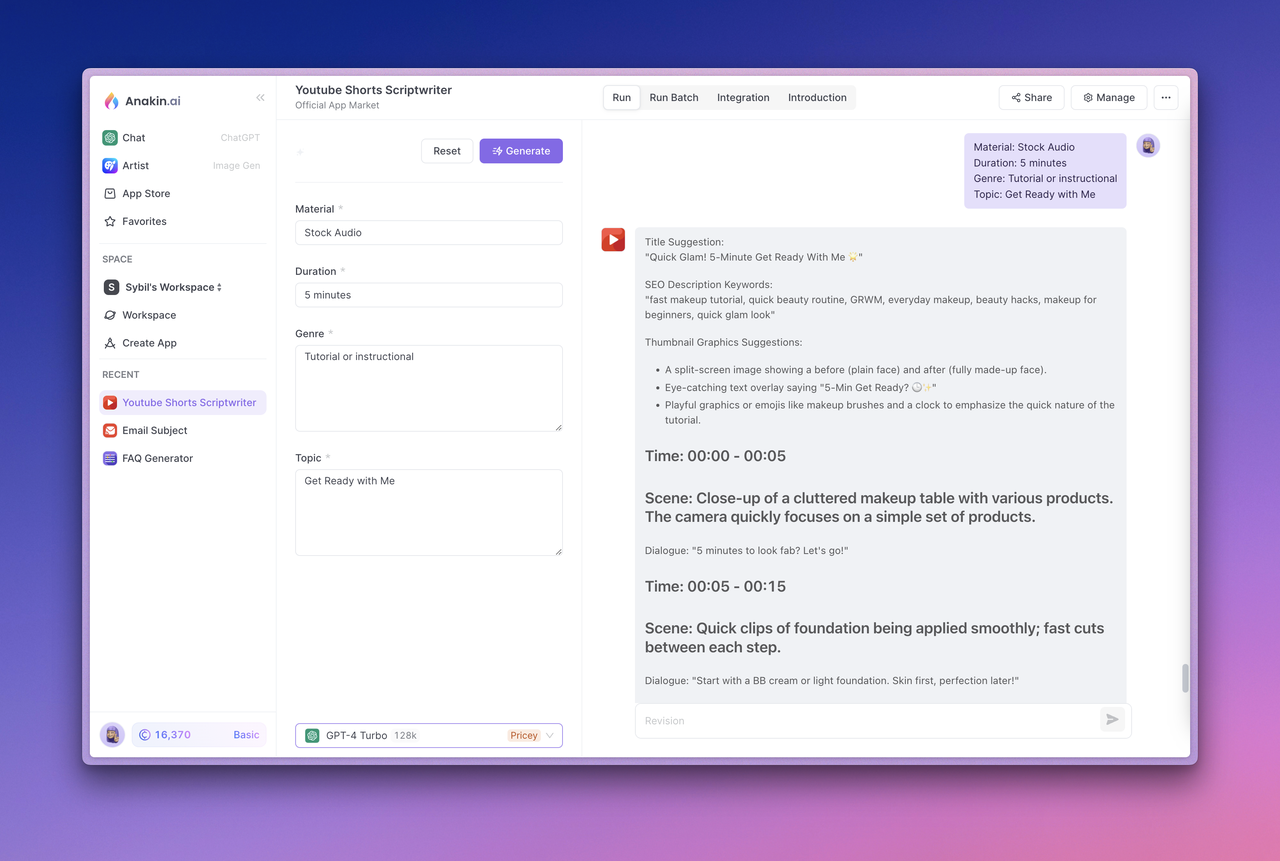

Create an App: In the Anakin AI dashboard, create a new app tailored to your needs. You can choose from various app types, such as Quick Apps for text generation or Chatbot Apps for conversational AI.

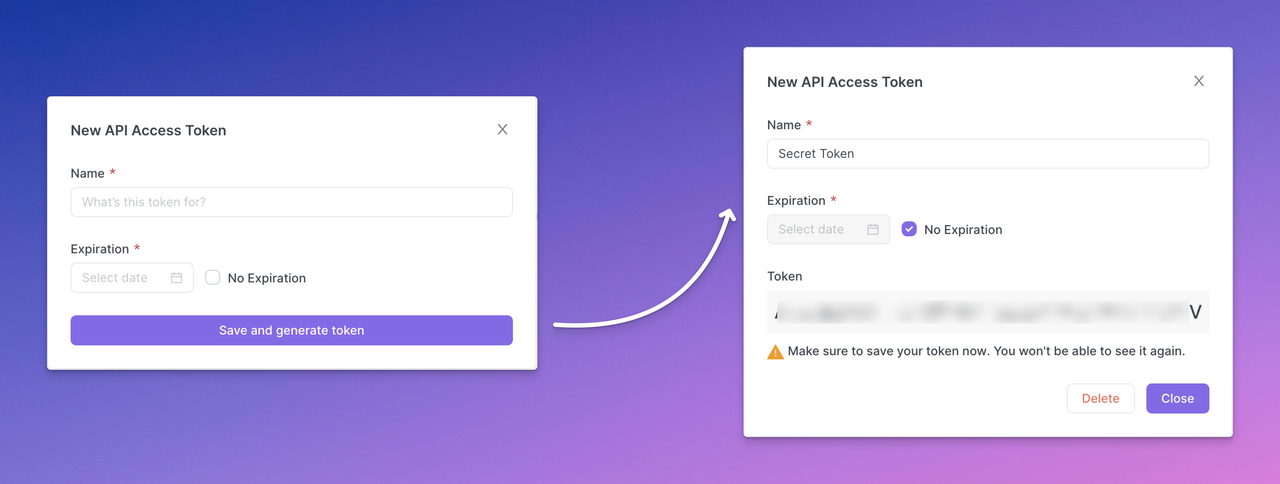

Generate API Access Token: Follow the steps provided by Anakin AI to generate an API access token for your app.

Integrate with Cursor: Cursor has no dedicated Anakin AI setting that we are aware of, so the practical route is to call Anakin AI's API, using your access token, from scripts in the project you have open in Cursor, as in the sample code below. This lets you use Anakin AI's models and capabilities alongside your local LLM.
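Whichever way you call the API, it is safer not to hard-code the access token in source files that an editor or AI assistant may read. Here is a minimal sketch, assuming you store the token in an environment variable; the variable name `ANAKIN_AI_API_TOKEN` and the function name are our own choices, not something Anakin AI requires.

```python
import os


def load_anakin_token(env_var="ANAKIN_AI_API_TOKEN"):
    """Read the Anakin AI access token from an environment variable (hypothetical name)."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        # Fail early with a clear message instead of sending an empty bearer token.
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Anakin AI API access token."
        )
    return token
```

In the sample below, you could then replace the hard-coded constant with `ANAKIN_AI_API_TOKEN = load_anakin_token()`.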

With the Anakin AI integration, you can benefit from additional features like text generation, translation, and conversational AI, further enhancing your coding productivity and capabilities.

Sample Code: Using Anakin AI's API with Cursor

Here's an example of how you can use Anakin AI's API within Cursor to generate code documentation:

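```python
import requests

# Replace with your Anakin AI API access token
ANAKIN_AI_API_TOKEN = "your_api_token_here"
# Replace with your Anakin AI app ID
ANAKIN_AI_APP_ID = "your_app_id_here"


def generate_code_documentation(code_file):
    """Send the contents of an open file to an Anakin AI Quick App and return its output."""
    url = f"https://api.anakin.ai/v1/quickapps/{ANAKIN_AI_APP_ID}/runs"
    headers = {
        "Authorization": f"Bearer {ANAKIN_AI_API_TOKEN}",
        "X-Anakin-Api-Version": "2024-05-06",
        "Content-Type": "application/json",
    }
    data = {
        # Assumption: the input key ("Code") must match an input variable defined in your app.
        "inputs": {
            "Code": code_file.read(),
        },
        "stream": True,
    }
    # stream=True lets requests read the body incrementally instead of buffering it up front.
    response = requests.post(url, headers=headers, json=data, stream=True, timeout=120)
    response.raise_for_status()

    # Collect raw bytes and decode once, so multi-byte UTF-8 characters split
    # across chunk boundaries are not corrupted.
    chunks = []
    for chunk in response.iter_content(chunk_size=None):
        if chunk:
            chunks.append(chunk)
    return b"".join(chunks).decode("utf-8")


# Example usage
with open("my_code.py", "r", encoding="utf-8") as code_file:
    documentation = generate_code_documentation(code_file)

print(documentation)
```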

In this example, we define a generate_code_documentation function that takes an open code file as input. It sends a POST request to the Anakin AI API with the file's content as input, reads the streamed response, and returns the generated documentation.
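Because the request sets `"stream": True`, concatenating raw chunks returns whatever framing the API uses for streaming, not necessarily clean text. If the response is sent as server-sent events, as is common for streaming LLM APIs (an assumption here; check Anakin AI's API reference for the actual format and payload fields), you would want to extract only the `data:` fields. The helper below is a generic sketch of that parsing, following the event-stream rules: lines starting with `:` are comments, multiple `data:` lines in one event are joined with newlines, a blank line ends an event, and an unterminated event at the end of the stream is discarded.

```python
def iter_sse_data(lines):
    """Yield the data payload of each complete server-sent event in `lines`."""
    buffer = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line dispatches the event if it carried any data lines.
            if buffer:
                yield "\n".join(buffer)
                buffer = []
            continue
        if line.startswith(":"):
            continue  # comment / keep-alive line
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # a single leading space after the colon is dropped
        if field == "data":
            buffer.append(value)
        # Other fields (event, id, retry) are ignored in this sketch.
    # Data left in the buffer without a terminating blank line is discarded.
```

With `requests`, you could feed it `(line.decode("utf-8") for line in response.iter_lines())` and, if the API sends JSON events, decode each payload with `json.loads`.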

By integrating Anakin AI's API with Cursor, you can leverage the power of AI to automate tasks like code documentation, refactoring, and more, further enhancing your productivity and coding experience.

Conclusion

Combining Cursor with a local LLM, and with Anakin AI's API where sending data to a cloud service is acceptable, opens up a world of possibilities for developers, enabling them to harness the power of AI while keeping more control over their codebase and data privacy. With features like code completion, natural language code generation, code understanding, and API integration, Cursor can make developers considerably more productive and efficient.

Whether you're working on a small project or a large-scale enterprise application, leveraging Cursor with a local LLM and Anakin AI's API can revolutionize your coding workflow, streamline development processes, and unlock new levels of productivity and innovation.
