Google has made a significant move in the world of artificial intelligence with the release of Gemma 2 2B, a compact yet powerful open-source language model. This new addition to the Gemma family represents a strategic step towards democratizing AI technology while maintaining Google's commitment to responsible and efficient AI development.

The Power of Gemma 2 2B

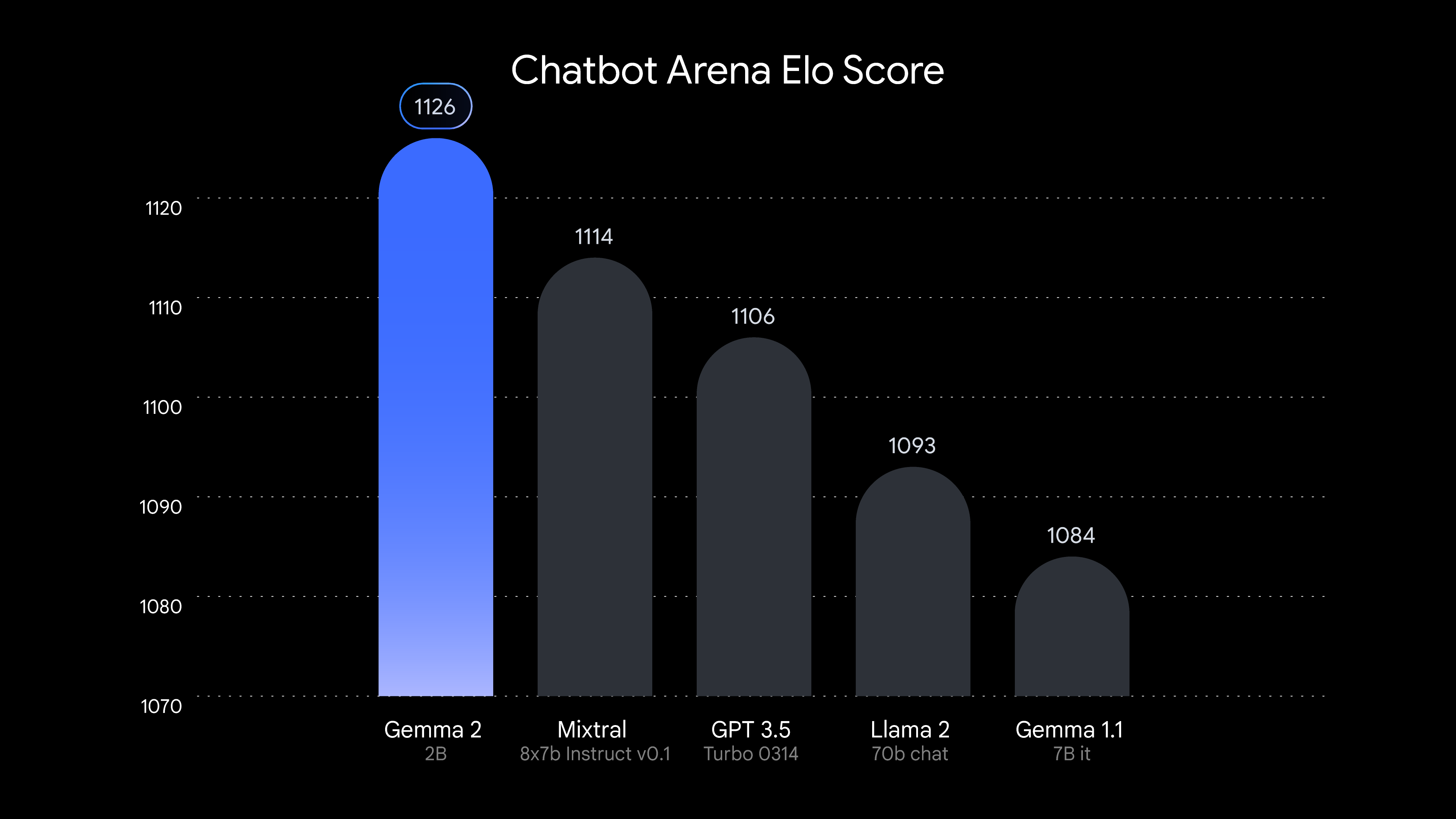

Despite its relatively small size of roughly 2.6 billion parameters, Gemma 2 2B packs a surprising punch. It outperforms many larger models, including some GPT-3.5 variants, in conversational tasks on the LMSYS Chatbot Arena leaderboard. This result showcases the model's efficiency and the effectiveness of Google's training techniques, including knowledge distillation from a larger teacher model.

Technical Specifications of Gemma 2 2B

Gemma 2 2B is a text-to-text, decoder-only large language model designed for a variety of natural language processing tasks. Here are some key technical details:

- Architecture: Decoder-only transformer

- Parameters: Approximately 2.6 billion (the "2B" in the name is a rounded label)

- Context Length: 8,192 tokens

- Vocabulary Size: 256,000 tokens

- Training Data: Approximately 2 trillion tokens

- Framework Support: Available for popular frameworks such as PyTorch, JAX, and Hugging Face Transformers

Gemma 2 2B excels in various text generation tasks, including:

- Question answering

- Summarization

- Creative writing

- Code generation

- Reasoning

Its performance is particularly impressive given its compact size, making it suitable for deployment on resource-constrained devices like laptops, desktops, and edge computing platforms.
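To make the resource-constrained claim concrete, here is a back-of-the-envelope sketch of how much memory the weights alone occupy at different precisions. It assumes roughly 2.6 billion parameters and deliberately ignores activations, the KV cache, and framework overhead, so real-world usage will be somewhat higher.

```python
# Back-of-the-envelope estimate of weight memory for a ~2.6B-parameter model.
# Assumption: 2.6e9 parameters; activations, KV cache, and runtime overhead are ignored.

BYTES_PER_PARAM = {
    "float32": 4.0,
    "bfloat16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Return the memory needed to store the weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    params = 2.6e9
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype:>9}: {weight_memory_gib(params, dtype):5.2f} GiB")
```

Under these assumptions the weights come to roughly 9.7 GiB in float32, 4.8 GiB in bfloat16, and 1.2 GiB at 4 bits, which helps explain why a half-precision or quantized copy can run on many laptops.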

How Google Optimized Gemma 2 2B

Google has optimized Gemma 2 2B for various hardware configurations:

- NVIDIA GPUs: Optimized with the NVIDIA TensorRT-LLM library, enabling efficient inference across a wide range of NVIDIA GPUs

- Google TPUs: Leverages the power of Tensor Processing Units for accelerated training and inference

- CPU Compatibility: Can run on standard CPUs, making it accessible to a broader range of developers

Gemma 2 2B: Open Source and Better

By releasing Gemma 2 2B as an open-source model, Google is fostering innovation and collaboration within the AI community. This move allows researchers and developers to:

- Inspect and modify the model architecture

- Fine-tune the model for specific applications

- Contribute to the model's improvement

- Develop new techniques based on Gemma's architecture

Gemma 2 vs. Gemini: The Open-Source Race

While Gemma 2 2B is open-source, it's important to note its relationship to Google's closed-source Gemini models. Both Gemma and Gemini share technical foundations, but serve different purposes in Google's AI strategy:

Gemma 2 (Open-Source)

- Designed for widespread adoption and experimentation

- Focuses on efficiency and accessibility

- Encourages community-driven innovation

Gemini (Closed-Source)

- Google's flagship AI model for commercial applications

- Offers maximum performance and advanced capabilities

- Tightly controlled for specific use cases

The release of Gemma 2 2B signals Google's commitment to advancing open-source AI while maintaining a competitive edge with Gemini. This dual approach allows Google to:

- Foster an ecosystem of AI developers and researchers

- Gather insights from the open-source community

- Potentially incorporate advancements back into the Gemini models

As Gemma 2 evolves, it may narrow the gap with Gemini in certain areas, creating a fascinating dynamic between open and closed-source AI development at Google.

Responsible AI Development: Built-in with Gemma 2 2B

Google has prioritized responsible AI practices in the development of Gemma 2 2B:

Data Preprocessing

- Rigorous filtering of training data to remove sensitive and harmful content

- Automated techniques to exclude personal information
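Google has not published its filtering code, so the snippet below is only a toy illustration of what rule-based personal-information redaction can look like. The two patterns (email addresses and US-style phone numbers) and the `scrub_pii` helper are hypothetical; production pipelines rely on far more thorough detectors.

```python
import re

# Hypothetical, deliberately simple patterns; real PII detection is far more thorough.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def scrub_pii(text: str) -> str:
    """Replace every match of each PII pattern with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


sample = "Contact jane.doe@example.com or call 555-123-4567 for details."
print(scrub_pii(sample))
# → Contact [EMAIL] or call [PHONE] for details.
```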

Safety Measures

- Supervised fine-tuning and reinforcement learning from human feedback (RLHF) for the instruction-tuned variant

- Alignment with responsible behaviors and ethical guidelines

Evaluation and Testing

- Manual red-teaming to identify potential vulnerabilities

- Automated adversarial testing to assess model robustness

- Comprehensive evaluation of model capabilities to mitigate risks

ShieldGemma: Enhanced Safety Features

Alongside Gemma 2 2B, Google has introduced ShieldGemma, a suite of safety classifiers built on the Gemma 2 architecture. These classifiers are designed to detect and filter harmful content in both model inputs and outputs, addressing key areas of concern:

- Hate speech

- Harassment

- Sexually explicit content

- Dangerous content

ShieldGemma is available in multiple sizes, offering flexibility for different use cases:

- 2B version for real-time classification

- Larger 9B and 27B variants for higher accuracy in less time-sensitive applications
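The input-and-output filtering pattern can be sketched as a gate around a text generator. The ShieldGemma model card describes scoring a policy check by applying a softmax to the logits of the "Yes" and "No" tokens; in this sketch those logits come from a hypothetical `score_logits` stub rather than a real ShieldGemma call, and the 0.5 threshold is an arbitrary assumption.

```python
import math
from typing import Callable, Tuple

# Hypothetical signature: given text, return (yes_logit, no_logit) from a safety classifier.
LogitFn = Callable[[str], Tuple[float, float]]


def violation_probability(yes_logit: float, no_logit: float) -> float:
    """Two-way softmax: probability assigned to 'Yes' (policy violated)."""
    m = max(yes_logit, no_logit)  # subtract the max for numerical stability
    e_yes = math.exp(yes_logit - m)
    e_no = math.exp(no_logit - m)
    return e_yes / (e_yes + e_no)


def guarded_generate(prompt: str, generate: Callable[[str], str],
                     score_logits: LogitFn, threshold: float = 0.5) -> str:
    """Screen the prompt, generate a response, then screen the response."""
    if violation_probability(*score_logits(prompt)) > threshold:
        return "[input blocked]"
    response = generate(prompt)
    if violation_probability(*score_logits(response)) > threshold:
        return "[output blocked]"
    return response


# Toy stand-ins for the generator and the classifier (not real models).
def fake_generate(prompt: str) -> str:
    return f"Echo: {prompt}"


def fake_scores(text: str) -> Tuple[float, float]:
    return (4.0, -4.0) if "forbidden" in text else (-4.0, 4.0)


print(guarded_generate("Tell me a joke", fake_generate, fake_scores))
print(guarded_generate("forbidden request", fake_generate, fake_scores))
```

Checking both directions matters: a benign prompt can still elicit a harmful completion, which only the second check catches.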

Gemma Scope: Unlocking Model Interpretability

To promote transparency and aid in responsible AI development, Google has released Gemma Scope, a set of tools designed to examine the inner workings of Gemma 2 models. This suite includes over 400 sparse autoencoders (SAEs) that allow researchers to:

- Visualize and analyze model activations

- Identify specific patterns and behaviors within the model

- Gain insights into decision-making processes

Gemma Scope represents a significant step towards more interpretable and accountable AI systems, potentially leading to improved model design and safer AI applications.
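Gemma Scope's autoencoders use a JumpReLU activation: each latent feature fires only when its pre-activation exceeds a learned per-feature threshold, which keeps most features at zero. The pure-Python sketch below runs the encode/decode pass on toy 2-dimensional activations with 3 features; the weights, biases, and thresholds are made-up illustration values, not real Gemma Scope parameters, which are far larger and loaded from the released checkpoints.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # row-major, indexed as m[row][col]


def vec_mat(x: Vector, m: Matrix) -> Vector:
    """Row vector times matrix: returns x @ m, where m has len(x) rows."""
    return [sum(x[i] * m[i][j] for i in range(len(x))) for j in range(len(m[0]))]


def jumprelu_encode(x: Vector, w_enc: Matrix, b_enc: Vector, threshold: Vector) -> Vector:
    """JumpReLU: keep relu(pre-activation) only where it exceeds the feature's threshold."""
    pre = [p + b for p, b in zip(vec_mat(x, w_enc), b_enc)]
    return [max(p, 0.0) if p > t else 0.0 for p, t in zip(pre, threshold)]


def decode(f: Vector, w_dec: Matrix, b_dec: Vector) -> Vector:
    """Linear decoder: reconstruct the activation vector from feature activations."""
    return [r + b for r, b in zip(vec_mat(f, w_dec), b_dec)]


# Toy parameters: 2-dim activations, 3 latent features (illustrative values only).
W_ENC = [[1.0, 0.0, 0.5],
         [0.0, 1.0, 0.5]]
B_ENC = [0.0, 0.0, 0.0]
THRESHOLD = [0.5, 0.5, 0.9]
W_DEC = [[1.0, 0.0],
         [0.0, 1.0],
         [0.5, 0.5]]
B_DEC = [0.0, 0.0]

x = [0.8, 0.2]
features = jumprelu_encode(x, W_ENC, B_ENC, THRESHOLD)
print("features:", features)
print("reconstruction:", decode(features, W_DEC, B_DEC))
```

Only one of the three features fires for this input, and the reconstruction keeps just the part of `x` that feature explains. In interpretability work, the interesting step is then asking what each active feature corresponds to in the model's behavior.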

Development and Deployment

Gemma 2 2B offers a range of options for developers looking to integrate the model into their projects:

Frameworks and Tools

- Multi-framework support: Keras 3.0, PyTorch, JAX, and Hugging Face Transformers

- Google AI Studio for quick experimentation

- Vertex AI on Google Cloud for scalable deployment and MLOps

Fine-tuning and Customization

- Supports fine-tuning on custom datasets

- Adaptable for specific applications like summarization or retrieval-augmented generation (RAG)
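When fine-tuning the instruction-tuned checkpoint, training examples are usually rendered in Gemma's turn-based prompt format, which wraps each turn in `<start_of_turn>` and `<end_of_turn>` markers with `user` and `model` roles. The helper below is a minimal sketch that renders prompt/response pairs as plain strings; the function name and the "Context/Question" layout for RAG-style examples are hypothetical choices, and the leading `<bos>` token is left to the tokenizer. In practice, `tokenizer.apply_chat_template` in Hugging Face Transformers can produce this format from the model's bundled template.

```python
from typing import List, Optional


def format_gemma_example(prompt: str, response: str,
                         context: Optional[List[str]] = None) -> str:
    """Render one training example in Gemma's turn format.

    If context passages are given (e.g. retrieved documents for RAG), they are
    prepended to the user turn. That layout is an illustrative assumption.
    """
    if context:
        passages = "\n".join(f"- {c}" for c in context)
        prompt = f"Context:\n{passages}\n\nQuestion: {prompt}"
    return (
        f"<start_of_turn>user\n{prompt}<end_of_turn>\n"
        f"<start_of_turn>model\n{response}<end_of_turn>\n"
    )


print(format_gemma_example("Summarize: Gemma 2 2B is a small open model.",
                           "Gemma 2 2B is a compact open model."))
print(format_gemma_example("What is the context length?", "8,192 tokens.",
                           context=["Gemma 2 2B supports 8,192 tokens of context."]))
```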

Deployment Options

- Local deployment on laptops and desktops

- Edge computing devices

- Cloud-based solutions through Google Cloud or other providers

Code Examples

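Here's a simple example of how to use Gemma 2 2B with the Hugging Face Transformers library. The `google/gemma-2-2b` checkpoint is gated, so you need to accept Google's usage license on Hugging Face and log in (for example with `huggingface-cli login`) before downloading it: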

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# Load the tokenizer and model (device_map="auto" requires the accelerate package)
model_id = "google/gemma-2-2b"
tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(
    model_id,
    device_map="auto",
    torch_dtype=torch.bfloat16,  # half the memory of float32
)

# Tokenize the prompt; the result holds input_ids and attention_mask
input_text = "Explain the concept of artificial intelligence in simple terms."
inputs = tokenizer(input_text, return_tensors="pt").to(model.device)

# Generate up to 100 new tokens and decode them back to text
outputs = model.generate(**inputs, max_new_tokens=100)
response = tokenizer.decode(outputs[0], skip_special_tokens=True)
print(response)
```

For more advanced use cases, developers can apply techniques like quantization to cut memory usage further:

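```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig

# Configure 4-bit quantization (requires the bitsandbytes package and, typically, a CUDA GPU)
quantization_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_compute_dtype=torch.bfloat16,  # dtype used for computation on dequantized weights
)

# Load the tokenizer and the quantized model
tokenizer = AutoTokenizer.from_pretrained("google/gemma-2-2b")
model = AutoModelForCausalLM.from_pretrained(
    "google/gemma-2-2b",
    quantization_config=quantization_config,
    device_map="auto",
)

# Use the model as before: tokenize a prompt, then call model.generate(...)
```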
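To give a feel for what 4-bit loading does to the weights, here is a simplified blockwise absmax quantizer in pure Python: each block of weights is scaled by its largest absolute value and rounded to a signed integer in [-7, 7], so it fits in 4 bits. This is a swapped-in illustration, not the exact bitsandbytes scheme (its 4-bit modes use the FP4 or NF4 data types), and the block size of 4 is an arbitrary choice for readability.

```python
from typing import List, Tuple


def quantize_block(block: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-7, 7] using the block's absolute maximum as the scale."""
    scale = max(abs(v) for v in block) / 7 or 1.0  # fall back to 1.0 for all-zero blocks
    return [round(v / scale) for v in block], scale


def dequantize_block(q: List[int], scale: float) -> List[float]:
    """Recover approximate floats from the 4-bit codes and the stored scale."""
    return [v * scale for v in q]


def quantize(weights: List[float], block_size: int = 4) -> List[Tuple[List[int], float]]:
    """Quantize a flat weight list block by block, keeping one scale per block."""
    return [quantize_block(weights[i:i + block_size])
            for i in range(0, len(weights), block_size)]


weights = [0.12, -0.70, 0.33, 0.05, 2.10, -1.40, 0.00, 0.70]
blocks = quantize(weights)
restored = [v for q, s in blocks for v in dequantize_block(q, s)]
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("codes:", [q for q, _ in blocks])
print(f"max abs error: {max_error:.4f}")
```

Storing small integer codes plus one scale per block is what shrinks the footprint to roughly a quarter of bfloat16, at the cost of rounding errors bounded by half of each block's scale.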

Future Developments

As Gemma 2 2B gains traction in the AI community, we can expect to see:

- Community-driven improvements and optimizations

- New applications leveraging the model's efficiency

- Integration with other open-source AI tools and frameworks

- Potential expansion to support additional languages and tasks

Google has hinted at plans to introduce new variants of Gemma for diverse applications, suggesting an ongoing commitment to the open-source AI ecosystem.

Conclusion

Gemma 2 2B represents a significant milestone in Google's AI strategy, bridging the gap between cutting-edge closed-source models like Gemini and the broader AI development community. By offering a powerful, efficient, and responsibly designed open-source model, Google is empowering developers and researchers to push the boundaries of AI technology while maintaining a focus on safety and ethical considerations.

As the AI landscape continues to evolve, the interplay between Gemma and Gemini will likely drive innovation on both fronts, ultimately benefiting the entire field of artificial intelligence. With its impressive performance, accessibility, and robust safety features, Gemma 2 2B is poised to become a valuable tool for AI practitioners across various domains, from academic research to commercial applications.