Fireworks AI has announced the release of Firefunction-v2, an open-weights function calling model. Firefunction-v2 builds on recent advances in large language models to deliver a model optimized for real-world scenarios, including multi-turn conversation, instruction following, and parallel function calling.

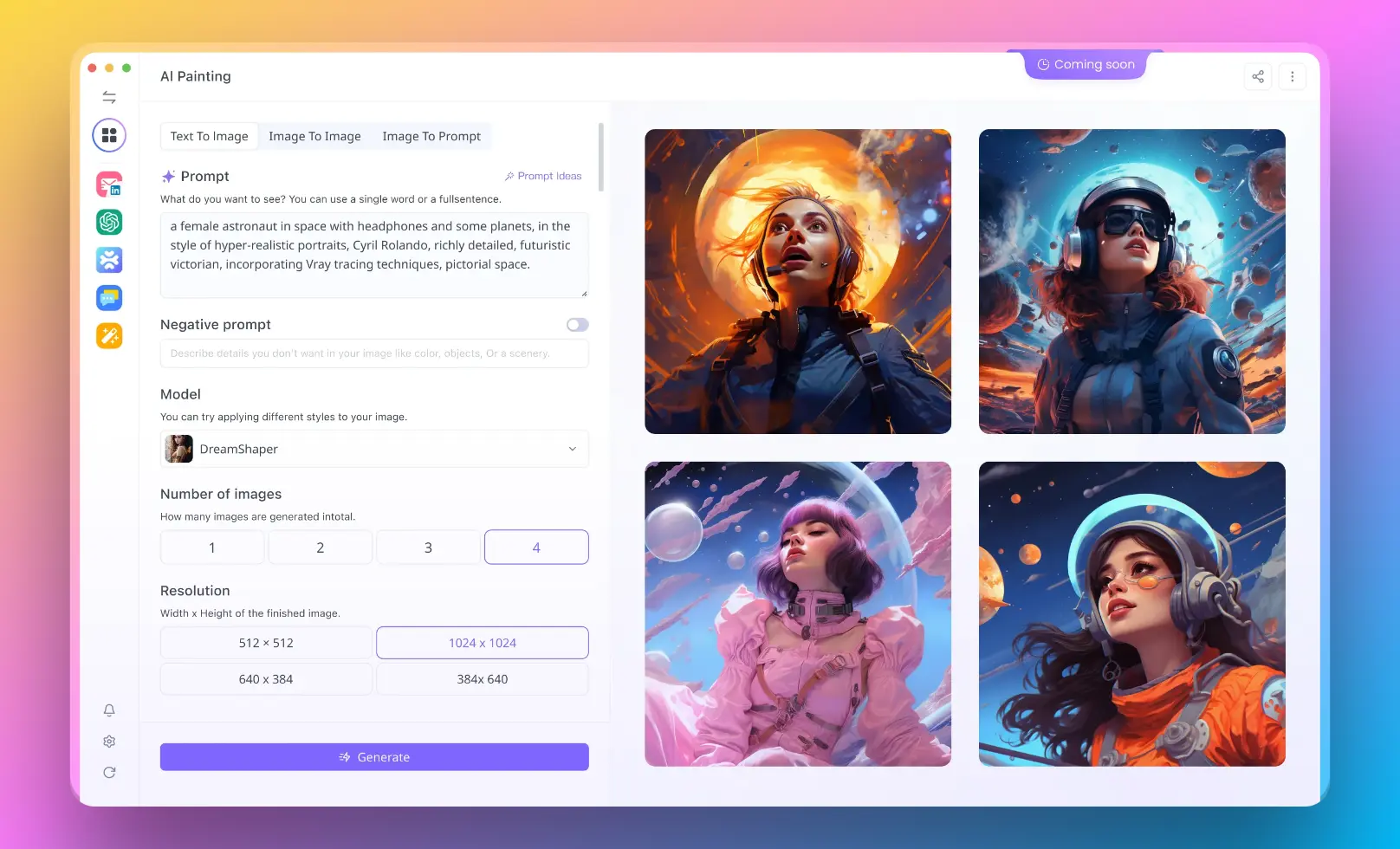

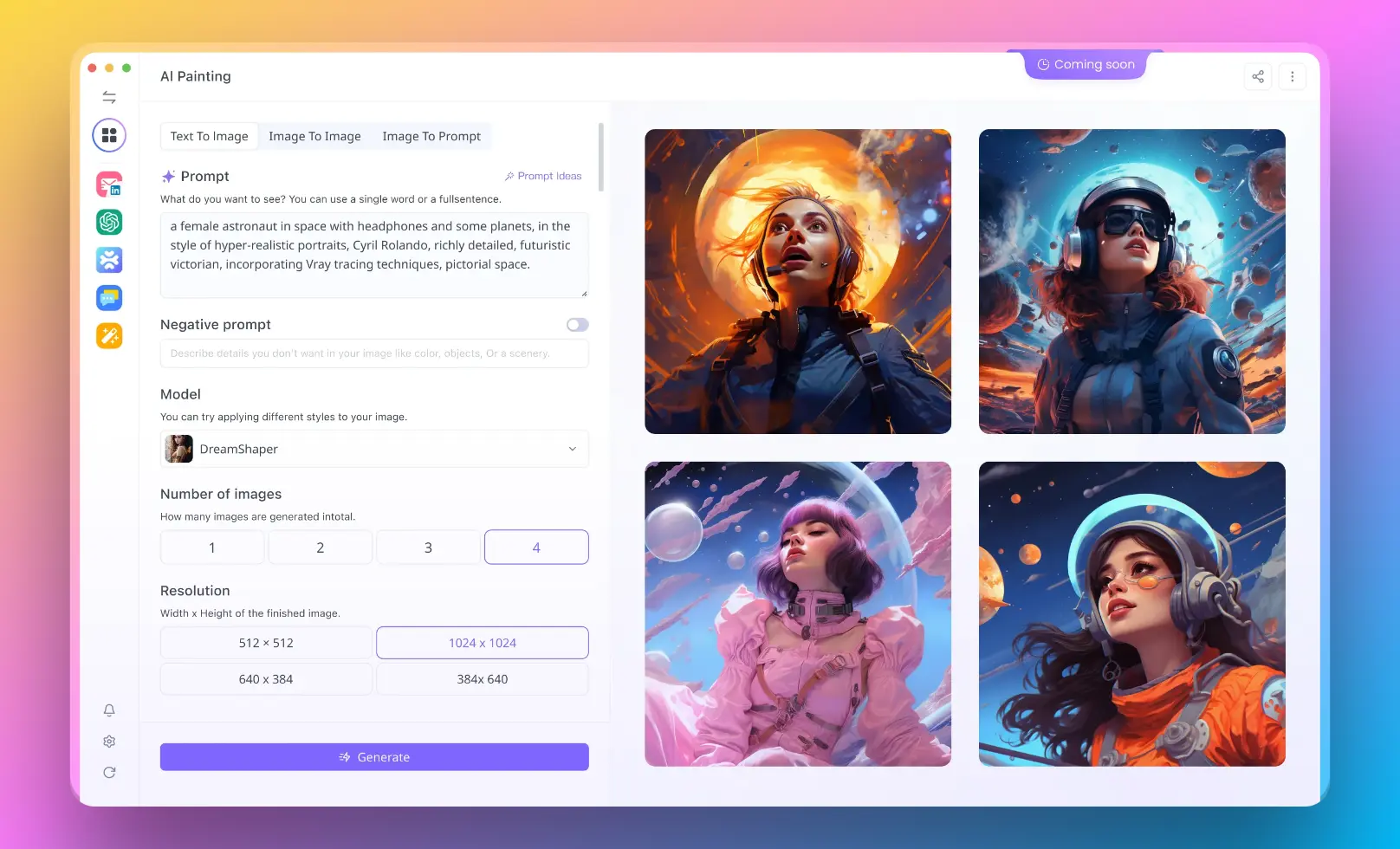

Then, you cannot miss out on Anakin AI!

Anakin AI is an all-in-one platform for workflow automation: create powerful AI apps with an easy-to-use no-code app builder, using Llama 3, Claude, GPT-4, uncensored LLMs, Stable Diffusion, and more.

Build your dream AI app in minutes, not weeks, with Anakin AI!

Key Highlights

- Llama 3-like chat capabilities: Firefunction-v2 preserves the impressive chat and generalized capabilities of its base model, Llama 3 70B.

- Enhanced function calling: Firefunction-v2 handles complex function calls more reliably and follows instructions about function calls better than its predecessor.

- Faster and more cost-effective than GPT-4o: Function calling quality on par with GPT-4o, with faster responses and lower costs. Firefunction-v2 generates about 180 tokens/sec at $0.90 per 1M tokens, compared to GPT-4o's roughly 69 tokens/sec and $15 per 1M output tokens.

| Feature | Firefunction-v1 | Firefunction-v2 | GPT-4o |
|---|---|---|---|
| Single-turn function call (routing) | ✔️ | ✔️ | ✔️ |
| Multi-turn conversations | ⚠️ (limited) | ✔️ | ✔️ |
| Parallel function calling | ❌ | ✔️ | ✔️ |
| Instruction following | ⚠️ | ✔️ | ✔️ |
| General conversation (with optional function calling) | ⚠️ | ✔️ | ✔️ |
| Cost per 1M tokens | $0.50 | $0.90 | $5 (input), $15 (output) |
| Output speed | Up to 200 tokens/sec | ~180 tokens/sec | ~69 tokens/sec |
| Average benchmark score (MT-Bench, Gorilla, Nexus) | 0.49 | 0.81 | 0.80 |
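
To put the pricing and speed rows in concrete terms, here is a rough back-of-the-envelope estimator. It assumes Firefunction-v2's single $0.90 price applies to both input and output tokens, uses the GPT-4o figures from the table, and ignores time to first token and network overhead; the monthly volume and reply length are hypothetical.

```python
# Prices in USD per 1M tokens and output speeds in tokens/sec, from the table above.
# Assumption: Firefunction-v2's single $0.90 price covers both input and output tokens.
PRICING = {
    "firefunction-v2": {"input": 0.9, "output": 0.9, "tokens_per_sec": 180},
    "gpt-4o": {"input": 5.0, "output": 15.0, "tokens_per_sec": 69},
}


def estimate(model: str, input_tokens: int, output_tokens: int) -> tuple[float, float]:
    """Return (cost in USD, seconds to stream the output) for one workload."""
    p = PRICING[model]
    cost = (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000
    seconds = output_tokens / p["tokens_per_sec"]  # ignores time to first token
    return cost, seconds


if __name__ == "__main__":
    for name in PRICING:
        monthly_cost, _ = estimate(name, 10_000_000, 2_000_000)  # hypothetical monthly volume
        _, reply_secs = estimate(name, 0, 500)                   # one hypothetical 500-token reply
        print(f"{name}: ${monthly_cost:,.2f}/month, {reply_secs:.1f}s per 500-token reply")
```

For this hypothetical workload the sketch gives $10.80 versus $80.00 per month, and about 2.8 s versus 7.2 s to stream a 500-token reply.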

The State of Function Calling: One Year in Retrospect

It's been nearly a year since OpenAI introduced function calling as a feature, enabling language models to output structured text to call external APIs. While function calling has immense potential, productionizing this capability has been challenging due to the tradeoffs between open-source and closed-source models.
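
To make "structured text to call external APIs" concrete, here is a minimal sketch of the application side of function calling. It assumes the OpenAI-style shape in which the model returns a function `name` plus `arguments` as a JSON-encoded string; `get_weather` and its stubbed result are hypothetical.

```python
import json


# A local function the application exposes to the model (hypothetical example).
def get_weather(city: str, unit: str = "celsius") -> dict:
    # Stub: a real app would call a weather API here.
    return {"city": city, "temperature": 21, "unit": unit}


# Maps the function names the model may emit to the Python callables that implement them.
REGISTRY = {"get_weather": get_weather}


def dispatch(tool_call: dict):
    """Execute one model-emitted call of the form {"name": ..., "arguments": "<JSON string>"}."""
    func = REGISTRY[tool_call["name"]]
    args = json.loads(tool_call["arguments"])  # arguments arrive as a JSON-encoded string
    return func(**args)


# The kind of structured output a function calling model produces (hand-written here).
call = {"name": "get_weather", "arguments": '{"city": "Paris"}'}
print(dispatch(call))  # {'city': 'Paris', 'temperature': 21, 'unit': 'celsius'}
```

The model only produces the structured call; the application stays responsible for validating it and actually running the function.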

Traditional open-source function calling models tend to be overly specialized, focusing narrowly on function calling benchmarks at the expense of generalizability and general reasoning abilities. On the other hand, closed function calling models like GPT-4 and Claude offer strong performance in non-function calling tasks but come with high latencies and costs, limiting their production usability.

The Making of Firefunction-v2

Fireworks AI took a different approach to creating Firefunction-v2, guided by user feedback that real-world function calling models must also excel at non-function-calling tasks. Instead of overfitting to function calling scenarios, Fireworks added function calling capabilities to Llama 3 70B while preserving its instruction following abilities.

The training process involved:

- Selecting llama3-70b-instruct as the base model for its excellent real-world performance.

- Curating a dataset composed of both function calling and regular conversation data.

- Carefully monitoring the training process to prevent degradation of base model capabilities.

- Preserving the original 8k context length of llama3-70b-instruct.
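
Fireworks does not describe its data pipeline in detail, so the following is only a hypothetical sketch of two of the ideas listed above: pooling function calling and regular conversation examples into one training set, and keeping every example within the 8k context window. The word-count token proxy, the sample data, and the `source` field are all assumptions for illustration.

```python
import random

# Assumption: 8,192 tokens, i.e. the 8k context window of llama3-70b-instruct.
MAX_CONTEXT_TOKENS = 8192


def approx_tokens(example: dict) -> int:
    # Crude proxy: count whitespace-separated words. A real pipeline would use the model's tokenizer.
    return sum(len((m.get("content") or "").split()) for m in example["messages"])


def build_mixture(fc_examples: list[dict], chat_examples: list[dict], seed: int = 0) -> list[dict]:
    """Pool function calling and regular chat examples, drop over-length ones, and shuffle."""
    pooled = [ex for ex in fc_examples + chat_examples if approx_tokens(ex) <= MAX_CONTEXT_TOKENS]
    random.Random(seed).shuffle(pooled)  # mix sources instead of training on one source at a time
    return pooled


# Tiny hypothetical samples; "source" is an illustrative bookkeeping field.
fc = [{"source": "function_calling", "messages": [
    {"role": "user", "content": "What's the weather in Paris?"},
    {"role": "assistant", "content": None, "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}}]}]}]
chat = [
    {"source": "chat", "messages": [{"role": "user", "content": "Write a haiku about rain."}]},
    {"source": "chat", "messages": [{"role": "user", "content": "word " * 9000}]},  # too long
]

mixed = build_mixture(fc, chat)
print(len(mixed), sorted(ex["source"] for ex in mixed))  # 2 ['chat', 'function_calling']
```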

Evaluation and Benchmark Performance

Fireworks evaluated Firefunction-v2 on a mix of publicly available datasets: the Gorilla and Nexus benchmarks for function calling, and MT-Bench for multi-turn instruction following. Firefunction-v2 achieves the highest average score across this mix, outperforming Llama 3 70B Instruct on every function calling benchmark while retaining most of its multi-turn instruction following ability (0.84 vs. 0.89 on MT-Bench).

| Benchmark | Firefunction-v1 | Firefunction-v2 | Llama 3 70B Instruct | GPT-4o |
|---|---|---|---|---|
| Gorilla simple | 0.91 | 0.94 | 0.925 | 0.88 |
| Gorilla multiple_function | 0.92 | 0.91 | 0.86 | 0.91 |
| Gorilla parallel_function | 0 | 0.89 | 0.86 | 0.89 |
| Gorilla parallel_multiple_function | 0 | 0.79 | 0.62 | 0.72 |
| Nexus parallel | 0.38 | 0.51 | 0.30 | 0.47 |
| MT-Bench (multi-turn instruction following) | 0.73 | 0.84 | 0.89 | 0.93 |
| Average | 0.49 | 0.81 | 0.74 | 0.80 |
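
The Average row is consistent with an unweighted mean of the six benchmark scores; that interpretation is an assumption, but it can be checked directly against the table:

```python
# Per-benchmark scores copied from the table above, in row order:
# Gorilla simple, multiple_function, parallel_function, parallel_multiple_function,
# Nexus parallel, MT-Bench.
SCORES = {
    "Firefunction-v1":      [0.91, 0.92, 0.00, 0.00, 0.38, 0.73],
    "Firefunction-v2":      [0.94, 0.91, 0.89, 0.79, 0.51, 0.84],
    "Llama 3 70B Instruct": [0.925, 0.86, 0.86, 0.62, 0.30, 0.89],
    "GPT-4o":               [0.88, 0.91, 0.89, 0.72, 0.47, 0.93],
}


def average(scores: list[float]) -> float:
    """Unweighted mean: every benchmark counts equally."""
    return sum(scores) / len(scores)


for model, scores in SCORES.items():
    print(f"{model}: {average(scores):.2f}")
```

Each printed value matches the reported average to two decimals (0.49, 0.81, 0.74, 0.80). Because the weighting is equal, five function calling columns outweigh the single MT-Bench column, which is how Firefunction-v2 edges out GPT-4o overall despite a lower MT-Bench score.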

Highlighted Capabilities

To showcase Firefunction-v2's real-world capabilities, Fireworks open-sourced a fully functional chatbot demo that users can customize with their own functions. The demo app highlights several of the model's improved capabilities:

Parallel Function Calling

Firefunction-v2 handles more complex function calling tasks, working reliably with up to 30 function specs, whereas Firefunction-v1's performance degrades beyond roughly 5 functions. Parallel function calling, where the model emits several function calls in response to a single query (for example, fetching the weather for two cities at once), is crucial for real-world use: it enables a more intuitive user experience and compatibility with a wider range of APIs.
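
On the application side, a parallel call arrives as one assistant turn carrying several calls, and each result is sent back tagged with the id of the call it answers. The sketch below assumes the OpenAI-style message format (`tool_calls`, `tool_call_id`, role `"tool"`); the assistant turn is hand-written, and `get_weather` is a hypothetical stub.

```python
import json


def get_weather(city: str) -> dict:
    # Hypothetical stub standing in for a real weather API.
    return {"city": city, "forecast": "sunny"}


TOOLS = {"get_weather": get_weather}

# Hand-written example of one assistant turn carrying two parallel calls
# (ids and values are illustrative).
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Tokyo"}'}},
    ],
}


def run_tool_calls(message: dict) -> list[dict]:
    """Execute every call in one assistant turn and build one tool message per call."""
    results = []
    for call in message.get("tool_calls") or []:
        fn = TOOLS[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],  # links each result back to the call that produced it
            "content": json.dumps(fn(**args)),
        })
    return results


for msg in run_tool_calls(assistant_message):
    print(msg["tool_call_id"], msg["content"])
```

The calls here are independent, so they could also run concurrently; the `tool_call_id` pairing keeps the results unambiguous either way.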

Instruction Following

General-purpose models like Llama 3 often struggle to decide when a function call is warranted and may force unnecessary calls. Firefunction-v2, by contrast, recognizes when a function call is relevant to the instruction and otherwise responds like a typical chat model.
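
Because the model may answer directly instead of calling a function, client code should branch on whether a turn actually contains calls rather than assume it does. A minimal sketch, again assuming OpenAI-style messages, with hand-written turns and a hypothetical `get_weather` stub:

```python
import json


def get_weather(city: str) -> str:
    # Hypothetical stub standing in for a real weather API.
    return f"Sunny in {city}"


TOOLS = {"get_weather": get_weather}


def handle_reply(message: dict) -> str:
    """Return the model's text if it answered directly; otherwise run the calls it requested."""
    calls = message.get("tool_calls") or []
    if not calls:
        # No call requested: treat the turn like an ordinary chat reply.
        return message.get("content") or ""
    results = []
    for call in calls:
        fn = TOOLS[call["function"]["name"]]
        results.append(fn(**json.loads(call["function"]["arguments"])))
    return "\n".join(results)


# Two hand-written assistant turns: one plain answer, one function call.
chat_turn = {"role": "assistant", "content": "Hi! How can I help?"}
tool_turn = {"role": "assistant", "content": None, "tool_calls": [
    {"id": "call_1", "type": "function",
     "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}}]}

print(handle_reply(chat_turn))  # Hi! How can I help?
print(handle_reply(tool_turn))  # Sunny in Paris
```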

Getting Started with Firefunction-v2

Ready to experience the power of Firefunction-v2? Get started with the model using Fireworks' comprehensive documentation, which includes sample apps and guides. Fireworks hosts Firefunction-v2 on their platform with a speed-optimized setup and an OpenAI-compatible API, making it easy to integrate the model into your existing projects.
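
Since the API is OpenAI-compatible, a function calling request is an ordinary chat completions body with a `tools` list described in JSON Schema. The sketch below uses only the Python standard library. The base URL, the model ID, and the `FIREWORKS_API_KEY` environment variable name are assumptions to verify against Fireworks' documentation, and `get_weather` is a hypothetical tool.

```python
import json
import os
import urllib.request

# Assumptions: Fireworks' OpenAI-compatible endpoint and the Firefunction-v2 model ID.
BASE_URL = "https://api.fireworks.ai/inference/v1"
MODEL = "accounts/fireworks/models/firefunction-v2"

# One tool described with JSON Schema, in the OpenAI function calling format.
# get_weather is hypothetical and used only for illustration.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def build_request(messages: list[dict], tools: list[dict]) -> dict:
    """Assemble an OpenAI-style /chat/completions body with tool definitions."""
    return {"model": MODEL, "messages": messages, "tools": tools}


def send(body: dict) -> dict:
    """POST the body; assumes the API key is in the FIREWORKS_API_KEY environment variable."""
    req = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['FIREWORKS_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    body = build_request([{"role": "user", "content": "What's the weather in Paris?"}], [WEATHER_TOOL])
    if "FIREWORKS_API_KEY" in os.environ:
        print(send(body)["choices"][0]["message"])  # may contain tool_calls or plain content
    else:
        print(json.dumps(body, indent=2))  # no key: just show the request that would be sent
```

With the official `openai` Python package, passing the same base URL via `OpenAI(base_url=..., api_key=...)` should work equivalently, since the endpoint follows the OpenAI API.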

You can also explore Firefunction-v2's capabilities in the UI playground, where you can add example functions and obtain code snippets for experimentation.

Conclusion

With Firefunction-v2, Fireworks AI aims to provide a model optimized for real-world use in terms of response quality, speed, and cost. Developed with feedback and support from the Fireworks community, Firefunction-v2 has already drawn enthusiastic reactions from beta testers impressed by its real-world readiness.

Fireworks AI invites developers to join their Discord function calling community and share feedback to shape the future of Firefunction models. As Fireworks continues to iterate on these models, they remain dedicated to helping developers productionize generative AI at scale, offering a platform that is fast, cost-efficient, and specialized to your use case.

With Firefunction-v2, the era of production-grade open-source AI is here. Happy building!
