Chameleon is a new family of early-fusion, token-based, mixed-modal AI models. It can understand and generate interleaved text and images in any arbitrary sequence, which opens up new possibilities for multimodal communication and content creation.

Chameleon's capabilities matter because it combines text and images in a single, unified representational space. This paves the way for more natural and intuitive human-machine interactions. Its uses range from generating illustrated visual narratives to analyzing complex documents that mix textual and graphical elements, and it sets a new standard for versatility among AI models.

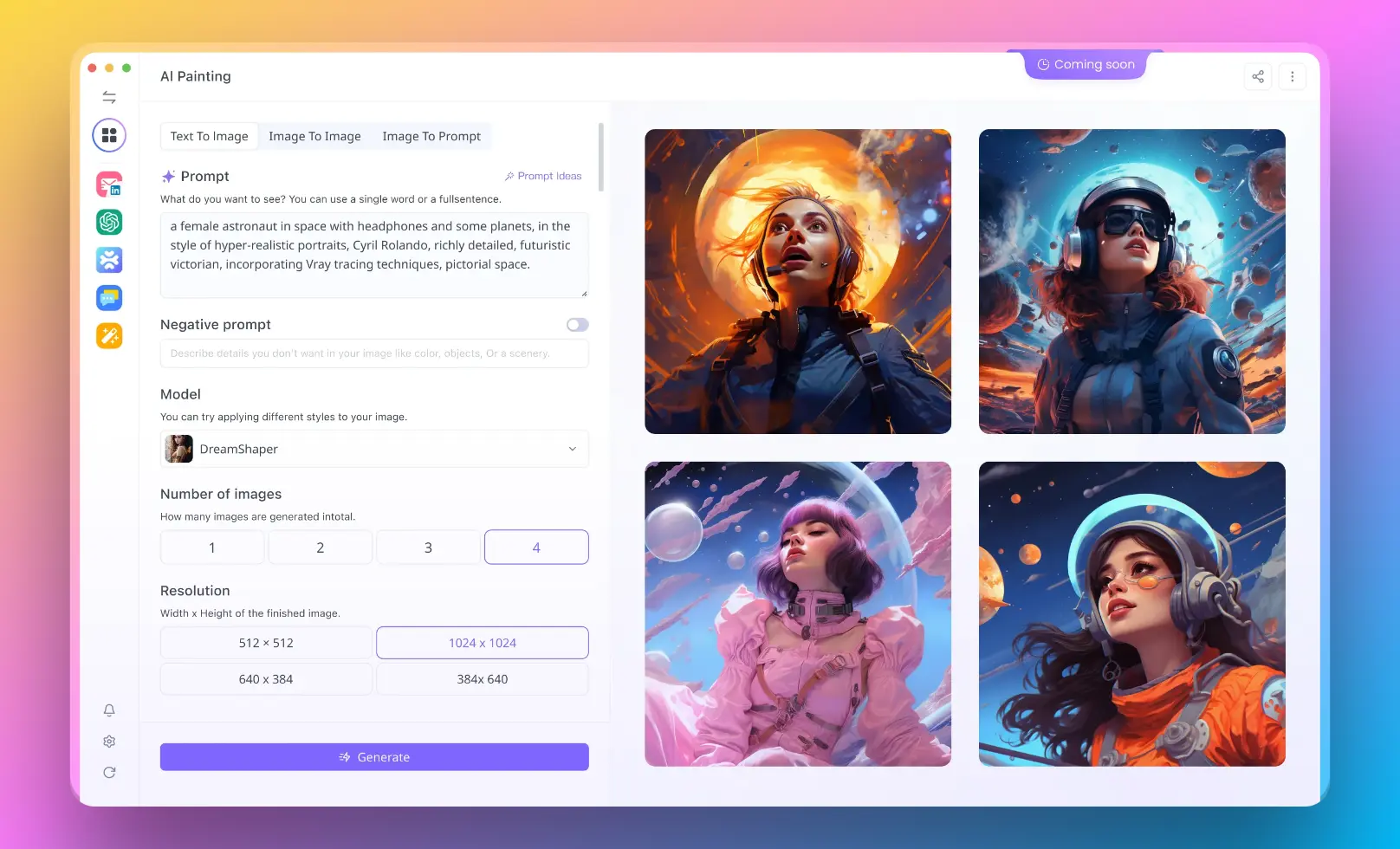

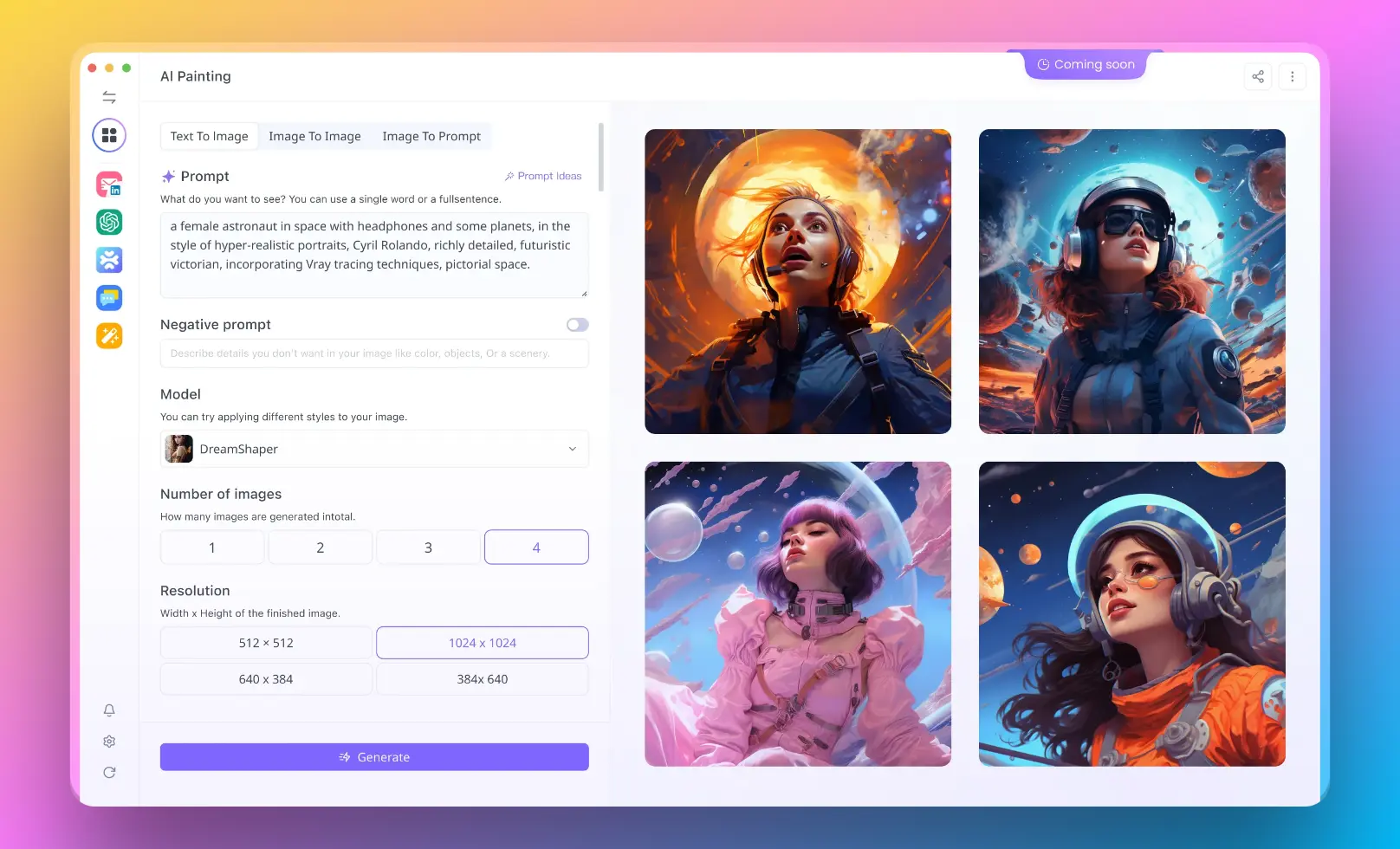


How Chameleon Works

At the heart of Chameleon's architecture lies a novel approach to representing both text and images using discrete tokens in a shared space. This unified representation allows the same transformer architecture to process text and image tokens alike, without modality-specific encoders or decoders inside the model (images are converted to and from tokens by a dedicated image tokenizer).

Here's a high-level overview of how Chameleon operates:

- Tokenization: Images are quantized into discrete tokens, analogous to words in text. This enables a consistent representation across modalities.

- Early Fusion: Text and image tokens are combined into a single sequence, allowing the model to learn relationships and dependencies between the two modalities from the ground up.

- Transformer Architecture: The interleaved token sequence is processed by a standard transformer model, which applies self-attention mechanisms to capture long-range dependencies and generate contextually relevant outputs.

- Stable Training at Scale: Chameleon introduces architectural innovations such as query-key normalization and strategic layer norm placement to ensure training stability even at large scales.
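The tokenization step can be pictured as vector quantization: an image encoder produces one embedding per image patch, and each embedding is replaced by the index of its nearest entry in a learned codebook. The Chameleon paper describes a tokenizer that encodes a 512 x 512 image into 1024 discrete tokens drawn from a codebook of 8,192 entries. The sketch below is a toy version under stated assumptions: the tiny codebook, the 2-D patch embeddings, and the choice to offset image codes past the text vocabulary so the two never collide are all hypothetical, not Chameleon's actual tokenizer.

```python
import math

def nearest_code(vector, codebook):
    """Return the index of the codebook entry closest (Euclidean) to `vector`."""
    return min(range(len(codebook)), key=lambda i: math.dist(vector, codebook[i]))

def quantize_image(patch_embeddings, codebook, text_vocab_size):
    """Map each patch embedding to a discrete token ID in a shared vocabulary.

    Assumption for illustration: image code i becomes token text_vocab_size + i,
    so image token IDs never overlap with text token IDs.
    """
    return [text_vocab_size + nearest_code(p, codebook) for p in patch_embeddings]

codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]  # hypothetical 3-entry codebook
patches = [[0.1, 0.2], [0.9, 0.8], [0.1, 0.9]]   # hypothetical patch embeddings
print(quantize_image(patches, codebook, text_vocab_size=100))  # [100, 101, 102]
```

Because the resulting IDs are ordinary vocabulary entries, they can be placed next to text token IDs in one sequence and processed by a single transformer.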

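To illustrate, here's a simplified code snippet showing how tokenized text and image segments might be combined into a single sequence:

```python
from itertools import zip_longest

def interleave_tokens(text_segments, image_segments):
    # Each segment is a list of token IDs; emit text, image, text, image, ...
    # zip_longest keeps trailing segments that plain zip would silently drop.
    interleaved = []
    for text, image in zip_longest(text_segments, image_segments, fillvalue=[]):
        interleaved.extend(text)
        interleaved.extend(image)
    return interleaved

text_segments = [[101, 102], [103]]    # Hypothetical tokenized text spans
image_segments = [[9001, 9002, 9003]]  # Hypothetical tokenized image
combined_tokens = interleave_tokens(text_segments, image_segments)
# combined_tokens == [101, 102, 9001, 9002, 9003, 103]
# Feed combined_tokens into the transformer model
```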

By treating images and text as a unified sequence of tokens, Chameleon enables seamless reasoning and generation across modalities. The early fusion approach allows the model to capture intricate relationships between text and images, resulting in more coherent and contextually relevant outputs.
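Because everything lives in one sequence, a single causal self-attention mask covers both modalities: an image token can attend to every earlier text token, and later text can attend to earlier image tokens. The minimal sketch below assumes a standard decoder-only causal mask; the modality labels are hypothetical bookkeeping added for illustration, since the model itself only sees token IDs.

```python
def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

def cross_modal_context(modalities):
    """For each image-token position, list the earlier text positions it can attend to."""
    mask = causal_mask(len(modalities))
    return {
        i: [j for j in range(len(modalities)) if mask[i][j] and modalities[j] == "text"]
        for i, m in enumerate(modalities)
        if m == "image"
    }

# Hypothetical sequence: two text tokens, two image tokens, one more text token.
print(cross_modal_context(["text", "text", "image", "image", "text"]))
# {2: [0, 1], 3: [0, 1]}
```

No separate cross-attention module is needed: the ordinary attention pattern already connects the two modalities, which is what early fusion buys.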

The architectural innovations introduced in Chameleon, such as query-key normalization and strategic layer norm placement, are crucial for stable training at scale. These techniques help mitigate issues like uncontrolled norm growth and enable the successful training of large-scale mixed-modal models.
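Query-key normalization can be illustrated in a few lines. The idea is to normalize queries and keys before their dot product, so attention logits cannot blow up as the magnitudes of those vectors grow during training. This is a minimal sketch assuming a single attention head and a LayerNorm without learned gain or bias; it shows the bounding effect, not Chameleon's exact implementation.

```python
import math

def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and unit variance (no learned gain/bias)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def attention_logit(q, k, qk_norm=True):
    """Scaled dot-product logit between one query and one key."""
    if qk_norm:
        # Normalizing q and k caps their lengths, and therefore the logit.
        q, k = layer_norm(q), layer_norm(k)
    return sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q))

q = [1000.0, -1000.0, 500.0, 0.0]  # hypothetical query with a large norm
print(attention_logit(q, q, qk_norm=False))  # 1125000.0
print(attention_logit(q, q))                 # just under 2.0
```

After normalization each vector has a squared length just under d (here d = 4), so by the Cauchy-Schwarz inequality the scaled logit stays below sqrt(d), however large the raw activations become.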

In the next section, we'll explore Chameleon's impressive capabilities and performance across a range of tasks, showcasing its potential to reshape the landscape of multi-modal AI.

Chameleon's Capabilities and Performance

Chameleon demonstrates strong performance across a wide range of vision-language tasks, setting new benchmarks in several key areas:

- State-of-the-art on image captioning: Chameleon-34B is competitive with or better than leading models like Flamingo, IDEFICS, and Llava on the MS-COCO and Flickr30k image captioning benchmarks. Using just 2-shot examples, it achieves a CIDEr score of 120.2 on COCO, surpassing the 32-shot performance of 80B models, and 74.7 on Flickr30k, roughly on par with them.

- Competitive on visual question answering (VQA): On the VQA-v2 benchmark, Chameleon-34B matches the 32-shot accuracy of larger models like Flamingo-80B and IDEFICS-80B using only 2-shot examples. The fine-tuned Chameleon-34B-Multitask variant further improves, approaching the performance of models like IDEFICS-80B-Instruct and Gemini Pro.

At the same time, Chameleon maintains competitive performance on text-only tasks, demonstrating its versatility as a general-purpose model:

- Matches strong language models on commonsense reasoning and reading comprehension: On benchmarks like PIQA, HellaSwag, ARC, and OBQA, Chameleon-7B and Chameleon-34B are competitive with similarly sized models like Llama-2 and Mistral. Chameleon-34B even outperforms the larger Llama-2 70B on 5 out of 8 tasks.

- Exhibits impressive math and world knowledge capabilities: Despite also being trained on images, Chameleon shows strong mathematical reasoning on the GSM8K and MATH benchmarks. Chameleon-34B outperforms Llama-2 70B and approaches the performance of Mixtral 8x7B. On the MMLU benchmark, which tests world knowledge, Chameleon models outperform their Llama-2 counterparts.

But perhaps most impressively, Chameleon unlocks entirely new capabilities in mixed-modal reasoning and open-ended generation:

- Outperforms leading models in long-form mixed-modal responses: In a carefully designed human evaluation, raters judged the quality of long-form mixed-modal responses to open-ended prompts. In pairwise comparisons, Chameleon-34B achieves a 60.4% preference rate against Gemini Pro and 51.6% against GPT-4V: a clear margin over Gemini Pro and a narrow one over GPT-4V.

These results highlight Chameleon's unique strengths as a unified early-fusion model. By seamlessly integrating information across modalities, it enables new possibilities for multi-modal interaction and document understanding. The model's ability to generate coherent mixed-modal outputs in response to open-ended queries represents a significant step forward for the field.

Overall, Chameleon sets a new bar for open multimodal foundation models, achieving state-of-the-art image captioning results and competitive performance on other established vision-language benchmarks while unlocking novel capabilities in mixed-modal reasoning and generation. Its strong results across such a diverse range of tasks showcase the power and potential of the early-fusion token-based approach.

Significance and Potential Applications

Chameleon represents a major step forward in the development of unified multimodal foundation models. By seamlessly integrating text and images into a shared representational space, Chameleon enables new possibilities for reasoning over and generating mixed-modal content. This opens up exciting opportunities for a wide range of applications:

Multimodal document understanding: Chameleon's ability to process interleaved text and images makes it well-suited for analyzing complex documents like scientific papers, instruction manuals, and web pages. It could enable more intelligent information retrieval and knowledge extraction from such sources.

Creative content generation: The model's strong performance on open-ended mixed-modal generation tasks hints at its potential for creative applications. Chameleon could assist in the authoring of illustrated stories, educational content, marketing materials, and more.

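Assistive technologies: Chameleon's multimodal capabilities could be leveraged to develop more natural and intuitive interfaces for people with disabilities. For example, it could power systems that answer questions about photos or screenshots, or that combine written (or transcribed spoken) instructions with visual cues. Inputs such as voice or gestures would first need to be converted into text or images, since Chameleon itself models only those two modalities.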

By jointly modeling text and images, Chameleon sets a new bar for open multimodal foundation models in terms of scale and capabilities. Its strong results on both established benchmarks and the new mixed-modal evaluation demonstrate the immense potential of the early-fusion token-based approach.

Conclusion

In this article, we introduced Chameleon, a new family of early-fusion token-based mixed-modal models. By representing both text and images as discrete tokens in a shared space, Chameleon is able to seamlessly reason over and generate interleaved text-image sequences using a single unified architecture.

The key to Chameleon's success lies in its novel training approach and architectural design. Techniques like query-key normalization and layer norm reordering enable stable optimization at scale, while the fully token-based architecture allows for information integration across modalities in an end-to-end fashion.
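Layer norm reordering can be sketched by comparing the usual pre-norm residual block (as in Llama-2) with a variant that normalizes each sublayer's output before adding it back to the residual stream, which the Chameleon paper reports using for its 34B model. The toy below uses scalars and hypothetical stand-in functions (a sign-like norm, linear "attention" and feed-forward) purely to make the ordering visible; real blocks operate on vectors with learned weights.

```python
def prenorm_block(x, attention, feed_forward, attn_norm, ffn_norm):
    # Pre-norm: normalize the input to each sublayer, add its raw output.
    h = x + attention(attn_norm(x))
    return h + feed_forward(ffn_norm(h))

def reordered_norm_block(x, attention, feed_forward, attn_norm, ffn_norm):
    # Reordered: run the sublayer, then normalize its output before the residual add.
    h = x + attn_norm(attention(x))
    return h + ffn_norm(feed_forward(h))

# Hypothetical scalar stand-ins, chosen only to illustrate the ordering.
def toy_norm(v):
    return v / abs(v) if v else 0.0

def toy_attention(v):
    return 10.0 * v

def toy_feed_forward(v):
    return 3.0 * v

args = (toy_attention, toy_feed_forward, toy_norm, toy_norm)
print(prenorm_block(2.0, *args))         # 15.0
print(reordered_norm_block(2.0, *args))  # 4.0
```

In the reordered variant each residual update is bounded by the norm, no matter how large the sublayer's raw output becomes; that is the intuition behind using it to limit norm growth.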

Through extensive evaluations, Chameleon shows strong results on both established vision-language benchmarks and new mixed-modal reasoning tasks. Chameleon-34B achieves state-of-the-art performance on image captioning, outperforms models like Llama-2 on text-only tasks, and unlocks impressive new capabilities in open-ended multimodal interaction.

Looking forward, Chameleon opens up many exciting research directions. Further scaling the model size and training data could yield even more capable and general-purpose models. Exploring new architectures and training objectives tailored to the mixed-modal setting is another promising avenue. And adapting Chameleon to other modalities like video and audio could enable a truly universal foundation model.

Chameleon marks a significant milestone on the path towards multimodal AI systems that can understand and generate content as flexibly as humans can. With its strong empirical results and the new possibilities it enables, Chameleon sets the stage for a new era of multimodal machine learning research and applications.
